Abstract

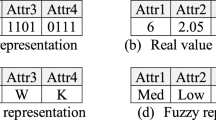

How to rationally inject randomness to control population diversity is still a difficult problem in evolutionary algorithms (EAs). We propose the balanced-evolution genetic algorithm (BEGA) as a case study of this problem. The similarity guide matrix (SGM) is a two-dimensional matrix that expresses the distribution of a population (or subpopulation) in coding space. Unlike binary-coding similarity indexes, SGM is suitable for both binary-coding and symbol-coding problems. In BEGA, the opposite-direction and forward-direction regions are each defined by using one of two SGMs as a reference point. In the opposite-direction region, the diversity subpopulation tries to increase the Hamming distances between its individuals and the current population. In the forward-direction region, the intensification subpopulation tries to decrease the Hamming distances between its individuals and the current elite population. Thus, the diversity subpopulation is better suited for injecting randomness. The linear diversity index (LDI) measures the individual density around a center-point individual in coding space and is characterized by its linearity. According to LDI, we control the search-region ranges of the diversity and intensification subpopulations by using negative and positive perturbations, respectively. In this way, the search effort is balanced between exploration and exploitation. We compared BEGA with CHC, the dual-population genetic algorithm, the variable dissortative mating genetic algorithm, the quantum-inspired evolutionary algorithm, and the greedy genetic algorithm on 12 benchmarks. The experimental results were acceptable. In addition, BEGA, as an EA-based solver, can directly solve the bounded knapsack problem (a symbol-coding problem) without first transforming it into an equivalent binary knapsack problem.
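The abstract does not say how SGM is computed. The sketch below is a hypothetical illustration only. It assumes an SGM-like matrix that counts how often each symbol appears at each gene position, and this is not the authors' definition. It shows why a symbol-by-position matrix handles binary and symbol coding alike: the mean Hamming distance from any individual to the whole population can be read directly from the counts. The function names `frequency_matrix` and `mean_hamming_distance` are hypothetical.

```python
def frequency_matrix(population, alphabet):
    """Count how often each symbol occurs at each gene position.

    Hypothetical stand-in for an SGM: rows are symbols, columns are positions.
    """
    length = len(population[0])
    counts = {symbol: [0] * length for symbol in alphabet}
    for individual in population:
        for position, symbol in enumerate(individual):
            counts[symbol][position] += 1
    return counts


def mean_hamming_distance(x, counts, pop_size):
    """Average Hamming distance from x to every individual, read off the matrix.

    At each position, x differs from (pop_size - count of x's symbol) individuals.
    """
    mismatches = sum(pop_size - counts[symbol][position]
                     for position, symbol in enumerate(x))
    return mismatches / pop_size


# Usage: a symbol-coded population over the alphabet {0, 1, 2, 3}.
population = [[0, 2, 1], [0, 3, 1], [1, 2, 1]]
counts = frequency_matrix(population, alphabet=range(4))
print(mean_hamming_distance([0, 2, 1], counts, len(population)))
```

Because the matrix stores per-position counts, computing a distance costs O(N) per individual, and no pairwise comparison with every population member is needed.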


References

Alba E, Dorronsoro B (2005) The exploration/exploitation tradeoff in dynamic cellular genetic algorithms. IEEE Trans Evol Comput 9:126–142. https://doi.org/10.1109/TEVC.2005.843751

Baluja S (1992) A massively distributed parallel genetic algorithm. Tech. Rep. No. CMU-CS-92-196R. Carnegie Mellon University

Burke E, Gustafson S, Kendall G, Krasnogor N (2002) Advanced population diversity measures in genetic programming. In: Proceedings of parallel problem solving from nature. Springer, pp 341–350. https://doi.org/10.1007/3-540-45712-7_33

Burke E, Gustafson S, Kendall G (2004) Diversity in genetic programming: an analysis of measures and correlation with fitness. IEEE Trans Evol Comput 8:47–62. https://doi.org/10.1109/TEVC.2003.819263

Cao ZJ, Shi YH, Rong XF, Liu BL, Du ZQ, Yang B (2015) Random grouping brain storm optimization algorithm with a new dynamically changing step size. In: Proceedings of the International Conference on Swarm Intelligence, Lecture Notes in Computer Science. Springer, pp 357–364. https://doi.org/10.1007/978-3-319-20466-6_38

Chen G, Low CP, Yang ZH (2009) Preserving and exploiting genetic diversity in evolutionary programming algorithms. IEEE Trans Evol Comput 13:661–673. https://doi.org/10.1109/TEVC.2008.2011742

Črepinšek M, Liu SH, Mernik M (2013) Exploration and exploitation in evolutionary algorithms: a survey. ACM Comput Surv 45:1–33. https://doi.org/10.1145/2480741.2480752

Darwen PJ, Yao X (2001) Why more choices cause less cooperation in iterated prisoner’s dilemma. In: Proceedings of the 2001 Congress on Evolutionary Computation. IEEE, pp 987–994. https://doi.org/10.1109/cec.2001.934298

De Jong K (1975) An analysis of the behavior of a class of genetic adaptive systems. Dissertation, University of Michigan

De Jong K (2007) Parameter setting in EAs: a 30 year perspective. In: Lobo FG, Lima CF, Michalewicz Z (eds) Parameter setting in evolutionary algorithms. Springer, Berlin, pp 1–18

Dekkers A, Aarts E (1991) Global optimization and simulated annealing. Math Program 50:367–393. https://doi.org/10.1007/BF01594945

Eiben AE, Schippers C (1998) On evolutionary exploration and exploitation. Fundam Inform 35:35–50. https://doi.org/10.3233/FI-1998-35123403

Eiben AE, Smit SK (2011) Parameter tuning for configuring and analyzing evolutionary algorithms. Swarm Evol Comput 1:19–31. https://doi.org/10.1016/j.swevo.2011.02.001

Eiben AE, Hinterding R, Michalewicz Z (1999) Parameter control in evolutionary algorithms. IEEE Trans Evol Comput 3:124–141. https://doi.org/10.1109/4235.771166

Eshelman LJ (1991) The CHC adaptive search algorithm: how to have safe search when engaging in nontraditional genetic recombination. In: Rawlins GJE (ed) Foundations of genetic algorithms, vol 1. Morgan Kaufmann Publishers Inc, San Francisco, pp 265–283

Fernandes C, Rosa A (2006) Self-regulated population size in evolutionary algorithms. In: Runarsson TP, Beyer HG et al (eds) Parallel problem solving from nature–PPSN IX. Springer, Iceland, pp 920–929

Fernandes C, Rosa A (2008) Self-adjusting the intensity of assortative mating in genetic algorithms. Soft Comput 12:955–979. https://doi.org/10.1007/s00500-007-0265-9

García-Martínez C, Lozano M (2008) Local search based on genetic algorithms. In: Siarry P, Michalewicz Z (eds) Advances in metaheuristics for hard optimization. Springer, Berlin, pp 199–221

Glover F (1977) Heuristics for integer programming using surrogate constraints. Decis Sci 8:156–166. https://doi.org/10.1111/j.1540-5915.1977.tb01074.x

Han KH, Kim JH (2002) Quantum-inspired evolutionary algorithm for a class of combinatorial optimization. IEEE Trans Evol Comput 6:580–593. https://doi.org/10.1109/TEVC.2002.804320

Harik GR, Lobo FG, Goldberg DE (1999) The compact genetic algorithm. IEEE Trans Evol Comput 3:287–297. https://doi.org/10.1109/4235.797971

Holland JH (1975) Adaptation in natural and artificial systems. The MIT Press, Ann Arbor

Jaccard P (1912) The distribution of the flora in the alpine zone. New Phytol 11:37–50. https://doi.org/10.1111/j.1469-8137.1912.tb05611.x

Koumousis VK, Katsaras CP (2006) A saw-tooth genetic algorithm combining the effects of variable population size and reinitialization to enhance performance. IEEE Trans Evol Comput 10:19–28. https://doi.org/10.1109/TEVC.2005.860765

Lacevic B, Amaldi E (2010) On population diversity measures in Euclidean space. In: Proceedings of the 2010 Congress on Evolutionary Computation. IEEE, pp 1–8. https://doi.org/10.1109/cec.2010.5586498

Lacevic B, Konjicija S, Avdagic Z (2007) Population diversity measure based on singular values of the distance matrix. In: Proceedings of the 2007 Congress on Evolutionary Computation. IEEE, pp 1863–1869. https://doi.org/10.1109/cec.2007.4424700

Lee CY, Yao X (2004) Evolutionary programming using mutations based on the Lévy probability distribution. IEEE Trans Evol Comput 8:1–13. https://doi.org/10.1109/TEVC.2003.816583

Lozano M, Herrera F, Cano JR (2008) Replacement strategies to preserve useful diversity in steady-state genetic algorithms. Inf Sci 178:4421–4433. https://doi.org/10.1016/j.ins.2008.07.031

Martello S, Toth P (1990) Knapsack problems: algorithms and computer implementations. Wiley, Hoboken

Martello S, Pisinger D, Toth P (1999) Dynamic programming and strong bounds for the 0–1 knapsack problem. Manag Sci 45:414–424. https://doi.org/10.1287/mnsc.45.3.414

Mattiussi C, Waibel M, Floreano D (2004) Measures of diversity for populations and distances between individuals with highly reorganizable genomes. Evol Comput 12:495–515. https://doi.org/10.1162/1063656043138923

McGinley B, Maher J, O’Riordan C, Morgan F (2011) Maintaining healthy population diversity using adaptive crossover, mutation, and selection. IEEE Trans Evol Comput 15:692–714. https://doi.org/10.1109/TEVC.2010.2046173

Morrison RW, De Jong K (2002) Measurement of population diversity. In: Collet P, Fonlupt C, Hao J, Lutton E, Schoenauer M (eds) Artificial evolution. Springer, France, pp 31–41

Park T, Ryu KR (2010) A dual-population genetic algorithm for adaptive diversity control. IEEE Trans Evol Comput 14:865–884. https://doi.org/10.1109/TEVC.2010.2043362

Pelikan M, Goldberg DE, Cantú-Paz E (2000) Linkage problem, distribution estimation, and Bayesian networks. Evol Comput 8:311–340. https://doi.org/10.1162/106365600750078808

Pisinger D (1999) Core problems in knapsack algorithms. Oper Res 47:570–575. https://doi.org/10.1287/opre.47.4.570

Platel MD, Schliebs S, Kasabov N (2009) Quantum-inspired evolutionary algorithm: a multimodel EDA. IEEE Trans Evol Comput 13:1218–1232. https://doi.org/10.1109/TEVC.2008.2003010

Resende MGC, Ribeiro CC, Glover F, Martí R (2010) Scatter search and path-relinking: fundamentals, advances, and applications. In: Gendreau M, Potvin JY (eds) Handbook of metaheuristics. Springer, Berlin, pp 87–107

Shi YH (2015) Brain storm optimization algorithm in objective space. In: IEEE Congress on Evolutionary Computation. IEEE, pp 1227–1234. https://doi.org/10.1109/cec.2015.7257029

Smit SK, Eiben AE (2009) Comparing parameter tuning methods for evolutionary algorithms. In: Proceedings of the 2009 Congress on Evolutionary Computation. IEEE, pp 399–406. https://doi.org/10.1109/cec.2009.4982974

Spears WM (2000) Evolutionary algorithms: the role of mutation and recombination. Springer, Berlin

Truong TK, Li KL, Xu YM (2013) Chemical reaction optimization with greedy strategy for the 0–1 knapsack problem. Appl Soft Comput 13:1774–1780. https://doi.org/10.1016/j.asoc.2012.11.048

Ursem RK (2002) Diversity-guided evolutionary algorithms. In: Guervós JJM, Adamidis P et al (eds) Parallel problem solving from nature–PPSN VII. Springer, Espana, pp 462–471

Zhan ZH, Zhang J, Shi YH, Liu HL (2012) A modified brain storm optimization. In: IEEE Congress on Evolutionary Computation. IEEE, pp 1–8. https://doi.org/10.1109/cec.2012.6256594

Zhang J, Chung HSH, Lo W (2007) Clustering-based adaptive crossover and mutation probabilities for genetic algorithms. IEEE Trans Evol Comput 11:326–335. https://doi.org/10.1109/TEVC.2006.880727

Zhu HY, Shi YH (2015) Brain storm optimization algorithms with K-medians clustering algorithms. In: International Conference on Advanced Computational Intelligence. IEEE, pp 107–110. https://doi.org/10.1109/icaci.2015.7184758

Zitzler E (1999) Evolutionary algorithms for multiobjective optimization: methods and applications. Dissertation, Swiss Federal Institute of Technology Zurich

Acknowledgements

The authors would like to thank the anonymous reviewers for their constructive comments, especially those that helped improve the concepts of the similarity guide matrix and the linear diversity index. This work was supported by the National Natural Science Foundation of China (Grant No. 61272518) and the YangFan Innovative and Entrepreneurial Research Team Project of Guangdong Province.


Appendices

Appendix 1

The trap problem consists of k basic functions, and its fitness is the sum of the fitness values of the k basic functions (García-Martínez and Lozano 2008). The optimal solution of a basic function is the all-ones string, which has a fitness value of 220 (Table 3). The basic function is defined by

Analogous to the trap problem, the basic functions of the order-3, order-4, and partially deceptive problems (García-Martínez and Lozano 2008; Baluja 1992) are given in Tables 4, 5, and 6, respectively.
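Tables 3 to 6 are not reproduced in this text. The sketch below therefore shows only the general "sum of basic functions" structure. It assumes equal-length, non-overlapping blocks, which may not match every benchmark here. The lookup table is an illustrative deceptive trap (the all-ones string is the global optimum, and the all-zeros string is a deceptive local optimum). It is not the table from the paper, so replace it with the values from Tables 3 to 6. The names `block_sum_fitness` and `trap_table` are hypothetical.

```python
from itertools import product


def block_sum_fitness(x, block, basic):
    """Sum a basic function over consecutive, non-overlapping blocks of `block` bits."""
    if len(x) % block != 0:
        raise ValueError("coding length must be a multiple of the block length")
    return sum(basic[tuple(x[i:i + block])] for i in range(0, len(x), block))


def trap_table(block):
    """Illustrative deceptive trap (not the values of Tables 3-6).

    All ones scores `block` (global optimum); otherwise the score is
    block - 1 - (number of ones), so all zeros is a deceptive local optimum.
    """
    table = {}
    for bits in product((0, 1), repeat=block):
        ones = sum(bits)
        table[bits] = block if ones == block else block - 1 - ones
    return table


basic = trap_table(3)
print(block_sum_fitness([1, 1, 1, 0, 0, 0], 3, basic))  # 3 + 2 = 5
```

The basic function is keyed by the full bit pattern, not by the number of ones. This lets tables that are not functions of unitation alone (such as partially deceptive ones) use the same evaluator.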

The overlapping deceptive problem (Pelikan et al. 2000) is defined by

where i is the first position of each substring X[i:i+2], N is the coding length, and u is the number of ones in the substring X[i:i+2].
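The defining equation is missing from this text, so the sketch below rests on two loud assumptions. First, the basic function is a 3-bit deceptive function of the number of ones u, with the values 0.9, 0.8, 0, and 1 for u = 0, 1, 2, 3. Second, consecutive substrings X[i:i+2] start every two positions, so that neighbours share one bit. Both the values and the `step` parameter are hypothetical and should be checked against the paper's equation. Note that X[i:i+2] here includes both ends (3 bits), which corresponds to the Python slice `x[i:i + 3]`.

```python
def deceptive3(u):
    """Assumed 3-bit deceptive values, indexed by the number of ones u."""
    return {0: 0.9, 1: 0.8, 2: 0.0, 3: 1.0}[u]


def overlapping_deceptive(x, step=2):
    """Sum deceptive3 over the substrings X[i:i+2] (both ends included, i.e. 3 bits).

    Substrings start every `step` positions; step=2 (hypothetical default) makes
    neighbouring substrings share one bit, so N should be odd.
    """
    n = len(x)
    return sum(deceptive3(sum(x[i:i + 3])) for i in range(0, n - 2, step))


print(overlapping_deceptive([1] * 7))  # substrings start at i = 0, 2, 4
```

The shared bits are what make the problem hard. Setting one shared bit to suit the left substring can push the right substring toward its deceptive attractor.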

The PPeaks problem (Spears 2000), whose optimal value is 1.0, is defined by

where Hamdis() returns the Hamming distance between X and Peak_i (an N-bit string).
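The equation itself is missing here. The sketch below follows the common form of the P-Peaks generator: fitness is the largest value of (N − Hamdis(X, Peak_i)) / N over all peaks. This form reaches the stated optimum of 1.0 exactly when X coincides with a peak. The number of peaks `p`, the random `seed`, and the helper `make_peaks` are hypothetical instance parameters, not settings taken from the paper.

```python
import random


def hamdis(x, y):
    """Hamming distance between two equal-length bit strings."""
    return sum(a != b for a, b in zip(x, y))


def make_peaks(p, n, seed=None):
    """Draw p random N-bit peaks (instance generator; p and seed are free parameters)."""
    rng = random.Random(seed)
    return [[rng.randint(0, 1) for _ in range(n)] for _ in range(p)]


def ppeaks(x, peaks):
    """P-Peaks fitness: max over peaks of (N - Hamdis(x, peak)) / N."""
    n = len(x)
    return max(n - hamdis(x, peak) for peak in peaks) / n


peaks = make_peaks(p=10, n=32, seed=1)
print(ppeaks(peaks[0], peaks))  # a peak itself reaches the optimum: 1.0
```

More peaks make the landscape more multimodal, because each peak is the centre of its own basin of attraction.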

The binary knapsack problem is defined as follows. Let pj be the profit of a type-j item, let wj be the weight of a type-j item, and let C be the weight capacity of the knapsack. X = {x1, x2, …, xj, …, xN} is a binary decision vector: xj = 1 if a type-j item is loaded into the knapsack, and xj = 0 otherwise. The binary knapsack problem is defined by Eqs. (25) and (27).

First, we use the methods of generating uncorrelated, weakly correlated, and strongly correlated datasets (Martello et al. 1999; Pisinger 1999; Truong et al. 2013). Uncorrelated dataset: pj and wj are randomly distributed in (10, R). Weakly correlated dataset: wj is randomly distributed in (1, R), and pj (pj ≥ 1) is randomly distributed in (wj − R/10, wj + R/10). Strongly correlated dataset: wj is randomly distributed in (1, R), and pj = wj + 10. In this paper, R = 100.

Second, we use the constraint-handling method of Zitzler (1999). Items with the lowest profit/weight ratio qj = pj/wj (1 ≤ j ≤ N) are removed from the knapsack first, one by one, until the capacity constraint is satisfied.
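The three dataset types above can be sketched as follows. This is a minimal sketch, assuming uniform integer draws with inclusive bounds, which is one reading of the open-interval notation (a, b) in the text. For the weakly correlated case, the lower bound is clipped so that pj ≥ 1. The `kind` labels and the function name are hypothetical. The capacity C is not specified in this paragraph, so it is not generated here.

```python
import random


def generate_items(n, kind, R=100, seed=None):
    """Generate profits p and weights w for n item types.

    Assumes uniform integer draws with inclusive bounds, which is one reading
    of the open-interval notation in the text.
    """
    rng = random.Random(seed)
    profits, weights = [], []
    for _ in range(n):
        if kind == "uncorrelated":
            w = rng.randint(10, R)
            p = rng.randint(10, R)
        elif kind == "weakly":
            w = rng.randint(1, R)
            # p lies within R/10 of w and is clipped so that p >= 1
            p = rng.randint(max(1, w - R // 10), w + R // 10)
        elif kind == "strongly":
            w = rng.randint(1, R)
            p = w + 10
        else:
            raise ValueError(f"unknown dataset kind: {kind!r}")
        profits.append(p)
        weights.append(w)
    return profits, weights


profits, weights = generate_items(50, "strongly", seed=0)
```

Strongly correlated instances are usually the hardest of the three. Every item has almost the same profit/weight ratio, so ratio-based greedy choices carry little information.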

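$$\max \sum_{j=1}^{N} p_j x_j$$

subject to

$$\sum_{j=1}^{N} w_j x_j \le C, \qquad x_j \in \{0, 1\}, \quad j = 1, \dots, N$$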
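The objective and the ratio-based repair described for the binary knapsack problem can be sketched as follows. It is written for a single knapsack under the text's description: loaded items are unloaded in ascending order of qj = pj/wj until the total weight fits within C. The function names are hypothetical.

```python
def total_profit(x, profits):
    """Objective: sum of p_j * x_j."""
    return sum(p * xj for p, xj in zip(profits, x))


def repair_binary(x, profits, weights, capacity):
    """Unload items in ascending order of q_j = p_j / w_j until the load fits C."""
    x = list(x)
    load = sum(w * xj for w, xj in zip(weights, x))
    loaded = sorted((j for j, xj in enumerate(x) if xj == 1),
                    key=lambda j: profits[j] / weights[j])
    for j in loaded:
        if load <= capacity:
            break
        x[j] = 0
        load -= weights[j]
    return x


profits, weights = [10, 6, 8], [5, 6, 2]
x = repair_binary([1, 1, 1], profits, weights, capacity=7)
print(x, total_profit(x, profits))  # [1, 0, 1] 18
```

Here item 1 has the lowest ratio (6/6 = 1.0), so it is removed first. Removing it brings the load from 13 down to 7, which already satisfies C = 7, so the other items stay loaded.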

The bounded knapsack problem is also formulated by Eqs. (25) and (27). The difference is that xj expresses how many type-j items are loaded into the knapsack. First, we use the same methods (Martello et al. 1999; Pisinger 1999) to generate test datasets for the bounded knapsack problem. Second, the constraint-handling method is similar to that of the binary knapsack problem, except that it gradually decreases the number of each item. In this paper, bmax = 4, and each bound bj (the maximum number of type-j items) is randomly generated such that 1 ≤ bj ≤ bmax. We assume that pj, wj, bj, and C are greater than 0 and
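The bounded variant of the repair can be sketched as follows. The text says only that the method "gradually decreases the number of each item". The one-unit-at-a-time, lowest-ratio-first order used here is an assumption that mirrors the binary repair. The bounds bj are drawn uniformly from 1..bmax with bmax = 4, as stated. The helper names are hypothetical.

```python
import random


def random_bounds(n, bmax=4, seed=None):
    """Draw each bound b_j uniformly from 1..bmax."""
    rng = random.Random(seed)
    return [rng.randint(1, bmax) for _ in range(n)]


def repair_bounded(x, profits, weights, capacity):
    """Remove one unit at a time, starting with the lowest p_j / w_j, until feasible."""
    x = list(x)
    load = sum(w * xj for w, xj in zip(weights, x))
    for j in sorted(range(len(x)), key=lambda j: profits[j] / weights[j]):
        while x[j] > 0 and load > capacity:
            x[j] -= 1
            load -= weights[j]
        if load <= capacity:
            break
    return x


x = repair_bounded([2, 1, 3], [10, 6, 8], [5, 6, 2], capacity=15)
print(x)  # [1, 0, 3]
```

Each xj stays a single symbol in 0..bj throughout, so the repair works directly on the symbol coding. No binary expansion is needed.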

Appendix 2

CHC uses cross-generational elitist selection, heterogeneous recombination, and cataclysmic mutation (Eshelman 1991). Two parents are allowed to mate only when the Hamming distance between them is greater than a threshold. CHC applies mutation only when the threshold drops to zero. It then reinitializes the population while keeping the best individual.
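The three CHC mechanisms can be sketched as follows. The mating test follows the text: distance greater than the threshold. Heterogeneous recombination is implemented as half-uniform crossover (HUX), which swaps exactly half of the differing bits. The restart mutates copies of the best individual. The threshold value (L/4 for coding length L) and the flip rate (0.35) in the usage comment are commonly cited settings and are assumptions here, not values from this paper. All function names are hypothetical.

```python
import random


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def hux(a, b, rng):
    """Half-uniform crossover: swap exactly half of the differing bits."""
    diff = [i for i in range(len(a)) if a[i] != b[i]]
    rng.shuffle(diff)
    c1, c2 = list(a), list(b)
    for i in diff[: len(diff) // 2]:
        c1[i], c2[i] = b[i], a[i]
    return c1, c2


def recombine(population, threshold, rng):
    """Pair parents at random; a pair mates only if its Hamming distance exceeds threshold."""
    parents = population[:]
    rng.shuffle(parents)
    offspring = []
    for a, b in zip(parents[0::2], parents[1::2]):
        if hamming(a, b) > threshold:
            offspring.extend(hux(a, b, rng))
    return offspring


def cataclysm(best, pop_size, flip_rate, rng):
    """Restart: keep the best individual and fill the rest with mutated copies of it."""
    population = [list(best)]
    for _ in range(pop_size - 1):
        population.append([1 - g if rng.random() < flip_rate else g for g in best])
    return population


rng = random.Random(0)
pop = [[rng.randint(0, 1) for _ in range(16)] for _ in range(10)]
children = recombine(pop, threshold=16 // 4, rng=rng)  # assumed threshold L/4
```

In the full algorithm, the threshold is lowered whenever a generation produces no surviving offspring. The `cataclysm` call (for example with `flip_rate=0.35`) is triggered once the threshold reaches zero.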

DPGA (Park and Ryu 2010) and VDMGA (Fernandes and Rosa 2006, 2008) are given in Algorithms 5 and 6, respectively.

QEA (Han and Kim 2002; Platel et al. 2009) is inspired by the principles of quantum computing. The quantum gate U(θ) is given in Eq. (31), where θ = s(αj, βj) × Delta, with s(αj, βj) and Delta taken from Table 7.
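Eq. (31) and Table 7 are not reproduced in this text. The sketch below uses the standard 2×2 rotation-gate form from the QEA literature, applied to one qubit's amplitude pair (α, β), together with the usual observation step in which a bit collapses to 1 with probability β². The angle `delta` and the uniform "rotate toward 1" update are hypothetical. In QEA, the sign and size of θ come from the lookup s(αj, βj) × Delta in Table 7.

```python
import math
import random


def rotate(alpha, beta, theta):
    """Apply the rotation gate U(theta) = [[cos, -sin], [sin, cos]] to one qubit."""
    c, s = math.cos(theta), math.sin(theta)
    return c * alpha - s * beta, s * alpha + c * beta


def observe(qubits, rng):
    """Collapse each qubit (alpha, beta) to bit 1 with probability beta**2."""
    return [1 if rng.random() < beta ** 2 else 0 for _, beta in qubits]


# Every qubit starts in an equal superposition, so each bit is 1 with probability 0.5.
n = 8
qubits = [(1 / math.sqrt(2), 1 / math.sqrt(2))] * n
rng = random.Random(0)
x = observe(qubits, rng)
# Hypothetical update: nudge every qubit toward 1 by a small angle.
# In QEA the angle is s(alpha_j, beta_j) * Delta, looked up from Table 7.
delta = 0.01 * math.pi
qubits = [rotate(a, b, delta) for a, b in qubits]
```

A rotation preserves α² + β² = 1, so the qubits remain valid probability amplitudes after any number of updates.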

For the bounded knapsack problem (a symbol-coding problem), dynamic programming, branch-and-bound, and reduction algorithms are frequently used (Martello and Toth 1990). Another approach transforms the bounded knapsack problem into an equivalent binary knapsack problem (Martello and Toth 1990). However, this increases the coding length and therefore the computational cost. Little EA research solves the bounded knapsack problem directly. Therefore, GGA is used as the comparison algorithm for the bounded knapsack problem.
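To make the coding-length cost concrete, the sketch below applies one standard transformation, binary splitting. This is an assumption about which transformation is meant. Each item type j with bound bj becomes 0-1 items of multiplicity 1, 2, 4, …, plus a remainder, that is, ⌈log2(bj + 1)⌉ binary variables in place of one symbol. The function name is hypothetical.

```python
def to_binary_items(profits, weights, bounds):
    """Split each bounded item type j into 0-1 items of multiplicity 1, 2, 4, ..., remainder.

    Any count 0..b_j of type j is a subset sum of these multiplicities, so a 0-1
    solver over the new items covers the bounded problem.
    """
    items = []  # (profit, weight, original type j, multiplicity)
    for j, (p, w, b) in enumerate(zip(profits, weights, bounds)):
        chunk, remaining = 1, b
        while remaining > 0:
            m = min(chunk, remaining)
            items.append((m * p, m * w, j, m))
            remaining -= m
            chunk *= 2
    return items


items = to_binary_items([10, 6, 8], [5, 6, 2], bounds=[4, 1, 3])
print(len(items))  # 6 binary variables instead of 3 symbol-coded ones
```

With bmax = 4, each symbol can expand into as many as three bits. This is the growth in coding length that a direct symbol-coding solver avoids.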


About this article

Cite this article

Zhang, H., Liu, Y. & Zhou, J. Balanced-evolution genetic algorithm for combinatorial optimization problems: the general outline and implementation of balanced-evolution strategy based on linear diversity index. Nat Comput 17, 611–639 (2018). https://doi.org/10.1007/s11047-018-9670-5


DOI: https://doi.org/10.1007/s11047-018-9670-5