Abstract

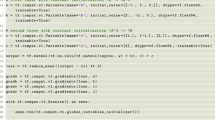

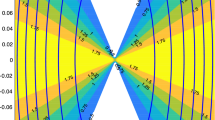

This paper investigates an online gradient method with penalty for training feedforward neural networks with linear output. The penalty considered is the usual one: a term proportional to the norm of the weights. The main contribution of this paper is a theoretical proof that the weights remain bounded during the training process. This boundedness is then used to prove almost sure convergence of the algorithm to the zero set of the gradient of the error function.
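A minimal sketch of the kind of training loop described above, under several assumptions that are not taken from the paper: a single hidden layer of tanh units without bias terms, one linear output unit, per-sample squared error, and a penalty of λ times the squared Euclidean norm of all weights (weight decay), split evenly over the J samples so that one full epoch contributes λ‖W‖². The function name `train_online_penalty`, the parameters `n_hidden`, `eta0` and `lam`, the reshuffled sample order in each epoch, and the step-size schedule η_m = η_0/(1 + 0.01m) are hypothetical illustrative choices, not the authors' algorithm or convergence conditions.

```python
import math
import random

def train_online_penalty(samples, n_hidden=4, eta0=0.1, lam=1e-3, epochs=200, seed=0):
    """Hypothetical sketch: online gradient training with an L2 (weight-decay)
    penalty for a one-hidden-layer network with tanh hidden units and a
    linear output unit (no bias terms, by assumption).

    samples: list of (x, y) pairs, x a list of floats, y a float.
    Returns (V, w, history): hidden weights V, output weights w, and the
    penalized batch error recorded after each epoch.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    V = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    J = len(samples)

    def forward(x):
        h = [math.tanh(sum(v * xk for v, xk in zip(vi, x))) for vi in V]
        return h, sum(wi * hi for wi, hi in zip(w, h))  # linear output unit

    def penalized_error():
        err = sum(0.5 * (forward(x)[1] - y) ** 2 for x, y in samples)
        norm2 = sum(wi * wi for wi in w) + sum(v * v for vi in V for v in vi)
        return err + lam * norm2

    history = []
    for m in range(epochs):
        eta = eta0 / (1 + 0.01 * m)  # assumed diminishing step size
        order = list(range(J))
        rng.shuffle(order)  # assumed random presentation order per epoch
        for j in order:
            x, y = samples[j]
            h, out = forward(x)
            e = out - y
            # Gradient of 0.5*e^2 + (lam/J)*||W||^2 for the current sample.
            for i in range(n_hidden):
                delta_i = e * w[i] * (1 - h[i] ** 2)  # uses w[i] before its update
                w[i] -= eta * (e * h[i] + 2 * lam / J * w[i])
                for k in range(n_in):
                    V[i][k] -= eta * (delta_i * x[k] + 2 * lam / J * V[i][k])
        history.append(penalized_error())
    return V, w, history
```

For example, fitting a few samples of sin(x) on [-2, 2] and inspecting `max(abs(...))` over `V` and `w` after each epoch is one informal way to watch the weights stay bounded; the returned `history` holds the penalized error after every epoch.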

About this article

Cite this article

Zhang, H., Wu, W. Boundedness and Convergence of Online Gradient Method with Penalty for Linear Output Feedforward Neural Networks. Neural Process Lett 29, 205–212 (2009). https://doi.org/10.1007/s11063-009-9104-6

DOI: https://doi.org/10.1007/s11063-009-9104-6