Abstract

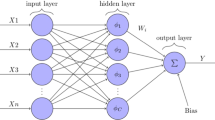

There have been many attempts to use artificial neural networks (ANNs) for solving optimization problems, and some types of ANNs, such as the Hopfield network and the Boltzmann machine, have been applied to combinatorial optimization problems. However, the use of ANNs as optimizers is subject to several restrictions. For example, (1) ANNs cannot optimize problems with continuous variables; (2) discrete problems must be mapped onto the neural network's architecture; and (3) most existing neural networks are applicable only to a class of smooth optimization problems and require global convexity conditions on the objective functions and constraints. In this paper, we introduce a new procedure for stochastic optimization using a recurrent ANN. The concept of fractional calculus is adopted to propose a novel weight-updating rule. The introduced method is called the fractional-neuro-optimizer (FNO). This method starts with an initial solution and adjusts the network's weights using a new heuristic, unsupervised rule to reach a good solution. The efficiency of FNO is compared with that of the genetic algorithm and particle swarm optimization. Finally, the proposed FNO is used to determine the parameters of a proportional–integral–derivative (PID) controller for an automatic voltage regulator (AVR) power system and is applied to the design of water distribution networks.
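The abstract states that FNO builds its weight-updating rule on fractional calculus but does not give the rule itself. The following is a minimal Python sketch, assuming the Grünwald–Letnikov (GL) definition as the fractional operator, that illustrates only the general idea of a long-memory, stochastic, unsupervised update. The names `gl_coefficients`, `gl_derivative`, and `fractional_memory_search`, and the parameters `alpha`, `step`, `memory`, `iters`, and `seed`, are hypothetical and do not come from the paper; the search loop is a generic greedy random search with fractional memory, not the FNO algorithm.

```python
import random


def gl_coefficients(alpha, n):
    """First n Grünwald-Letnikov weights w_k = (-1)**k * binom(alpha, k)."""
    w = [1.0]
    for k in range(1, n):
        # Standard recurrence: w_k = (1 - (alpha + 1) / k) * w_{k-1}
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def gl_derivative(samples, alpha, h=1.0):
    """Approximate D^alpha f at the newest sample.

    `samples` holds f(t_0), ..., f(t_n) on a uniform grid of spacing h,
    ordered oldest -> newest; every past sample contributes (long memory).
    """
    w = gl_coefficients(alpha, len(samples))
    total = sum(wk * samples[-1 - k] for k, wk in enumerate(w))
    return total / h ** alpha


def fractional_memory_search(f, x0, alpha=0.5, step=0.5, memory=10,
                             iters=2000, seed=0):
    """Hypothetical stochastic minimizer; NOT the FNO update rule.

    Each trial move is a Gaussian perturbation plus a momentum term built
    from the last `memory` accepted moves, weighted by |w_k| (k = 1 is the
    newest), so old moves fade by a power law rather than exponentially.
    A move is kept only if it lowers f (greedy, unsupervised acceptance).
    """
    rng = random.Random(seed)
    w = gl_coefficients(alpha, memory + 1)
    x = list(x0)
    fx = f(x)
    history = []  # accepted moves, oldest -> newest
    for _ in range(iters):
        mem = [0.0] * len(x)
        for k, dx in enumerate(reversed(history), start=1):
            for i, d in enumerate(dx):
                mem[i] += abs(w[k]) * d
        move = [m + rng.gauss(0.0, step) for m in mem]
        cand = [xi + di for xi, di in zip(x, move)]
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
            history.append(move)
            if len(history) > memory:
                history.pop(0)
    return x, fx
```

For example, `fractional_memory_search(lambda x: sum(v * v for v in x), [3.0, -2.0])` minimizes a two-dimensional sphere function. With `alpha = 1` the GL weights reduce to an ordinary backward difference (`[1, -1, 0, ...]`), whereas `0 < alpha < 1` keeps a slowly decaying influence from all earlier steps; this long memory is the property that fractional-order methods generally exploit.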

Acknowledgments

The author is most grateful to the editor and the three anonymous reviewers for their constructive and useful comments, which improved the quality of the paper. This work is supported by the Young Researchers and Elite Club, Ahar Branch, Islamic Azad University.

About this article

Cite this article

Aghababa, M.P. Fractional-Neuro-Optimizer: A Neural-Network-Based Optimization Method. Neural Process Lett 40, 169–189 (2014). https://doi.org/10.1007/s11063-013-9321-x
