Abstract

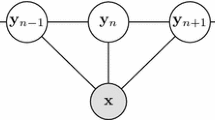

The paper introduces a dynamic extension of the hybrid random field (HRF), called dynamic HRF (D-HRF). The D-HRF is aimed at the probabilistic graphical modeling of arbitrary-length sequences of sets of (time-dependent) discrete random variables under Markov assumptions. Suitable maximum likelihood algorithms for learning the parameters and the structure of the D-HRF are presented. The D-HRF inherits the computational efficiency and the modeling capabilities of HRFs, subsuming both dynamic Bayesian networks and Markov random fields. The behavior of the D-HRF is first evaluated empirically on synthetic data drawn from probabilistic distributions having known form. Then, D-HRFs (combined with a recurrent autoencoder) are successfully applied to the prediction of the disulfide-bonding state of cysteines from the primary structure of proteins in the Protein Data Bank.

Similar content being viewed by others

Notes

Bearing in mind this sequential dynamics of the model, we will occasionally refer to the RNN as operating “over time”, such that it is fed with t-th residue at “time t”.

Available publicly at http://www.biocomp.unibo.it/savojard/PDBCYS.ssbonds.txt.

References

Baldi P, Brunak S (1998) Bioinformatics: the machine learning approach. MIT Press, Cambridge

Berman HM, Westbrook J, Feng Z, Gilliland G, Bhat TN, Weissig H, Shindyalov IN, Bourne PE (2000) The protein data bank. Nucleic Acids Res 28(1):235–242

Besag J (1975) Statistical analysis of non-lattice data. The Statistician 24:179–195

Bongini M, Laveglia V, Trentin E (2016) A hybrid recurrent neural network/dynamic probabilistic graphical model predictor of the disulfide bonding state of cysteines from the primary structure of proteins. In: Proceedings of the 7th IAPR TC 3 workshop on artificial neural networks in pattern recognition, pp 257–268. Springer

Bongini M, Trentin E (2012) Towards a novel probabilistic graphical model of sequential data: a solution to the problem of structure learning and an empirical evaluation. In: Proceedings of the 5th INNS IAPR TC 3 GIRPR workshop on artificial neural networks in pattern recognition, pp. 82–92. Springer

Cappé O, Moulines E, Ryden T (2005) Inference in hidden Markov models. Springer, New York

Ceroni A, Passerini A, Vullo A, Frasconi P (2006) DISULFIND: a disulfide bonding state and cysteine connectivity prediction server. Nucleic Acids Res 34(Web–Server–Issue):177–181

Chen Y, Lin Y, Lin C, Hwang J (2004) Prediction of the bonding states of cysteines using the support vector machines based on multiple feature vectors and cysteine state sequences. Proteins 55(4):1036–1042

Chung W, Yang C, Hor C (2009) An effective tuning method for cysteine state classification. In: Proceedings of the national computer symposium, workshop on algorithms and bioinformatics, Taipei, Taiwan, 27–28 November

Cooper GF, Herskovits E (1992) A Bayesian method for the induction of probabilistic networks from data. Mach Learn 9:309–347

Crick F (1970) Central dogma of molecular biology. Nature 227(5258):561–563

Elidan G, Gould S (2009) Learning bounded treewidth Bayesian networks. In: Koller D, Schuurmans D, Bengio Y, Bottou L (eds) Advances in neural information processing systems, vol 21. Curran Associates, Red Hook, pp 417–424

Fariselli P, Riccobelli P, Casadio R (1999) Role of evolutionary information in predicting the disulfide-bonding state of cysteine in proteins. Proteins 36(3):340–346

Frasconi P, Passerini A, Vullo A (2002) A two-stage SVM architecture for predicting the disulfide bonding state of cysteines. In: Proceedings of the IEEE workshop on neural networks for signal processing, pp 25–34

Freno A, Trentin E (2011) Hybrid random fields: a scalable approach to structure and parameter learning in probabilistic graphical models. Springer, New York

Freno A, Trentin E, Gori M (2009) A hybrid random field model for scalable statistical learning. Neural Netw 22:603–613

Freno A, Trentin E, Gori M (2009) Scalable pseudo-likelihood estimation in hybrid random fields. In: Elder J, Fogelman-Souli F, Flach P, Zaki M (eds) Proceedings of the 15th ACM SIGKDD conference on knowledge discovery and data mining (KDD 2009), pp 319–327. ACM

Freno A, Trentin E, Gori M (2009) Scalable statistical learning: a modular Bayesian/Markov network approach. In: Proceedings of the international joint conference on neural networks (IJCNN 2009), pp 890–897. IEEE

Freno A, Trentin E, Gori M (2010) Kernel-based hybrid random fields for nonparametric density estimation. In: Proceedings of the 19th European conference on artificial intelligence (ECAI), pp 427–432. IOS Press, Amsterdam, The Netherlands

Friedman N (1998) The Bayesian structural EM algorithm. In: Proceedings of the fourteenth conference on uncertainty in artificial intelligence, pp 129–138. Morgan Kaufmann, San Francisco, USA

Ghahramani Z (1998) Learning dynamic Bayesian networks. In: Adaptive processing of sequences and data structures, pp 168–197. Springer

Gilks WR, Richardson S, Spiegelhalter D (1996) Markov chain Monte Carlo in practice. CRC Press, Boca Raton

Grünwald PD (2007) The minimum description length principle. The MIT Press, Cambridge

Hertz J, Krogh A, Palmer RG (1991) Introduction to the theory of neural computation. Addison Wesley, Redwood

Kindermann R, Snell JL (1980) Markov random fields and their applications. American Mathematical Society, Providence

Koller D, Friedman N (2009) Probabilistic graphical models: principles and techniques. MIT Press, Cambridge

Lafferty J, McCallum A, Pereira F (2001) Conditional random fields: probabilistic models for segmenting and labeling sequence data. In: Proceedings of 18th international conference on machine learning, pp 282–289. Morgan Kaufmann, San Francisco, CA

Lauritzen SL (1996) Graphical models. Oxford University Press, Oxford

Lin C, Yang C, Hor C, Huang K (2010) Disulfide bonding state prediction with SVM based on protein types. In: Proceedings of the fifth IEEE international conference on bio-inspired computing: theories and applications, pp 1436–1442

Mandic DP, Chambers J (2001) Recurrent neural networks for prediction: learning algorithms, architectures and stability. Wiley, New York

Mitchell TM (1997) Machine learning. McGraw-Hill, New York

Mozer MC (1989) A focused backpropagation algorithm for temporal pattern recognition. Complex Syst 3(1):349–381

Neal R, Hinton GE (1999) A view of the EM algorithm that justifies incremental, sparse, and other variants. In: Jordan MI (ed) Learning in graphical models. MIT Press, Cambridge, pp 355–368

Neapolitan RE (2004) Learning Bayesian networks. Prentice Hall, Upper Saddle River

Pearl J (1985) Bayesian networks: a model of self-activated memory for evidential reasoning. In: Proceedings of the 7th conference of the cognitive science society, University of California, Irvine, pp 329–334

Pearl J (1988) Probabilistic reasoning in intelligent systems. Morgan Kaufmann, San Francisco

Rabiner LR (1989) A tutorial on hidden Markov models and selected applications in speech recognition. In: Proceedings of the IEEE, vol 77, no 2, pp 257–286

Rust R, Schmittlein D (1985) A Bayesian cross-validated likelihood method for comparing alternative specifications of quantitative models. Mark Sci 4(1):20–40

Savojardo C, Fariselli P, Alhamdoosh M, Martelli PL, Pierleoni A, Casadio R (2011) Improving the prediction of disulfide bonds in eukaryotes with machine learning methods and protein subcellular localization. Bioinformatics 27(16):2224–2230

Scanagatta M, de Campos CP, Corani G, Zaffalon M (2015) Learning Bayesian networks with thousands of variables. In: Cortes C, Lawrence ND, Lee DD, Sugiyama M, Garnett R (eds) Advances in neural information processing systems, vol 28. Curran Associates, Red Hook, pp 1864–1872

Scanagatta M, Corani G, de Campos CP, Zaffalon M (2016) Learning treewidth-bounded Bayesian networks with thousands of variables. In: Lee DD, Sugiyama M, Luxburg UV, Guyon I, Garnett R (eds) Advances in neural information processing systems, vol 29. Curran Associates, Red Hook, pp 1462–1470

Shoombuatong W, Traisathit P, Prasitwattanaseree S, Tayapiwatana C, Cutler RW, Chaijaruwanich J (2011) Prediction of the disulphide bonding state of cysteines in proteins using conditional random fields. IJDMB 5(4):449–464

Singh R (2008) A review of algorithmic techniques for disulfide-bond determination. Brief Funct Genomic Proteomic 7(2):157–172

Trentin E (2015) Maximum-likelihood normalization of features increases the robustness of neural-based spoken human–computer interaction. Pattern Recogn Lett 66(C):71–80

Trentin E, Bongini M (2012) Towards a novel probabilistic graphical model of sequential data: fundamental notions and a solution to the problem of parameter learning. In: Proceedings of the 5th INNS IAPR TC 3 GIRPR workshop on artificial neural networks in pattern recognition, pp 72–81. Springer

Trentin E, Cattoni R (1999) Learning perception for indoor robot navigation with a hybrid hidden Markov model/recurrent neural networks approach. Connect Sci 11(3–4):243–265

Trentin E, Giuliani D (2001) A mixture of recurrent neural networks for speaker normalisation. Neural Comput Appl 10(2):120–135

Witten IH, Frank E (2005) Data mining, 2nd edn. Morgan Kaufmann, San Francisco

Author information

Authors and Affiliations

Corresponding author

Appendices

A Formal Definition of HRF and Related Notions

The material presented in this Appendix and in the next one is drawn from [15]. HRFs are aimed at representing joint probability distributions underlying sets of random variables. The definition of HRF involves a few preliminary notions concerning directed and undirected graphs. First, let us define the concept of (directed) union of directed graphs:

Definition 1

If \(\mathcal {G}_{1} = (\mathcal {V}_{1}, \mathcal {E}_{1})\) and \(\mathcal {G}_{2} = (\mathcal {V}_{2}, \mathcal {E}_{2})\) are directed graphs, then the (directed) union of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) (denoted by \(\mathcal {G}_{1} \cup \mathcal {G}_{2}\)) is the directed graph \(\mathcal {G} = (\mathcal {V}, \mathcal {E})\) such that \(\mathcal {V} = \mathcal {V}_{1} \cup \mathcal {V}_{2}\) and \(\mathcal {E} = \mathcal {E}_{1} \cup \mathcal {E}_{2}\).

Given a set of directed graphs \(\mathcal {G}_{1}, \ldots , \mathcal {G}_{n}\), the directed union \(\mathcal {G} = \bigcup _{i=1}^{n} \mathcal {G}_{i}\) results from iterated application of the binary union operator. Similarly, we define the notion of (undirected) union for undirected graphs as follows:

Definition 2

If \(\mathcal {G}_{1} = (\mathcal {V}_{1}, \mathcal {E}_{1})\) and \(\mathcal {G}_{2} = (\mathcal {V}_{2}, \mathcal {E}_{2})\) are undirected graphs, then the (undirected) union of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) (denoted by \(\mathcal {G}_{1} \cup \mathcal {G}_{2}\)) is the undirected graph \(\mathcal {G} = (\mathcal {V}, \mathcal {E})\) such that \(\mathcal {V} = \mathcal {V}_{1} \cup \mathcal {V}_{2}\) and \(\mathcal {E} = \mathcal {E}_{1} \cup \mathcal {E}_{2}\).

Now, if \(\mathcal {G} = (\mathcal {V}, \mathcal {E})\) is a directed graph, then we say that \(\mathcal {G}^{*} = (\mathcal {V}^{*}, \mathcal {E}^{*})\) is the undirected version of \(\mathcal {G}\) if \(\mathcal {V}^{*} = \mathcal {V}\) and \(\mathcal {E}^{*} = \{\{X_{i}, X_{j}\}: (X_{i}, X_{j}) \in \mathcal {E}\}\). Thus, we also define the undirected union for a pair of directed graphs:

Definition 3

If \(\mathcal {G}_{1} = (\mathcal {V}_{1}, \mathcal {E}_{1})\) and \(\mathcal {G}_{2} = (\mathcal {V}_{2}, \mathcal {E}_{2})\) are directed graphs, then the undirected union of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) (denoted by \(\mathcal {G}_{1} \Cup \mathcal {G}_{2}\)) is the (undirected) graph \(\mathcal {G}^{*} = \mathcal {G}_{1}^{*} \cup \mathcal {G}_{2}^{*}\) such that \(\mathcal {G}_{1}^{*}\) and \(\mathcal {G}_{2}^{*}\) are the undirected versions of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) respectively.

Finally, a HRF can be defined as follows:

Definition 4

Let \(\mathbf {X}\) be a set of random variables \(X_{1}, \ldots , X_{n}\). A hybrid random field for \(X_{1}, \ldots , X_{n}\) is a set of Bayesian networks \(BN_{1}, \ldots , BN_{n}\) (with DAGs \(\mathcal {G}_{1}, \ldots , \mathcal {G}_{n}\)) such that:

-

1.

Each \(BN_{i}\) contains \(X_{i}\) plus a subset \(\mathcal {R}(X_{i})\) of \(\mathbf {X} \setminus \{X_{i}\}\);

-

2.

If \(\mathcal {MB}_{i}(X_{i})\) denotes the Markov blanket [36] of \(X_{i}\) in \(BN_{i}\) (i.e. the set of the parents, the children, and the parents of the children of \(X_{i}\) in \(\mathcal {G}_{i}\)) and \(P(X_{i} \mid \mathcal {MB}_{i}(X_{i}))\) is the conditional distribution of \(X_{i}\) given \(\mathcal {MB}_{i}(X_{i})\), as derivable from \(BN_{i}\), then at least one of the following conditions is satisfied:

-

(a)

The directed graph \(\mathcal {G} = \bigcup _{i=1}^{n} \mathcal {G}_{i}\) is acyclic and there is a Bayesian network \(h_{\mathcal {G}}\) with DAG \(\mathcal {G}\) such that, for each \(X_{i}\) in \(\mathbf {X}\), \(P(X_{i} \mid \mathcal {MB}_{i}(X_{i})) = P(X_{i} \mid \mathcal {MB}_{\mathcal {G}}(X_{i}))\), where \(\mathcal {MB}_{\mathcal {G}}(X_{i})\) is the Markov blanket of \(X_{i}\) in \(h_{\mathcal {G}}\) and \(P(X_{i} \mid \mathcal {MB}_{\mathcal {G}}(X_{i}))\) is the conditional distribution of \(X_{i}\) given \(\mathcal {MB}_{\mathcal {G}}(X_{i})\), as entailed by \(h_{\mathcal {G}}\);

-

(b)

There is a Markov random field \(h_{\mathcal {G}^{*}}\) with graph \(\mathcal {G}^{*}\) such that \(\mathcal {G}^{*} = \mathcal {G}_{1} \Cup \ldots \Cup \mathcal {G}_{n}\) and, for each \(X_{i}\) in \(\mathbf {X}\), \(P(X_{i} \mid \mathcal {MB}_{i}(X_{i})) = P(X_{i} \mid \mathcal {MB}_{\mathcal {G}^{*}}(X_{i}))\), where \(\mathcal {MB}_{\mathcal {G}^{*}}(X_{i})\) is the Markov blanket of \(X_{i}\) in \(h_{\mathcal {G}^{*}}\) and \(P(X_{i} \mid \mathcal {MB}_{\mathcal {G}^{*}}(X_{i}))\) is the conditional distribution of \(X_{i}\) given \(\mathcal {MB}_{\mathcal {G}^{*}}(X_{i})\), as derived from \(h_{\mathcal {G}^{*}}\).

-

(a)

The elements of \(\mathcal {R}(X_{i})\) are called ‘relatives of \(X_{i}\)’. That is, the relatives of a node \(X_{i}\) in a HRF are the nodes appearing in graph \(\mathcal {G}_{i}\) (except for \(X_{i}\) itself). An example of HRF over the variables \(X_1, \ldots , X_4\) is shown in Fig. 10.

The graphical components of a hybrid random field for the variables \(X_{1}, \ldots , X_{4}\). Since each node \(X_{i}\) has its own Bayesian network (where nodes in \(\mathcal {MB}_{i}(X_{i})\) are shaded), there are four different DAGs \({\mathcal {G}}_1, \ldots , {\mathcal {G}}_4\). Relatives of \(X_{i}\) that are not in \(\mathcal {MB}_{i}(X_{i})\) are dashed

Two fundamental properties of HRFs stem from the following Modularity Theorem, whose proof is given in [15].

Theorem 1

Suppose that h is a hybrid random field for the random vector \(\mathbf {X} = (X_{1}, \ldots , X_{n})\), and let h be made up by Bayesian networks \(BN_{1}, \ldots , BN_{n}\) (with DAGs \(\mathcal {G}_{1}, \ldots , \mathcal {G}_{n}\)). Then, if each conditional distribution \(P(X_{i} \mid \mathcal {MB}_{i}(X_{i}))\) is strictly positive (where \(1 \le i \le n\)), h has the following properties:

-

1.

An ordered Gibbs sampler [22] applied to h, i.e. an ordered Gibbs sampler which is applied to the conditional distributions \(P(X_{1} \mid \mathcal {MB}_{1}(X_{1})), \ldots , P(X_{n} \mid \mathcal {MB}_{n}(X_{n}))\), defines a joint probability distribution over \(\mathbf {X}\) via its (unique) stationary distribution \(P(\mathbf {X})\);

-

2.

For each variable \(X_{i}\) in \(\mathbf {X}\), \(P(\mathbf {X})\) is such that \(P(X_{i} \mid \mathbf {X} \setminus \{X_{i}\}) = P(X_{i} \mid \mathcal {MB}_{i}(X_{i}))\).

The first condition in Theorem 1 is exploited in D-HRFs in order to express the overall likelihood of the D-HRF over an input sequence in terms of local, state-specific joint probability distributions (namely, the emission probabilities) modeled via HRFs. In turn, we refer to the second condition in Theorem 1 as the modularity property. Based on the modularity property, the set \(\mathcal {MB}_{i}(X_{i})\) is a Markov blanket of \(X_{i}\) in \(\mathbf {X}\).

B Likelihood Versus Pseudo-Likelihood in HRFs

Let \({{\mathcal {X}}} = \{X_{1}, \ldots , X_{n}\}\) be any set of n discrete random variables of interest. In order to extract the joint probability \(P({{\mathcal {X}}})\) from a HRF [15], Gibbs sampling techniques need to be used [15]. Unfortunately, Gibbs sampling can be computationally intractable [22]. Therefore, in practice we can follow in the footsteps of [3] and resort to the pseudo-likelihood function, defined as :

A discussion of the theoretical properties of the pseudo-likelihood is offered by [26]. Due to the modularity property, Eq. (29) can be rewritten as:

Therefore, in order to measure the pseudo-likelihood \(P^{*}({{\mathcal {X}}})\) of the HRF given \({{\mathcal {X}}}\) we only need to be able to compute the conditional distribution of each node \(X_{i}\) given the state \(mb_{i}(X_{i})\) of \(\mathcal {MB}_{i}(X_{i})\). A discussion of how this can be done in a simple and efficient way is given in [15].

Rights and permissions

About this article

Cite this article

Bongini, M., Freno, A., Laveglia, V. et al. Dynamic Hybrid Random Fields for the Probabilistic Graphical Modeling of Sequential Data: Definitions, Algorithms, and an Application to Bioinformatics. Neural Process Lett 48, 733–768 (2018). https://doi.org/10.1007/s11063-017-9730-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11063-017-9730-3