Abstract

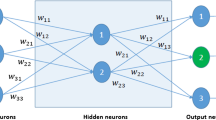

The paper introduces a robust connectionist technique for the empirical nonparametric estimation of multivariate probability density functions (pdfs) from unlabeled data samples, which is still an open issue in pattern recognition and machine learning. To this end, a soft-constrained unsupervised algorithm for training a multilayer perceptron (MLP) is proposed. A variant of the Metropolis–Hastings algorithm, which exploits the probabilistic nature of the MLP itself, is used to guarantee that the model numerically satisfies Kolmogorov’s second axiom of probability, i.e., that the estimated pdf integrates to one over the feature space. The approach overcomes the major limitations of the established statistical and connectionist pdf estimators. Graphical and quantitative experimental results show that the proposed technique can offer estimates that improve significantly over parametric and nonparametric approaches, regardless of (1) the complexity of the underlying pdf, (2) the dimensionality of the feature space, and (3) the amount of data available for training.
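To make the idea of a soft constraint concrete, the following is a minimal one-dimensional sketch and not the paper's algorithm. It assumes a criterion made of a negative mean log-likelihood plus a quadratic penalty on the deviation of the model's integral from one. The integral is estimated here with plain uniform Monte Carlo sampling over a bounded interval, standing in for the Metropolis–Hastings variant mentioned above. The function names, the penalty weight `rho`, and the integration bounds are all hypothetical choices made for illustration.

```python
import math
import random


def gaussian_pdf(x, mu=0.0, sigma=1.0):
    """Properly normalized 1-D Gaussian density (stand-in for a model output)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def mc_integral(phi, low, high, n_samples=20000, seed=0):
    """Plain Monte Carlo estimate of the integral of phi over [low, high].

    Assumption: uniform sampling replaces the paper's Metropolis-Hastings variant.
    """
    rng = random.Random(seed)
    total = sum(phi(rng.uniform(low, high)) for _ in range(n_samples))
    return (high - low) * total / n_samples


def soft_constrained_loss(phi, data, low, high, rho=10.0):
    """Negative mean log-likelihood plus a penalty rho * (1 - integral)^2.

    Assumption: rho is a hypothetical penalty weight, not a value from the paper.
    """
    nll = -sum(math.log(phi(x)) for x in data) / len(data)
    penalty = rho * (1.0 - mc_integral(phi, low, high)) ** 2
    return nll + penalty


# Usage: an unnormalized model (twice the Gaussian) gains likelihood but is
# penalized because its integral is close to 2 rather than 1.
samples = [-1.0, 0.0, 0.5, 1.2]
loss_normalized = soft_constrained_loss(gaussian_pdf, samples, -8.0, 8.0)
loss_scaled = soft_constrained_loss(lambda x: 2.0 * gaussian_pdf(x), samples, -8.0, 8.0)
```

Under these assumptions the penalty term discourages a trivial gain in likelihood obtained by inflating the model's output, which is the role a unit-integral constraint plays in density estimation.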

Notes

Section 3 covers the issue from the practical standpoint.

Or, any other statistical significance test, for that matter.

Acknowledgements

I gratefully acknowledge having been endowed with inspirational support from Alessandra in accomplishing this research.

About this article

Cite this article

Trentin, E. Soft-Constrained Neural Networks for Nonparametric Density Estimation. Neural Process Lett 48, 915–932 (2018). https://doi.org/10.1007/s11063-017-9740-1

DOI: https://doi.org/10.1007/s11063-017-9740-1