Abstract

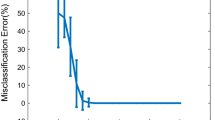

Recent systems from leading research labs, such as Facebook’s and Google’s, employ variants of the basic siamese neural network (SNN), a testament to the growing importance of SNNs in practical applications. The objective function of an SNN comprises two terms. Whereas the choice of the first term is uncontroversial, the choice of the second term raises two issues: (1) a priori boundedness from below; and (2) vanishing gradients. In this work, I therefore study four candidates for the second term, in order to investigate the roles of a priori boundedness from below and vanishing gradients in classification accuracy and, more importantly from a practical standpoint, to elucidate how different choices of second term affect the classification accuracy of SNNs. My results suggest that neither a priori boundedness nor vanishing gradients is a crisp, decisive factor governing the performance of the candidate functions. However, of the four candidates evaluated, one shows generally superior performance. I therefore recommend this candidate to the community. This recommendation gains particular importance in light of another result of this work, which indicates that choosing a wrong objective function can cause classification accuracy to dip by as much as \(17\%\).
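The two properties named in the abstract can be made concrete with a small sketch. The abstract does not state the four candidates, so the three second terms below are illustrative assumptions, not the functions evaluated in this work. The squared-distance first term, the function names, and the margin value are likewise hypothetical choices made only for this example.

```python
import math

def pair_loss(d, same_class, second_term):
    """Two-term objective for one pair, given the embedding distance d."""
    if same_class:
        return d ** 2        # first term (assumed form): pull same-class pairs together
    return second_term(d)    # second term: push different-class pairs apart

# Illustrative second terms (assumptions, not the paper's four candidates).
def neg_squared(d):
    return -d ** 2           # not bounded below: decreases without limit as d grows

def hinge_squared(d, margin=1.0):
    return max(0.0, margin - d) ** 2   # bounded below by 0; flat once d >= margin

def exp_decay(d):
    return math.exp(-d)      # bounded below by 0; slope shrinks toward 0 as d grows

def slope(f, d, h=1e-6):
    """Central finite-difference estimate of df/dd."""
    return (f(d + h) - f(d - h)) / (2 * h)

if __name__ == "__main__":
    candidates = [("neg_squared", neg_squared),
                  ("hinge_squared", hinge_squared),
                  ("exp_decay", exp_decay)]
    for name, f in candidates:
        # Slopes at a small, medium, and large inter-class distance.
        print(name, [round(slope(f, d), 4) for d in (0.5, 2.0, 10.0)])
```

In this sketch, a term that is bounded below tends to have a slope that fades at large distances, while the unbounded term keeps a large slope everywhere. That trade-off is one way to read the two issues the abstract raises, though the paper's own candidates may behave differently.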

Acknowledgements

Abdulrahman O. Ibraheem expresses utmost gratitude to God Almighty, Most Gracious and Merciful, Who has made this work a success, and Who has given him everything. Next, the author thanks his parents, who bore and nurtured him. Further, he thanks Dr. Tunji Odejobi, Dr. Safiriyu Eludiora, Dr. Luqman Akanbi, Dr. Sururah Bello and Mr. AbdulWakeel Ghazali of OAU Ile-Ife, Nigeria. The author also extends his utmost appreciation to Dr. Musodiq Bello of General Electric Healthcare, USA; Dr. Steve Lin of Microsoft Asia; and Dr. Michael Aupetit of Qatar Computing Research Institute for the aid and encouragement he received from them. Finally, he thanks Prof. Yann LeCun of New York University’s Courant Mathematical Institute for the technical advice he gave concerning this work, and for pointing the author to useful literature.

Ethics declarations

Conflict of Interest

The author declares that there are no conflicts of interest.

About this article

Cite this article

Ibraheem, A.O. On the Choice of Inter-Class Distance Maximization Term in Siamese Neural Networks. Neural Process Lett 49, 1527–1541 (2019). https://doi.org/10.1007/s11063-018-9882-9

DOI: https://doi.org/10.1007/s11063-018-9882-9