Abstract

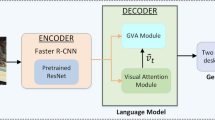

Automatically generating a caption for an image is a fundamental problem in computer vision and natural language processing: the content of the image must be translated into natural language with correct grammar and structure. Attention-based models have been widely adopted for captioning tasks. Most of them generate only a single, deterministic attention heat map at each step to indicate where to look. These models therefore ignore endogenous orienting, which depends on the interests, goals, or desires of the observer, and this constrains the diversity of the captions. To improve both the accuracy and the diversity of the generated sentences, we present a novel endogenous–exogenous attention architecture that captures both endogenous attention, which represents stochastic visual orienting, and exogenous attention, which represents deterministic visual orienting. At each time step, our model generates two attention maps, an endogenous heat map and an exogenous heat map, and fuses them into the hidden state of an LSTM for sequential word generation. We evaluate our model on the Flickr30k and MSCOCO datasets; the experiments demonstrate both the accuracy of the model and the diversity of the captions it produces. Our model achieves better performance than state-of-the-art methods.
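The two-map idea can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not give the scoring functions, the sampling scheme, or the fusion rule, so everything below is an assumption made for illustration. Exogenous attention is modelled here as ordinary soft attention (a deterministic weighted average over image regions), endogenous attention as a single region sampled from an attention distribution, and the fusion as a `tanh` of a linear map over the concatenated vectors. The names `exogenous_attention`, `endogenous_attention`, `fuse`, and all weight matrices are hypothetical.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D score vector.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def exogenous_attention(features, hidden, W):
    # Assumed deterministic (soft) attention: every region contributes,
    # weighted by its normalized bilinear score against the hidden state.
    alpha = softmax(features @ W @ hidden)      # shape (R,)
    return alpha, alpha @ features              # heat map, context (D,)

def endogenous_attention(features, hidden, W, rng):
    # Assumed stochastic attention: sample one region from the
    # attention distribution, so repeated runs can attend differently.
    probs = softmax(features @ W @ hidden)      # shape (R,)
    idx = rng.choice(len(probs), p=probs)
    beta = np.zeros_like(probs)
    beta[idx] = 1.0                             # one-hot heat map
    return beta, features[idx]                  # heat map, context (D,)

def fuse(hidden, ctx_exo, ctx_endo, W_f):
    # Hypothetical fusion rule: the paper's actual rule is not stated
    # in the abstract. The result would feed the next LSTM step.
    return np.tanh(W_f @ np.concatenate([hidden, ctx_exo, ctx_endo]))

rng = np.random.default_rng(0)
R, D, H = 6, 4, 5                               # regions, feature dim, hidden dim
features = rng.normal(size=(R, D))              # stand-in for CNN region features
hidden = rng.normal(size=H)                     # stand-in for the LSTM hidden state
W_exo = rng.normal(size=(D, H))
W_endo = rng.normal(size=(D, H))
W_f = rng.normal(size=(H, H + 2 * D))

alpha, ctx_exo = exogenous_attention(features, hidden, W_exo)
beta, ctx_endo = endogenous_attention(features, hidden, W_endo, rng)
fused = fuse(hidden, ctx_exo, ctx_endo, W_f)
```

Under these assumptions, `alpha` is the same every time it is computed from the same inputs, whereas `beta` depends on the random draw; that difference is what the deterministic versus stochastic distinction in the abstract refers to.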

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61673402, Grant 61273270, and Grant 60802069, in part by the Natural Science Foundation of Guangdong under Grant 2017A030311029 and Grant 2016B010109002, in part by the Science and Technology Program of Guangzhou under Grant 201704020180 and Grant 201604020024, and in part by the Fundamental Research Funds for the Central Universities of China.

Cite this article

Wang, T., Hu, H. & He, C. Image Caption with Endogenous–Exogenous Attention. Neural Process Lett 50, 431–443 (2019). https://doi.org/10.1007/s11063-019-09979-7

DOI: https://doi.org/10.1007/s11063-019-09979-7