Abstract

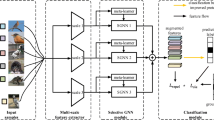

Large-scale labeled datasets are expensive and difficult to obtain, so few-shot learning (FSL), which needs only a small number of labeled samples, is a valuable alternative. Graph-based FSL approaches have recently attracted considerable attention because they model pairwise relations among samples according to feature similarity. However, real-world data usually exhibit high-order relations, which traditional graph-based methods cannot model. To address this challenge, we introduce the hypergraph structure and propose the Dual-Modal Hypergraph Few-Shot Learning (DMH-FSL) method, which models high-order relations between samples from different perspectives. Specifically, we construct a dual-modal (i.e., feature-modal and label-modal) hypergraph: the feature-modal hypergraph builds its incidence matrix from the samples' features, and the label-modal hypergraph builds its incidence matrix from the samples' labels. In addition, we employ two hypergraph convolution methods to flexibly aggregate samples from the different modalities. The proposed DMH-FSL method can be readily extended to other graph-based methods. We demonstrate the effectiveness of DMH-FSL on three benchmark datasets. In few-shot learning experiments, our algorithm improves accuracy by at least 2.62 percentage points on Stanford40 (from 72.20% to 74.82%), 0.85 points on mini-ImageNet (from 50.33% to 51.18%), and 1.61 points on USE-PPMI (from 78.77% to 80.38%). Moreover, cross-domain experiments provide some evidence of the method's adaptability in real-world applications.
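To make the two incidence matrices concrete, here is a minimal sketch that builds them for a toy episode and applies one hypergraph convolution. It is an illustration under stated assumptions, not the DMH-FSL implementation: the k-nearest-neighbour rule for feature-modal hyperedges, the one-hyperedge-per-class rule for label-modal hyperedges (with unlabeled query samples, marked `-1`, left out of the label hyperedges), fusing the modalities by concatenating their incidence matrices, and the HGNN-style layer `X' = ReLU(Dv^-1/2 H W De^-1 H^T Dv^-1/2 X Theta)` from Feng et al. (AAAI 2019) are all assumptions, since the abstract does not specify the two convolution variants. The function names `feature_incidence`, `label_incidence`, and `hypergraph_conv` are hypothetical.

```python
import numpy as np


def feature_incidence(features, k):
    """Feature-modal incidence matrix H_f (n_nodes x n_edges).

    Assumption: one hyperedge per sample, joining that sample with its
    k nearest neighbours in Euclidean feature space.
    """
    n = features.shape[0]
    sq = np.sum(features ** 2, axis=1)
    # Pairwise squared Euclidean distances.
    dist = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    # Force each sample to rank first in its own neighbour list.
    np.fill_diagonal(dist, -np.inf)
    H = np.zeros((n, n))
    for j in range(n):
        members = np.argsort(dist[j])[: k + 1]  # self + k nearest neighbours
        H[members, j] = 1.0
    return H


def label_incidence(labels, n_classes):
    """Label-modal incidence matrix H_l: one hyperedge per class.

    Assumption: unlabeled samples (label -1) join no label hyperedge.
    """
    H = np.zeros((len(labels), n_classes))
    for i, y in enumerate(labels):
        if y >= 0:
            H[i, y] = 1.0
    return H


def hypergraph_conv(X, H, Theta, edge_weights=None):
    """One HGNN-style layer: ReLU(Dv^-1/2 H W De^-1 H^T Dv^-1/2 X Theta)."""
    w = np.ones(H.shape[1]) if edge_weights is None else np.asarray(edge_weights, float)
    dv = H @ w          # vertex degrees: weighted count of incident hyperedges
    de = H.sum(axis=0)  # hyperedge degrees: number of member vertices
    # Safe inverses: isolated vertices / empty hyperedges contribute zero.
    dv_inv_sqrt = np.divide(1.0, np.sqrt(dv), out=np.zeros_like(dv), where=dv > 0)
    de_inv = np.divide(1.0, de, out=np.zeros_like(de), where=de > 0)
    # Build the propagation matrix with broadcasting instead of diagonal matrices.
    A = dv_inv_sqrt[:, None] * H * (w * de_inv)[None, :]
    G = (A @ H.T) * dv_inv_sqrt[None, :]
    return np.maximum(G @ X @ Theta, 0.0)


# Hypothetical 3-way 2-shot episode: 6 labeled support samples + 3 queries.
rng = np.random.default_rng(0)
X = rng.normal(size=(9, 8))
labels = np.array([0, 0, 1, 1, 2, 2, -1, -1, -1])
H_f = feature_incidence(X, k=2)
H_l = label_incidence(labels, n_classes=3)
H = np.concatenate([H_f, H_l], axis=1)  # assumed fusion: stack hyperedge groups
Theta = rng.normal(size=(8, 4))
out = hypergraph_conv(X, H, Theta)
print(out.shape)  # → (9, 4)
```

Concatenating the incidence matrices keeps query samples connected through their feature-modal hyperedges even though they belong to no label hyperedge; a method that convolves each modality separately would need its own rule for unlabeled nodes.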

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61671480), the Open Project Program of the National Laboratory of Pattern Recognition (NLPR) (Grant No. 202000009), the Major Scientific and Technological Projects of CNPC (Grant No. ZD2019-183-008), and the Graduate Innovation Project of China University of Petroleum (East China) (Grant No. YCX2021123).

About this article

Cite this article

Xu, R., Liu, B., Lu, X. et al. DMH-FSL: Dual-Modal Hypergraph for Few-Shot Learning. Neural Process Lett 54, 1317–1332 (2022). https://doi.org/10.1007/s11063-021-10684-7

DOI: https://doi.org/10.1007/s11063-021-10684-7