Abstract

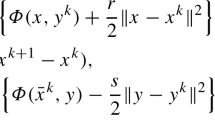

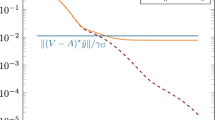

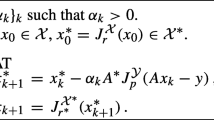

The primal–dual hybrid gradient (PDHG) method has been widely used for solving saddle point problems arising in image processing. In particular, PDHG can be used to solve convex problems with linear constraints. Recently, it was shown that, without further assumptions, the original PDHG may fail to converge. In this paper, we modify the original PDHG to obtain a convergent method. The method works in a prediction–correction fashion: the predictor is generated by PDHG, and the correction is completed by two minor computations. Our method requires the step size parameters to satisfy \(rs>\frac{1}{4}\|A^{T}A\|\), which is less restrictive than the condition \(rs>\|A^{T}A\|\) required by some existing PDHG variants, and hence allows for larger step sizes. We prove global convergence and establish an \(O(1/t)\) nonergodic convergence rate for the method, where \(t\) denotes the iteration number. Numerical results show that our method, with its larger step sizes, needs fewer iterations than existing efficient methods to achieve the same accuracy.
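The abstract does not spell out the two correction computations, so the sketch below illustrates only the parts it does state: the step-size condition and an original-PDHG predictor step. It assumes the linearly constrained model \(\min\ \theta(x)\ \text{s.t.}\ Ax=b\) with Lagrangian \(\theta(x)-\lambda^{T}(Ax-b)\), treats \(r\) and \(s\) as the proximal parameters of the \(x\)- and \(\lambda\)-subproblems, and takes \(\theta(x)=\|x\|_1\) purely as an example. The function names `step_size_conditions`, `soft_threshold`, and `pdhg_predictor` are hypothetical; this is not the authors' implementation.

```python
import numpy as np


def step_size_conditions(A, r, s):
    """Check r*s against ||A^T A|| (spectral norm) under two conditions."""
    # ||A^T A||_2 is the largest singular value of A^T A, i.e. sigma_max(A)^2.
    norm_ata = np.linalg.norm(A.T @ A, 2)
    return {
        "relaxed": bool(r * s > 0.25 * norm_ata),  # rs > (1/4)||A^T A|| (stated in the abstract)
        "classical": bool(r * s > norm_ata),       # rs > ||A^T A|| (some earlier PDHG variants)
    }


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (componentwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def pdhg_predictor(x, lam, A, b, r, s):
    """One original-PDHG step for the assumed example min ||x||_1 s.t. Ax = b.

    x-step:   argmin_x ||x||_1 - lam^T (A x - b) + (r/2) ||x - x_k||^2
    lam-step: argmax_l -l^T (A x_new - b) - (s/2) ||l - lam_k||^2
    The paper's correction step is NOT reproduced here; plain PDHG alone
    is not guaranteed to converge.
    """
    x_new = soft_threshold(x + A.T @ lam / r, 1.0 / r)
    lam_new = lam - (A @ x_new - b) / s
    return x_new, lam_new


# Usage: A = 2I gives ||A^T A|| = 4, so rs = 1.5 meets only the relaxed condition.
print(step_size_conditions(2.0 * np.eye(2), r=1.0, s=1.5))
# -> {'relaxed': True, 'classical': False}

# One predictor step with A = I, b = (1, 0), x0 = 0, lam0 = (3, 0.5), r = s = 1.
x1, lam1 = pdhg_predictor(np.zeros(2), np.array([3.0, 0.5]),
                          np.eye(2), np.array([1.0, 0.0]), r=1.0, s=1.0)
# x1 = (2, 0), lam1 = (2, 0.5)
```

Choosing \(\theta=\|\cdot\|_1\) keeps the \(x\)-subproblem in closed form (soft thresholding); any \(\theta\) with an inexpensive proximal operator could be substituted.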

Acknowledgements

The authors would like to thank the anonymous referee for providing insightful comments and constructive suggestions, which helped us significantly improve the presentation of the manuscript.

Funding

The work is supported in part by the NSFC grant 11701564.

About this article

Cite this article

Ma, F., Bi, Y. & Gao, B. A prediction–correction-based primal–dual hybrid gradient method for linearly constrained convex minimization. Numer Algor 82, 641–662 (2019). https://doi.org/10.1007/s11075-018-0618-8

DOI: https://doi.org/10.1007/s11075-018-0618-8