Appendix A: Hermite polynomials and derivation of equations

The probabilistic Hermite polynomials as described in Nualart [16] are defined as:

$$\begin{aligned} h_n(x) = \frac{1}{\varphi (x)}\cdot \left(-\frac{d}{d x} \right)^n \varphi (x). \end{aligned}$$

(7.1)

The first four Hermite polynomials are

$$\begin{aligned} h_0(x) = 1,\quad h_1(x) = x, \quad h_2(x) = x^2 - 1,\quad h_3(x) = x^3 - 3x, \end{aligned}$$

(7.2)

and in general they solve the recurrence relation

$$\begin{aligned} h_{n+1}(x)=x \cdot h_n(x) - n\cdot h_{n-1}(x). \end{aligned}$$

(7.3)

We have the following Hermite polynomial generalization of Stein’s lemma.

Theorem 7.1

If \(X\) is a standard Gaussian random variable, then

$$\begin{aligned} E\left[f(X) \cdot h_n(X)\right] = E\left[\frac{d^n\ }{dX^n}f(X)\right] \end{aligned}$$

where \(f\) is any generalized function.

This follows from induction and integration by parts, since the Gaussian density is smooth (infinitely differentiable). From this result follows the orthogonality property of Hermite polynomials, namely

$$\begin{aligned} E\left[h_n(X) \cdot h_m(X)\right] = {\left\{ \begin{array}{ll} m!&\quad \text{ if} n \text{=} m , \\ 0&\quad \text{ if} n \ne m. \end{array}\right.} \end{aligned}$$

This follows from the fact that \(m\) derivatives of a degree \(n\) polynomial is always zero when \(m>n\). Moreover, we get \(m!\) when \(m=n\) since \(h_m(x)\) is always a monic polynomial. Since \(h_0(X) = 1\), it now follows that all random variables of the form \(h_n(X)\) have expectation zero, when \( n \ge 1\).

If \(f(X)\) is square integrable, then it can be written as an infinite sum of Hermite polynomials of \(X\), i.e.,

$$\begin{aligned} f(X)&= \sum ^{\infty }_{n=0} \frac{1}{n!} E\left[\frac{d^n\ }{dX^n}f(X)\right] \cdot h_n(X), \end{aligned}$$

where the convergence is with respect to the mean square (or \(L^2\)) norm. From this orthogonal series expansion, it follows that

$$\begin{aligned} E\left[f(X) \cdot g(X)\right]&= \sum ^{\infty }_{n=0} \frac{1}{n!} \cdot E\left[\frac{d^n\ }{dX^n}f(X)\right] \cdot E\left[\frac{d^n }{dX^n}g(X)\right] \\ \end{aligned}$$

or equivalently

$$\begin{aligned} \text{ Cov}\left[f(X) , g(X)\right]&= \sum ^{\infty }_{n=1} \frac{1}{n!} \cdot E\left[\frac{d^n\ }{dX^n}f(X)\right] \cdot E\left[\frac{d^n\ }{dX^n}g(X)\right]. \\ \end{aligned}$$

Proof of Theorem 4.1:

This proof follows from the forward equations for the first, second, and third cumulant moments or Eq. 2.2 and our assumption that \(\mathcal Q \! =\! q \!+\! \sqrt{v} \cdot Y_\theta \). We show it for two of the terms. All remaining expressions are derived similarly.

$$\begin{aligned} E\left[ (\mathcal Q - c)^+ \right] = E\left[ (Y_\theta - \chi )^+ \right]\cdot \sqrt{v}. \end{aligned}$$

(7.4)

Using the property that the covariance of any random variable with a constant is zero, then we have

$$\begin{aligned} \text{ Cov}\left[\mathcal Q ,(\mathcal Q - c)^+ \right] =\text{ Cov}\left[Y_\theta , (Y_\theta - \chi )^+ \right] \cdot {v}. \end{aligned}$$

(7.5)

\(\square \)

Recall that for our GSA algorithm, we need to find simple expressions for terms like

$$\begin{aligned} \text{ Cov}\left[ {Y_\theta ,\left( {Y_\theta - \chi } \right)^ + } \right] \text{ and} \text{ Cov}\left[ {Y_\theta ^2 ,\left( {Y_\theta - \chi } \right)^ + } \right]. \end{aligned}$$

Observing that \( \left( {Y_\theta - \chi } \right)^ + = \left( {Y_\theta - \chi } \right) \cdot \{ Y_\theta \ge \chi \}\), motivates the next two lemmas.

Lemma 7.2

For all \( n\ge 1 \), we have

$$\begin{aligned} E\left[ {\frac{{d^n \,}}{{dX^n }}\left\{ {Y_\theta \ge \chi } \right\} } \right]&= h_{n - 1} (z_ + ) \cdot \varphi (z_ + ) - h_{n - 1} (z_ - ) \cdot \varphi (z_ - ) \\ \end{aligned}$$

Proof

We use the identity \( \left\{ {Y_\theta \ge \chi } \right\} = \overline{\left\{ {z_ - \le X \le z_ + } \right\} } \) , where we use the overline of an event to denote its complement. \(\square \)

Lemma 7.3

For all \( n\ge 3 \), we have

$$\begin{aligned}&E\left[ {\frac{d^{n}}{dX^{n}}\left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad = \cos \theta \cdot \left( {h_{n - 2} (z_ + ) \cdot \varphi (z_ + ) - h_{n - 2} (z_ - ) \cdot \varphi (z_ - )} \right) \\&\quad \quad + \sqrt{2} \cdot \sin \theta \cdot \left( \left( {z_ + \cdot h_{n - 2} (z_ + ) + h_{n - 3} (z_ + )} \right) \cdot \varphi (z_ + )\right.\\&\qquad \left. - \left( {z_ - \cdot h_{n - 2} (z_ - ) + h_{n - 3} (z_ - )} \right) \cdot \varphi (z_ - ) \right). \end{aligned}$$

with

$$\begin{aligned}&E\left[ {\frac{d^{2}}{dX^{2}} \left( {Y_\theta - \chi } \right)^ + } \right]\\&\quad =\! \cos \theta \cdot \left( {\varphi (z_ + ) - \varphi (z_ - )} \right) \!+\! \sqrt{2} \cdot \sin \theta \cdot \left( {\left( {z_ + \cdot \varphi (z_ + ) {-} z_ {-} \cdot \varphi (z_ - )} \right) + P\left\{ {Y_\theta \ge \chi } \right\} } \right). \end{aligned}$$

and

$$\begin{aligned} E\left[ {\frac{{d\;\,}}{{dX}}\left( {Y_\theta - \chi } \right)^ + } \right] = \cos \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \sqrt{2} \cdot \sin \theta \cdot \left( {\varphi (z_ + ) - \varphi (z_ - )} \right).\qquad \end{aligned}$$

(7.6)

Proof

We prove the first equality to establish the idea and omit the proof of the latter two terms as they are proved in an identical fashion. Define \(f(z_{\pm }) = f(z_+) - f(z_-)\) and \(\zeta (z)\equiv z\). We then have

$$\begin{aligned}&E\left[ {\frac{d^{n}}{dX^{n}}\left( {Y_\theta - \chi } \right)^ + } \right]\nonumber \\&\quad = E\left[ {h_{n - 1} (X) \cdot \frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right] \nonumber \\&\quad = E\left[ {h_{n - 1} (X) \cdot \left( {Y_\theta - \chi } \right) \cdot \frac{d}{dX}\left\{ {Y_\theta \ge \chi } \right\} } \right]\nonumber \\&\qquad + E\left[ {h_{n - 1} (X) \cdot \left\{ {Y_\theta \ge \chi } \right\} \cdot \frac{d}{dX} \left( {Y_\theta - \chi } \right)} \right]\nonumber \\&\quad = E\left[ {h_{n - 1} (X) \cdot \left( {Y_\theta - \chi } \right) \cdot \delta _{z \pm } (X)} \right] + E\left[ {h_{n - 1} (X) \cdot \left\{ {Y_\theta \ge \chi } \right\} \cdot Y^{\prime }_\theta } \right] \nonumber \\&\quad =\cos \theta \! \cdot \! E\left[ {h_{n - 1} (X) \cdot \left\{ {Y_\theta \!\ge \! \chi } \right\} } \right] \!+\! \sqrt{2} \cdot \sin \theta \cdot E\left[ {h_{n - 1} (X) \!\cdot \! X \!\cdot \! \left\{ {Y_\theta \ge \chi } \right\} } \right] \end{aligned}$$

(7.7)

$$\begin{aligned}&\quad =\cos \theta \cdot E\left[ {\frac{d^{n - 1} }{dX^{n - 1}} \cdot \left\{ {Y_\theta \ge \chi } \right\} } \right] + \sqrt{2} \cdot \sin \theta \cdot E\left[ {\frac{d^{n - 1}}{dX^{n - 1}} \cdot X \cdot \left\{ {Y_\theta \ge \chi } \right\} } \right] \nonumber \\&\quad =\cos \theta \cdot E\left[ {\left( {h_{n - 2} \cdot \delta _{z \pm } } \right)(X)} \right]\nonumber \\&\quad \quad + \sqrt{2} \cdot \sin \theta \cdot \left( {E\left[ {X \cdot \frac{d^{n - 1}}{dX^{n - 1}} \left\{ {Y_\theta \ge \chi } \right\} } \right] + (n - 1) \cdot E\left[ {\frac{d^{n - 2}}{dX^{n - 2}} \left\{ {Y_\theta \ge \chi } \right\} } \right]} \right) \nonumber \\&\quad = \cos \theta \cdot E\left[ {\left( {h_{n - 2} \cdot \delta _{z \pm } } \right)(X)} \right]\nonumber \\&\quad \quad + \sqrt{2} \cdot \sin \theta \cdot \left( {E\left[ {\left( {h_{n - 1} \cdot \delta _{z \pm } } \right)(X)} \right] + (n - 1) \cdot E\left[ {\left( {h_{n - 3} \cdot \delta _{z \pm } } \right)(X)} \right]} \right)\nonumber \\&\quad = \cos \theta \cdot E\left[ {\left( {h_{n - 2} \cdot \delta _{z \pm } } \right)(X)} \right]\nonumber \\&\quad \quad +\sqrt{2} \cdot \sin \theta \cdot \left( E\left[ {\left( {\left( {\zeta \cdot h_{n - 2} - (n - 2) \cdot h_{n - 3} } \right) \cdot \delta _{z \pm } } \right)(X)} \right]\right.\nonumber \\&\qquad \left.+ (n - 1) \cdot E\left[ {\left( {h_{n - 3} \cdot \delta _{z \pm } } \right)(X)} \right] \right) = \cos \theta \cdot E\left[ {\left( {h_{n - 2} \cdot \delta _{z \pm } } \right)(X)} \right]\nonumber \\&\qquad + \sqrt{2} \cdot \sin \theta \cdot E\left[ {\left( {\left( {\zeta \cdot h_{n - 2} + h_{n - 3} } \right) \cdot \delta _{z \pm } } \right)(X)} \right]. \end{aligned}$$

(7.8)

\(\square \)

Now that we have established our main lemmas, we use them to obtain the following simple expressions for the covariance terms that are used in the forward equations for the mean, variance, and skewness of the GSA.

Proof of Theorem 4.3:

$$\begin{aligned} \text{ Cov}\left[ {Y_\theta ,\left( {Y_\theta - \chi } \right)^ + } \right]&= E\left[ {\frac{d}{dX}Y_\theta } \right] \cdot E\left[ {\frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right]\\&+ \frac{1}{2} \cdot E\left[ {\frac{d^{2}}{dX^{2}}Y_\theta } \right] \cdot E\left[ {\frac{d^{2}}{dX^{2}}\left( {Y_\theta - \chi } \right)^ + } \right] \\&= \cos \theta \cdot E\left[ {\frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right]\\&+ \frac{1}{2} \cdot \sqrt{2} \cdot \sin \theta \cdot E\left[ {\frac{d^{2}}{dX^{2}}\left( {Y_\theta - \chi } \right)^ + } \right] \\&= \cos \theta \cdot \left( {\cos \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \sqrt{2} \cdot \sin \theta \cdot \varphi (z_ \pm )} \right) \\&+ \frac{\sin \theta }{\sqrt{2}} \cdot \left( \cos \theta \cdot \varphi (z_ \pm ) + \sqrt{2} \cdot \sin \theta \cdot ( ( {\zeta \cdot \varphi } )(z_ \pm )\right. \\&\left. + P\{ {Y_\theta \ge \chi } \} ) \right) \\&= P\left\{ {Y_\theta \ge \chi } \right\} + \frac{3\sqrt{2}}{2} \cdot \sin \theta \cdot \cos \theta \cdot \varphi (z_ \pm ) \\&+ \sin ^2 \theta \cdot \left( {\zeta \cdot \varphi } \right)(z_ \pm ). \end{aligned}$$

For the next set of calculations, we define

$$\begin{aligned} Y_{\theta }^{\prime } \equiv \frac{d}{dX}Y_{\theta } \text{ and} Y_{\theta }^{\prime \prime } \equiv \frac{d^2}{dX^2} Y_{\theta } \end{aligned}$$

(7.9)

and use them when convenient. Moreover, for the second covariance term, we have

$$\begin{aligned}&{\text{ Cov}}\left[ {Y_\theta ^2 ,\left( {Y_\theta - \chi } \right)^ + } \right]\\&\quad = E\left[ {\frac{d}{dX}Y_\theta ^2 } \right] \cdot E\left[ {\frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right] + \frac{1}{2} \cdot E\left[ {\frac{d^2}{dX^2}Y_\theta ^2 } \right] \cdot E\left[ {\frac{d^2}{dX^2}\left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad \quad + \frac{1}{6} \cdot E\left[ {\frac{d^{3}}{dX^{3}}Y_\theta ^2 } \right] \cdot E\left[ {\frac{d^{3}}{dX^{3}}\left( {Y_\theta - \chi } \right)^ + } \right]\\&\qquad + \frac{1}{24} \cdot E\left[ {\frac{d^{4}}{dX^{4}}Y_\theta ^2 } \right] \cdot E\left[ {\frac{d^{4}}{dX^{4}}\left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad = 2\sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right]\\&\qquad + \frac{1}{2} \cdot 2\left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\frac{d^{2}}{dX^{2}}\left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad \quad + \frac{1}{6} \cdot 6\sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\frac{d^{3}}{dX^{3}}\left( {Y_\theta - \chi } \right)^ + } \right]\\&\qquad + \frac{1}{24} \cdot 12\sin ^2 \theta \cdot E\left[ {\frac{d^{4}}{dX^{4}} \left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad = 2\sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right]\\&\qquad + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {h_1 (X) \cdot \frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad \quad + \sqrt{2} \sin \theta \cos \theta \cdot E\left[ {h_2 (X) \cdot \frac{d}{dX} \left( {Y_\theta - \chi } \right)^ + } \right]\\&\qquad + \frac{\sin ^2 \theta }{2} \cdot E\left[ {h_3 (X) \cdot \frac{d}{dX}\left( {Y_\theta - \chi } \right)^ + } \right] \\&\quad = 2\sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\left\{ {Y_\theta \ge \chi } \right\} \cdot Y^{\prime }_\theta } \right] {+} \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {h_1 (X) \cdot \left\{ {Y_\theta {\ge } \chi } \right\} \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + \sqrt{2} \sin \theta \cos \theta \cdot E\left[ {h_2 (X) \cdot \left\{ {Y_\theta \!\ge \! \chi } \right\} \cdot Y^{\prime }_\theta } \right] \!+\! \frac{\sin ^2 \theta }{2} \cdot E\left[ {h_3 (X) \cdot \left\{ {Y_\theta \!\ge \! \chi } \right\} \cdot Y^{\prime }_\theta } \right]\\&\quad = 2\sqrt{2} \sin \theta \cos ^2 \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} \!+\! \left( {1 \!+\! \sin ^2 \theta } \right) \cdot E\left[ {\delta _{z \pm } (X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 4\sin ^2 \theta \cos \theta \cdot E\left[ {\left\{ {Y_\theta \ge \chi } \right\} \cdot X} \right] + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\left\{ {Y_\theta \ge \chi } \right\} \cdot Y^{\prime \prime }_\theta } \right] \\&\quad \quad + \sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^2 \theta }{2} \cdot E\left[ {\left( {h_2 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + \sqrt{2} \sin \theta \cos \theta {\cdot } E\left[ {h_1 (X) {\cdot } \left\{ {Y_\theta \ge \chi } \right\} \cdot Y^{\prime \prime }_\theta } \right] {+}\, \frac{\sin ^2 \theta }{2} \cdot E\left[ {h_2 (X) \cdot \left\{ {Y_\theta \!\ge \! \chi } \right\} \cdot Y^{\prime \prime }_\theta } \right] \\&\quad = 2\sqrt{2} \sin \theta \cos ^2 \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\delta _{z \pm } (X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 4\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] + \sqrt{2} \sin \theta \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\left\{ {Y_\theta \ge \chi } \right\} } \right] \\&\quad \quad + \sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^2 \theta }{2} \cdot E\left[ {\left( {h_2 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 2\sin ^2 \theta \cos \theta \cdot E\left[ {h_1 (X) \cdot \left\{ {Y_\theta \ge \chi } \right\} } \right] + \frac{\sin ^2 \theta }{2}\sqrt{2} \sin \theta \cdot E\left[ {h_2 (X) \cdot \left\{ {Y_\theta \ge \chi } \right\} } \right]\\&\quad = 2\sqrt{2} \sin \theta \cos ^2 \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\delta _{z \pm } (X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 4\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] + \sqrt{2} \sin \theta \left( {1 + \sin ^2 \theta } \right) \cdot P\left\{ {Y_\theta \ge \chi } \right\} \\&\quad \quad + \sqrt{2} \sin \theta \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^{2} \theta }{2} \cdot E\left[ {\left( {h_2 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 2\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] + \frac{\sin ^{2} \theta }{2}\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \end{aligned}$$

Finally, we have

$$\begin{aligned}&{\text{ Cov}}\left[ {Y_\theta ^2 ,\left( {Y_\theta - \chi } \right)^ + } \right]\\&\quad = \left( {2\sqrt{2} \sin \theta - 2\sqrt{2} \sin ^3 \theta + \sqrt{2} \sin \theta + \sqrt{2} \sin ^3 \theta } \right) \cdot P\left\{ {Y_\theta \ge \chi } \right\} \\&\quad \quad + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\delta _{z \pm } (X) \cdot Y^{\prime }_\theta } \right] + 6\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] \\&\quad \quad + \frac{\sqrt{2}}{2}\sin \theta \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^2 \theta }{2} \cdot E\left[ {\left( {h_2 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + \frac{\sqrt{2}}{2}\sin \theta \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^{2} \theta }{2}\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad = \left( {3 - \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\delta _{z \pm } (X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 6\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right]\\&\qquad + \frac{\sin \theta }{\sqrt{2}} \cdot E\left[ {\left( {\left( {\cos \theta \cdot h_1 + \frac{\sin \theta }{\sqrt{2}} \cdot h_2 } \right) \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + \frac{\sqrt{2}}{2}\sin \theta \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^{2} \theta }{2}\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad = \left( {3 - \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \left( {1 + \sin ^2 \theta } \right) \cdot E\left[ {\delta _{z \pm } (X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + 6\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] + \frac{\sin \theta }{\sqrt{2}} \cdot E\left[ {\left( {\chi \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] \\&\quad \quad + \frac{\sin \theta }{\sqrt{2}} \cdot \cos \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X) \cdot Y^{\prime }_\theta } \right] + \frac{\sin ^{3} \theta }{\sqrt{2} } \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad = \left( {3 - \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} + \left( {1 + \sin ^2 \theta } \right)\cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] \\&\quad \quad + \left( {1 + \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] + 6\sin ^2 \theta \cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] \\&\quad \quad + \frac{\sin \theta }{\sqrt{2} }\cos \theta \cdot E\left[ {\left( {\chi \cdot \delta _{z \pm } } \right)(X)} \right] + \chi \cdot \frac{\sin \theta }{\sqrt{2} }\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad \quad + \frac{\sin \theta }{\sqrt{2} } \cdot \cos ^2 \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\qquad + \frac{\sin \theta }{\sqrt{2}} \cdot \cos \theta \cdot \sqrt{2} \sin \theta \cdot E\left[ {\left( {\left( {h_2 + 1} \right) \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad \quad + \frac{\sin ^3 \theta }{\sqrt{2}} \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad = \left( {3 - \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} \\&\qquad + \left( {1 + \chi \cdot \frac{\sin \theta }{\sqrt{2}} + 8\sin ^2 \theta } \right)\cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] \\&\quad \quad + \left( {\frac{3}{2} + \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right]\\&\qquad + \chi \cdot \frac{\sin \theta }{\sqrt{2}}\sqrt{2} \sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad \quad + \cos \theta \sin \theta \sqrt{2} \cdot E\left[ {\left( {\left( {\chi - \cos \theta \cdot h_1 } \right) \cdot \delta _{z \pm } } \right)(X)} \right] \\&\quad = \left( {3 - \sin ^2 \theta } \right)\sqrt{2} \sin \theta \cdot P\left\{ {Y_\theta \ge \chi } \right\} \\&\qquad + \left( {1 + \frac{3\sqrt{2}}{2} \cdot \chi \sin \theta + 8\sin ^2 \theta } \right)\cos \theta \cdot E\left[ {\delta _{z \pm } (X)} \right] \\&\quad \quad + \left( {\frac{\sqrt{2}}{2} + \chi \cdot \sin \theta + 2\sqrt{2} \sin ^2 \theta } \right)\sin \theta \cdot E\left[ {\left( {h_1 \cdot \delta _{z \pm } } \right)(X)} \right]. \end{aligned}$$

\(\square \)

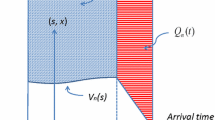

Appendix B: Monte Carlo queueing simulation

To simulate the mean of our queueing process, we implement an algorithm similar to the one illustrated below for a Monte Carlo simulation of the transient mean for an \(M/M/c\) queue. As shown in Fig. 14, the inner loop is for each i.i.d. Markov sample path labeled by “run.” In theory, we are summing up all the sample path realizations of the number in the queue at a fixed “time.” In practice, however, the sum of the previous instance, “time” minus “tick,” is known. Determining which “next event time” for a given “run” exceeds the current “time” is now a “run” whose queue must be updated. Applying this same update of a customer arrival or departure to the total sum, or “queue run sum,” updates the total sum. After we are done with all the “total runs,” we then move forward in time by a small amount “tick.” Care must be taken to make sure that the size tick is smaller than any of the average holding times for any state.

Using this approach, we do not have to store the discretized version of each sample path. For our numerical example, we would have to store 10,000 vectors of dimension 40,000. The latter number is the length of the time interval 40.0 divided by \(\Delta t = 10^{-3}\). We only need to store a single vector of this type which corresponds to the sample mean of the number in the queue as it evolves over time.

Figure 15 illustrates what is going on inside the “Update” subroutine in more detail. At state \(n\), the holding time for our \(M/M/c\) queue has an exponential distribution with rate \(\lambda + \mu *\min (n,c)\). The next transition state is \(n+1\) (or using the programming language of C, \(n\)++, see Kernighan and Richie for details [9]) with probability \(\lambda \) divided by the holding time rate. Otherwise, the next transition state is \(n-1\) (or \(n\)-- when programming in C), if this is possible, or nothing happens. We refer to the state as “queue state[run]” and the holding time rate as “event rate[run].”

Using a pseudo random number generator, we simulate a random variable \(U\) that is uniformly distributed on the interval \((0,1]\) (see Ross for more details [19]). The random event of the holding time rate times \(U\) being less than \(\lambda \) has the desired probability of \(\lambda \) divided by the holding time rate. When this event occurs, we then execute the arrival simulation as “queue state[run]++” and “queue run sum++”. Now that we are in our new state, we compute its holding time rate. If we generate another uniform random variable \(V\), then \(-\log V\) divided by the next holding time rate gives us an exponentially distributed random variable with the holding rate, again see Ross [19]. Adding this to the current time gives us the time of the next event or updates “next event time[run].”

Finally, to simulate this model with a time varying arrival rate function, according to a Poisson thinning method as found in Ross [19], two things need to be done. First, change the previous use of \(\lambda \) to the maximum possible value for the arrival rate over the given, finite time interval. Second, after a positive test for the product of \(U\) times the holding rate being less than the maximum arrival rate occurs, now test for almost the same event except that the maximum possible arrival rate is replaced by the arrival rate that happens at the current “time.” If this is also true, then the arriving customer event is still valid. Otherwise, the arrival event does not happen.

For this given “time,” the average of value for “mean queue” is “queue run sum” divided by “total runs.” The confidence interval for simulating the mean is plus or minus 3 times the square root of the ratio for the simulation of the variance divided by the number of “total runs.”