Abstract

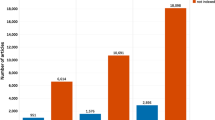

This paper correlates the peer evaluations performed in late 2009 by the disciplinary committees of CNPq (a Brazilian funding agency) with standard bibliometric measures for 55 scientific areas. The peer evaluations considered are the committees' decisions to increase, maintain, or decrease a scientist’s research scholarship funded by CNPq. We analyzed these decisions for 2,663 Brazilian scientists and computed their Spearman rho correlations with 21 measures, among them total production, production in the last 5 years, production indexed in Web of Science (WOS) and Scopus, total citations received (according to WOS, Scopus, and Google Scholar), and the h-index and m-quotient (according to the same three citation services). The highest correlation in each area ranges from 0.29 to 0.95, although in some areas no metric shows a significantly positive correlation.
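To make the measures named above concrete, the following is a minimal sketch rather than the procedure actually used in the paper. It computes an h-index and an m-quotient from a list of per-paper citation counts, then a Spearman rho between peer decisions and a metric. The coding of decisions as -1 (decrease), 0 (maintain), and 1 (increase), the function names, and the sample data are all assumptions made for illustration.

```python
from statistics import mean


def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def m_quotient(citations, career_years):
    """h-index divided by the years since the first publication.

    `career_years` is supplied by the caller (an assumption of this sketch).
    """
    return h_index(citations) / career_years


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Pearson correlation of the tie-averaged ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one variable is constant
    return cov / (var_x * var_y) ** 0.5


# Hypothetical data: one decision and one citation list per scientist.
decisions = [-1, 0, 0, 1, 1, -1]
citation_lists = [[3, 1], [9, 7, 4, 2], [6, 5, 5], [20, 15, 9, 8, 6], [12, 10, 4, 4], [2]]
h_values = [h_index(c) for c in citation_lists]
print(spearman_rho(decisions, h_values))
```

Because the decisions take only three values, ties are unavoidable, which is why the sketch averages ranks over ties instead of using the shortcut formula based on squared rank differences.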

Notes

There is another evaluation system sponsored by CAPES for graduate programs.

Cite this article

Wainer, J., Vieira, P. Correlations between bibliometrics and peer evaluation for all disciplines: the evaluation of Brazilian scientists. Scientometrics 96, 395–410 (2013). https://doi.org/10.1007/s11192-013-0969-9

DOI: https://doi.org/10.1007/s11192-013-0969-9