Abstract

A reduction in the quality of a reviewer’s recommendation may be caused by time constraints or by cognitive overload arising from redundancy, contradiction and inconsistency in the research. Reviewers who face information overload may adapt by chunking individual pieces of manuscript information into generic terms, unsystematically omitting research details, queuing information processing, or stopping the manuscript evaluation prematurely. How, then, should a reviewer optimize her attention to the positive and negative attributes of a manuscript before making a recommendation? And how do a reviewer’s characteristics, such as her prior belief about the manuscript quality and her manuscript evaluation cost, affect her attention allocation and final recommendation? To answer these questions, we use a probabilistic model in which a reviewer chooses the optimal evaluation strategy by trading off the value and cost of review information about the manuscript quality. We find that a reviewer could exhibit confirmatory behavior, paying more attention to the type of manuscript attributes that favor her prior belief about the manuscript quality. Confirmatory bias could thus be an optimal behavior for reviewers who optimize their attention to positive and negative manuscript attributes under information overload. We also show that the reviewer’s manuscript evaluation cost plays a key role in determining whether she exhibits confirmatory bias. Moreover, when the reviewer’s prior belief about the manuscript quality is low enough, the probability of obtaining a positive review signal decreases with her manuscript evaluation cost once that cost is sufficiently high.

References

Burnham, J. C. (1990). The evolution of editorial peer review. JAMA, 263(10), 1323–1329.

Chubin, D. E., & Hackett, E. J. (1990). Peerless science: Peer review and US science policy. New York: State University of New York Press.

Cover, T. M., & Thomas, J. A. (2006). Elements of information theory. Hoboken, NJ: Wiley.

Garcia, J. A., Rodriguez-Sánchez, R., & Fdez-Valdivia, J. (2016). Authors and reviewers who suffer from confirmatory bias. Scientometrics, 109(2), 1377–1395. https://doi.org/10.1007/s11192-016-2079-y.

Garcia, J. A., Rodriguez-Sánchez, R., & Fdez-Valdivia, J. (2019). The optimal amount of information to provide in an academic manuscript. Scientometrics, 121(3), 1685–1705. https://doi.org/10.1007/s11192-019-03270-1.

Griffin, D., & Tversky, A. (1992). The weighing of evidence and the determinants of confidence. Cognitive Psychology, 24(3), 411–435.

Hébert, B., & Woodford, M. (2017). Rational inattention and sequential information sampling. NBER Working Paper No. 23787. Cambridge, MA: National Bureau of Economic Research. https://doi.org/10.3386/w23787.

Hergovich, A., Schott, R., & Burger, C. (2010). Biased evaluation of abstracts depending on topic and conclusion: Further evidence of a confirmation bias within scientific psychology. Current Psychology, 29(3), 188–209. https://doi.org/10.1007/s12144-010-9087-5.

Jerath, K., & Ren, Q. (2018). Consumer attention allocation and firm information design. Summer Institute in Competitive Strategy (SICS 2018). https://biomsymposium.org/wp-content/uploads/sites/21/2019/03/Consumer-attention-allocation-and-Firm-Info-Design.pdf.

Keren, G. (1987). Facing uncertainty in the game of bridge: A calibration study. Organizational Behavior and Human Decision Processes, 39(1), 98–114.

Klayman, J., & Ha, Y.-W. (1987). Confirmation, disconfirmation, and information in hypothesis testing. Psychological Review, 94(2), 211–228. https://doi.org/10.1037/0033-295X.94.2.211.

Kunda, Z. (1999). Social cognition: Making sense of people. Cambridge: MIT Press. ISBN 978-0-262-61143-5.

Lee, C. J., Sugimoto, C. R., Zhang, G., & Cronin, B. (2013). Bias in peer review. Journal of the American Society for Information Science and Technology, 64(1), 2–17.

Lewicka, M. (1998). Confirmation bias: Cognitive error or adaptive strategy of action control? In M. Kofta, G. Weary, & G. Sedek (Eds.), Personal control in action: Cognitive and motivational mechanisms (pp. 233–255). Berlin: Springer. ISBN 978-0-306-45720-3.

Lord, C. G., Ross, L., & Lepper, M. R. (1979). Biased assimilation and attitude polarization: The effects of prior theories on subsequently considered evidence. Journal of Personality and Social Psychology, 37(11), 2098–2109.

MacCoun, R. J. (1998). Biases in the interpretation and use of research results. Annual Review of Psychology, 49, 259–287. https://doi.org/10.1146/annurev.psych.49.1.259.

Mahoney, M. J. (1977). Publication prejudices: An experimental study of confirmatory bias in the peer review system. Cognitive Therapy and Research, 1(2), 161–175. https://doi.org/10.1007/BF01173636.

Mason, L., & Scirica, F. (2006). Prediction of students’ argumentation skills about controversial topics by epistemological understanding. Learning and Instruction, 16(5), 492–509. https://doi.org/10.1016/j.learninstruc.2006.09.007.

Miller, J. G. (1960). Information input overload and psychopathology. The American Journal of Psychiatry, 116, 695–704. https://doi.org/10.1176/ajp.116.8.695.

Morris, S. E., & Strack, P. (2019). The Wald problem and the relation of sequential sampling and ex-ante information costs. Available at SSRN: https://ssrn.com/abstract=2991567 or https://doi.org/10.2139/ssrn.2991567.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175–220. https://doi.org/10.1037/1089-2680.2.2.175.

Oswald, M. E., & Grosjean, S. (2004). Confirmation bias. In R. F. Pohl (Ed.), Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory (pp. 79–96). Hove: Psychology Press. ISBN 978-1-84169-351-4.

Rabin, M., & Schrag, J. L. (1999). First impressions matter: A model of confirmatory bias. The Quarterly Journal of Economics, 114(1), 37–82. https://doi.org/10.1162/003355399555945.

Roetzel, P. (2018). Information overload in the information age: A review of the literature from business administration, business psychology, and related disciplines with a bibliometric approach and framework development. Business Research. https://doi.org/10.1007/s40685-018-0069-z.

Souder, L. (2011). The ethics of scholarly peer review: A review of the literature. Learned Publishing, 24(1), 55–72.

Wason, P. C. (1960). On the failure to eliminate hypotheses in a conceptual task. Quarterly Journal of Experimental Psychology, 12(3), 129–140. https://doi.org/10.1080/17470216008416717.

Weinstock, M. (2009). Relative expertise in an everyday reasoning task: Epistemic understanding, problem representation, and reasoning competence. Learning and Individual Differences, 19(4), 423–434. https://doi.org/10.1016/j.lindif.2009.03.003.

Acknowledgements

This research was sponsored by the Spanish Board for Science, Technology, and Innovation under Grant TIN2017-85542-P, and co-financed by European FEDER funds. Sincere thanks are due to the reviewers for their constructive suggestions.

Appendices

Appendix 1: Confirmatory bias in peer review

To find the optimal manuscript evaluation strategy, given by \(\delta _0\) and \(\delta _1\), the reviewer trades off the value and cost of review information about the manuscript quality. The optimal evaluation strategy is thus the one that maximizes the expected utility of learning about the research quality:

subject to \(\delta _0 + \delta _1 >1\), and where

and

Here, the value of review satisfies:

since (by Bayes’ theorem)

and

where \(q = \Pr ( X=1)\), \(\delta _0 = \Pr ( S=0 \ | \ X=0)\), and \(\delta _1 = \Pr ( S=1 \ | \ X=1)\). Therefore, the expected value of review information increases as the review signal becomes more accurate (i.e., with both \(\delta _0\) and \(\delta _1\)), since

and

On the other hand, the Shannon mutual information between the manuscript quality \(X\) and the review signal \(S\) is given by (Cover and Thomas 2006):

where

and

Therefore, it follows that

Following Jerath and Ren (2018), the objective function of this optimization problem is strictly concave. Therefore, its maximum is characterized by the first-order conditions, which require the partial derivatives of the objective function with respect to \(\delta _0\) and \(\delta _1\) to be zero.
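A sketch of the problem and its first-order conditions, under assumptions that are ours rather than the authors': the reviewer recommends acceptance if and only if \(S=1\); accepting a good manuscript pays \(U_1>0\), accepting a poor one pays \(U_0<0\), and rejecting pays 0; information is measured in nats, with cost \(\lambda\, I(X;S)\). Under these assumptions the value of review is linear in \((\delta_0,\delta_1)\), and the mutual information is convex in \((\delta_0,\delta_1)\) for a fixed prior \(q\), which is consistent with the concavity invoked above.

```latex
% Assumed payoffs: U_1 > 0 (accept good), U_0 < 0 (accept poor), 0 (reject); natural logs.
P = \Pr(S=1) = q\,\delta_1 + (1-q)(1-\delta_0), \qquad
\Pr(X=1 \mid S=1) = \frac{q\,\delta_1}{P}, \qquad
\Pr(X=1 \mid S=0) = \frac{q\,(1-\delta_1)}{1-P}

V(\delta_0,\delta_1) = P\left[\Pr(X=1 \mid S=1)\,U_1 + \Pr(X=0 \mid S=1)\,U_0\right]
  = q\,\delta_1 U_1 + (1-q)(1-\delta_0)\,U_0

I(X;S) = H(S) - H(S \mid X) = h(P) - q\,h(\delta_1) - (1-q)\,h(\delta_0),
\qquad h(p) = -p\ln p - (1-p)\ln(1-p)

\max_{\delta_0+\delta_1>1}\; J(\delta_0,\delta_1) = V(\delta_0,\delta_1) - \lambda\, I(X;S)

\frac{\partial J}{\partial \delta_0} = -(1-q)\,U_0 - \lambda (1-q)\ln\frac{\delta_0\,P}{(1-\delta_0)(1-P)} = 0
\;\Longleftrightarrow\; \frac{\delta_0}{1-\delta_0}\cdot\frac{P}{1-P} = e^{k}

\frac{\partial J}{\partial \delta_1} = q\,U_1 - \lambda\, q\ln\frac{\delta_1\,(1-P)}{(1-\delta_1)\,P} = 0
\;\Longleftrightarrow\; \frac{\delta_1}{1-\delta_1}\cdot\frac{1-P}{P} = e^{l-k}
```

The marginal values \(\partial V/\partial \delta_0 = -(1-q)U_0\) and \(\partial V/\partial \delta_1 = qU_1\) are both positive, in line with the statement that the value of review increases with both accuracies. With \(k = -U_0/\lambda\) and \(l = (U_1-U_0)/\lambda\) as defined at the end of this appendix, multiplying the two conditions gives \(\frac{\delta_0\,\delta_1}{(1-\delta_0)(1-\delta_1)} = e^{l}\).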

Then, the value \(\delta _0^*\) of \(\delta _0\) that maximizes the objective function must satisfy

or equivalently

Similarly, the value \(\delta _1^*\) of \(\delta _1\) that maximizes the objective function must satisfy

or equivalently

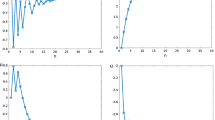

Solving these equations, we get

and

Then, taking into account the constraints \(\delta _0^* + \delta _1^* >1\), \(0< \delta _1^* < 1\), and \(0< \delta _0^* < 1\), it follows that

and

where \(k = \frac{-U_0 }{\lambda }\), \(l = \frac{U_1-U_0 }{\lambda }\).
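Under assumptions that are ours rather than the authors' (the reviewer recommends acceptance if and only if \(S=1\); accepting a good manuscript pays \(U_1>0\), accepting a poor one pays \(U_0<0\), rejecting pays 0; the information cost is \(\lambda\) times the mutual information in nats), the first-order conditions can be solved in closed form as \(\Pr(S=1) = \frac{q e^{k}}{e^{k}-1} - \frac{(1-q) e^{k}}{e^{l}-e^{k}}\), \(\delta_1^* = \frac{\Pr(S=1)\, e^{l-k}}{\Pr(S=1)\, e^{l-k} + 1 - \Pr(S=1)}\) and \(\delta_0^* = \frac{1-\Pr(S=1)}{1-\Pr(S=1) + \Pr(S=1)\, e^{-k}}\), valid when \(0<\Pr(S=1)<1\). These expressions may be written differently in the original derivation. The Python sketch below evaluates them and checks them against a brute-force grid search of the objective; all function names are hypothetical.

```python
import math

def h(p):
    """Binary entropy in nats (natural logarithm assumed)."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1 - p) * math.log(1 - p)

def objective(q, d0, d1, u1, u0, lam):
    """Value of review minus lam * I(X;S), under the assumed payoffs."""
    p = q * d1 + (1 - q) * (1 - d0)                # Pr(S=1)
    info = h(p) - q * h(d1) - (1 - q) * h(d0)      # I(X;S) = H(S) - H(S|X)
    return q * d1 * u1 + (1 - q) * (1 - d0) * u0 - lam * info

def optimal_strategy(q, u1, u0, lam):
    """Closed-form (delta0*, delta1*, Pr(S=1)) with k = -U0/lam and l = (U1-U0)/lam."""
    k, l = -u0 / lam, (u1 - u0) / lam
    ek, el = math.exp(k), math.exp(l)
    p = q * ek / (ek - 1) - (1 - q) * ek / (el - ek)
    if not 0.0 < p < 1.0:
        raise ValueError("no interior solution with 0 < Pr(S=1) < 1")
    d1 = p * math.exp(l - k) / (p * math.exp(l - k) + 1 - p)
    d0 = (1 - p) / (1 - p + p * math.exp(-k))
    return d0, d1, p

def grid_argmax(q, u1, u0, lam, n=400):
    """Brute-force maximizer over grid points (i/n, j/n) with delta0 + delta1 > 1."""
    best = (-math.inf, None, None)
    for i in range(1, n):
        for j in range(1, n):
            if i + j > n:  # constraint delta0 + delta1 > 1, checked on integers
                d0, d1 = i / n, j / n
                val = objective(q, d0, d1, u1, u0, lam)
                if val > best[0]:
                    best = (val, d0, d1)
    return best[1], best[2]

if __name__ == "__main__":
    d0, d1, p = optimal_strategy(0.3, 2.0, -1.0, 1.0)
    print(f"closed form: delta0={d0:.4f}, delta1={d1:.4f}, Pr(S=1)={p:.4f}")
    print("grid search:", grid_argmax(0.3, 2.0, -1.0, 1.0))
```

The grid search is deliberately naive: because the objective is concave, its maximizer should land within one grid step or so of the closed form, which makes it a cheap cross-check rather than a solver.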

Appendix 2: Probability of positive review signal

From Appendix 1, we have that

Following Jerath and Ren (2018), and denoting

it follows that if \(q/(1-q) > \sigma\), then \(\frac{d \Pr (S=1) }{d \lambda } >0\) and \(\Pr (S=1)\) increases with evaluation cost \(\lambda\); otherwise, if \(q/(1-q) < \sigma\), then \(\frac{d \Pr (S=1) }{d \lambda } <0\) and \(\Pr (S=1)\) decreases with evaluation cost \(\lambda\).
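A numerical sketch of this sign rule, under assumptions that are ours rather than the authors' stated expressions: the reviewer recommends acceptance if and only if \(S=1\), payoffs are \(U_1>0\) for accepting a good manuscript, \(U_0<0\) for accepting a poor one and 0 for rejecting, and information is measured in nats. The optimum then gives \(\Pr(S=1) = \frac{q}{1-e^{U_0/\lambda}} - \frac{1-q}{e^{U_1/\lambda}-1}\), and signing its derivative with respect to \(\lambda\) yields \(\sigma = \frac{U_1 e^{U_1/\lambda}(1-e^{U_0/\lambda})^2}{(-U_0)\, e^{U_0/\lambda}(e^{U_1/\lambda}-1)^2}\). The helper names below are hypothetical.

```python
import math

def prob_positive(q, u1, u0, lam):
    """Assumed closed form of Pr(S=1) at the optimum; meaningful only while in (0, 1)."""
    # -expm1(x) = 1 - e^x and expm1(x) = e^x - 1, computed accurately for small x.
    return q / (-math.expm1(u0 / lam)) - (1 - q) / math.expm1(u1 / lam)

def dprob_dlam(q, u1, u0, lam):
    """Analytic derivative of prob_positive with respect to lam."""
    pos = q * (-u0) * math.exp(u0 / lam) / (lam * math.expm1(u0 / lam)) ** 2
    neg = (1 - q) * u1 * math.exp(u1 / lam) / (lam * math.expm1(u1 / lam)) ** 2
    return pos - neg

def sigma(u1, u0, lam):
    """Threshold on the prior odds: dPr(S=1)/dlam > 0 iff q/(1-q) > sigma."""
    return (u1 * math.exp(u1 / lam) * math.expm1(u0 / lam) ** 2) / (
        -u0 * math.exp(u0 / lam) * math.expm1(u1 / lam) ** 2)

if __name__ == "__main__":
    q, u1, u0, lam = 0.3, 2.0, -1.0, 1.0
    odds = q / (1 - q)
    print(f"q/(1-q)={odds:.4f}, sigma={sigma(u1, u0, lam):.4f}, "
          f"dPr(S=1)/dlam={dprob_dlam(q, u1, u0, lam):+.4f}")
```

With \(q=0.3\), \(U_1=2\), \(U_0=-1\) and \(\lambda=1\), \(\sigma\) is roughly 0.39, below the prior odds of about 0.43, so \(\Pr(S=1)\) increases with \(\lambda\) at that point.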

As \(\lambda \rightarrow \infty\), \(\sigma \rightarrow \frac{-U_0}{U_1}\). Moreover, \(\sigma\) increases with \(\lambda\) if \(U_1 > |U_0|\), and decreases with \(\lambda\) if \(U_1 < |U_0|\).
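A short derivation of the limit, assuming (our reconstruction rather than the authors' stated formula) that \(\sigma = \frac{U_1 e^{U_1/\lambda}(1-e^{U_0/\lambda})^2}{(-U_0)\, e^{U_0/\lambda}(e^{U_1/\lambda}-1)^2}\), which is what one obtains by signing \(\frac{d \Pr (S=1)}{d\lambda}\) when \(\Pr(S=1) = \frac{q}{1-e^{U_0/\lambda}} - \frac{1-q}{e^{U_1/\lambda}-1}\) (payoffs \(U_1>0\) for accepting a good manuscript, \(U_0<0\) for accepting a poor one, 0 for rejecting; information in nats). Let \(\varphi(x) = \sinh(x)/x\), which tends to 1 as \(x \rightarrow 0\) and increases for \(x>0\). The identity \(e^{x}/(e^{x}-1)^2 = 1/(4\sinh^2(x/2))\) then gives:

```latex
\sigma(\lambda)
= \frac{U_1\,\sinh^2\!\left(\frac{U_0}{2\lambda}\right)}{(-U_0)\,\sinh^2\!\left(\frac{U_1}{2\lambda}\right)}
= \frac{-U_0}{U_1}\left[\frac{\varphi\!\left(\frac{|U_0|}{2\lambda}\right)}{\varphi\!\left(\frac{U_1}{2\lambda}\right)}\right]^2
\;\longrightarrow\; \frac{-U_0}{U_1} \quad \text{as } \lambda \rightarrow \infty .
```

When \(U_1 > |U_0|\), the bracketed ratio is below 1, so \(\sigma\) approaches \(-U_0/U_1\) from below; when \(U_1 < |U_0|\), it is above 1, so \(\sigma\) approaches the same limit from above.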

Therefore, given that the gain of accepting a quality manuscript is larger than the loss of accepting a poor-quality work (\(U_1 > | U_0 |\)), if \(q/(1-q) < \frac{-U_0}{U_1}\) (or equivalently, \(q < \frac{- U_0}{U_1 - U_0}\)), then, since \(\sigma\) increases with \(\lambda\) towards \(\frac{-U_0}{U_1} > q/(1-q)\), there exists \(\hat{\lambda }\) such that for \(\lambda > \hat{\lambda }\) (i.e., when the evaluation cost is high enough) \(\frac{d \Pr (S=1) }{d \lambda } <0\) and \(\Pr (S=1)\) decreases with evaluation cost \(\lambda\).

Furthermore, given that the loss of accepting a poor-quality work is larger than the gain of accepting a quality manuscript (\(U_1 < | U_0 |\)), if \(q/(1-q) < \frac{-U_0}{U_1}\) (or equivalently, \(q < \frac{- U_0}{U_1 - U_0}\)), then, since \(\sigma\) decreases with \(\lambda\) towards \(\frac{-U_0}{U_1}\), we have \(\sigma > \frac{-U_0}{U_1} > q/(1-q)\) for every \(\lambda\). This implies that \(\frac{d \Pr (S=1) }{d \lambda } <0\), and thus the positive review probability \(\Pr (S=1)\) always decreases with the manuscript evaluation cost \(\lambda\).
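Both cases can be checked numerically in a short sketch. It assumes (our reconstruction, not necessarily the authors' expressions) that the reviewer recommends acceptance if and only if \(S=1\), that payoffs are \(U_1>0\), \(U_0<0\) and 0 for rejection, that information is measured in nats, and hence that \(\Pr(S=1) = \frac{q}{1-e^{U_0/\lambda}} - \frac{1-q}{e^{U_1/\lambda}-1}\) with threshold \(\sigma = \frac{U_1 e^{U_1/\lambda}(1-e^{U_0/\lambda})^2}{(-U_0)\, e^{U_0/\lambda}(e^{U_1/\lambda}-1)^2}\). The hypothetical helper `lambda_hat` locates \(\hat{\lambda}\) by solving \(\sigma(\lambda) = q/(1-q)\) with bisection, relying on \(\sigma\) increasing in \(\lambda\) when \(U_1 > |U_0|\).

```python
import math

def prob_positive(q, u1, u0, lam):
    """Assumed closed form of Pr(S=1) at the optimum; meaningful only while in (0, 1)."""
    return q / (-math.expm1(u0 / lam)) - (1 - q) / math.expm1(u1 / lam)

def sigma(u1, u0, lam):
    """Threshold on the prior odds q/(1-q) that decides the sign of dPr(S=1)/dlam."""
    return (u1 * math.exp(u1 / lam) * math.expm1(u0 / lam) ** 2) / (
        -u0 * math.exp(u0 / lam) * math.expm1(u1 / lam) ** 2)

def lambda_hat(q, u1, u0, lo=0.05, hi=1e6, iters=200):
    """Cost at which sigma(lam) crosses q/(1-q), for U1 > |U0| and q/(1-q) < -U0/U1.

    Beyond this value, Pr(S=1) decreases with lam (sigma is increasing in this case)."""
    odds = q / (1 - q)
    if not (u1 > -u0 and odds < -u0 / u1):
        raise ValueError("requires U1 > |U0| and q/(1-q) < -U0/U1")
    if sigma(u1, u0, lo) >= odds:
        return lo                      # already past the threshold at the lower bracket
    if sigma(u1, u0, hi) < odds:
        raise ValueError("threshold lies beyond the upper bracket")
    for _ in range(iters):
        mid = math.sqrt(lo * hi)       # bisection on a logarithmic scale of lam
        if sigma(u1, u0, mid) < odds:
            lo = mid
        else:
            hi = mid
    return hi

if __name__ == "__main__":
    # Case U1 > |U0|: Pr(S=1) rises with lam, then falls once lam exceeds lambda_hat.
    print("lambda_hat:", lambda_hat(0.25, 2.0, -1.0))
    # Case U1 < |U0|: sigma stays above -U0/U1 = 2, so Pr(S=1) falls whenever q/(1-q) < 2.
    for lam in (0.2, 0.5, 1.0, 2.0):
        print(lam, sigma(1.0, -2.0, lam), prob_positive(0.5, 1.0, -2.0, lam))
```

For \(q=0.25\), \(U_1=2\) and \(U_0=-1\) (so that \(q < \frac{-U_0}{U_1-U_0} = 1/3\)), the threshold falls between 0.75 and 0.8. Bisecting on a logarithmic scale is a design choice: plausible cost levels span several orders of magnitude, and `math.expm1` keeps \(\sigma\) accurate at large \(\lambda\), where \(e^{U/\lambda}-1\) is tiny.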

About this article

Cite this article

Garcia, J.A., Rodriguez-Sánchez, R. & Fdez-Valdivia, J. Confirmatory bias in peer review. Scientometrics 123, 517–533 (2020). https://doi.org/10.1007/s11192-020-03357-0

DOI: https://doi.org/10.1007/s11192-020-03357-0