Abstract

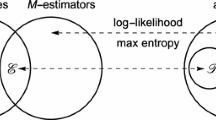

In view of its ongoing importance for a variety of practical applications, feature selection via ℓ1-regularization methods such as the lasso has been the subject of extensive theoretical as well as empirical investigation. Despite its popularity, ℓ1-regularization alone has been criticized as inadequate or ineffective, notably in situations where additional structural knowledge about the predictors should be taken into account. This has stimulated the development either of systematically different regularization methods or of double regularization approaches, which combine ℓ1-regularization with a second kind of regularization designed to capture additional problem-specific structure. One instance thereof is the ‘structured elastic net’, a generalization of the proposal in Zou and Hastie (J. R. Stat. Soc. Ser. B 67:301–320, 2005), studied in Slawski et al. (Ann. Appl. Stat. 4(2):1056–1080, 2010) for the class of generalized linear models.
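
The abstract does not write out the penalty. As a minimal sketch, assuming the form used in Slawski et al. (2010), the structured elastic net adds λ1‖β‖1 + λ2 βᵀΛβ to the loss, where Λ is a symmetric positive semidefinite matrix encoding structure among the predictors; with Λ equal to the identity this reduces to the elastic net penalty of Zou and Hastie (2005). The helper names `first_difference_lambda` and `structured_elastic_net_penalty` are hypothetical, and the first-difference choice Λ = DᵀD (which shrinks neighbouring coefficients towards each other, as for ordered predictors such as signals) is only one illustrative option.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def first_difference_lambda(p: int) -> Matrix:
    """Hypothetical structure matrix Lambda = D^T D, where D is the (p-1) x p
    first-difference matrix, so beta^T Lambda beta = sum_j (beta[j+1] - beta[j])**2."""
    lam = [[0.0] * p for _ in range(p)]
    for j in range(p - 1):
        # Row j of D has -1 at column j and +1 at column j+1; add its outer product.
        lam[j][j] += 1.0
        lam[j + 1][j + 1] += 1.0
        lam[j][j + 1] -= 1.0
        lam[j + 1][j] -= 1.0
    return lam


def structured_elastic_net_penalty(
    beta: Sequence[float], lam1: float, lam2: float, Lambda: Matrix
) -> float:
    """Assumed penalty form: lam1 * ||beta||_1 + lam2 * beta^T Lambda beta."""
    p = len(beta)
    l1_part = sum(abs(b) for b in beta)
    quad_part = sum(beta[i] * Lambda[i][j] * beta[j] for i in range(p) for j in range(p))
    return lam1 * l1_part + lam2 * quad_part


# Differences (1, 2) give beta^T Lambda beta = 1 + 4 = 5, so 0.5 * 7 + 2.0 * 5 = 13.5.
example = structured_elastic_net_penalty([1.0, 2.0, 4.0], 0.5, 2.0, first_difference_lambda(3))
```

Passing the identity matrix as `Lambda` gives the plain elastic net penalty, which is a convenient sanity check for any other choice of structure matrix.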

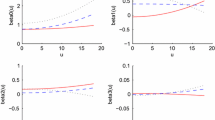

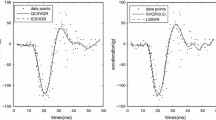

In this paper, we elaborate on the structured elastic net regularizer in conjunction with two important loss functions: the check loss of quantile regression and the hinge loss of support vector classification. We develop solution path algorithms that compute the whole range of solutions as one regularization parameter varies while the other is kept fixed.
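
To make the two losses concrete, here is a minimal Python sketch. The loss definitions are the standard ones: the check loss ρτ(r) = r(τ − 1{r < 0}) of quantile regression (Koenker 2005) and the hinge loss max(0, 1 − y f) with labels y ∈ {−1, +1}. How they are combined with the penalty is an assumption: the objective sums the losses over observations, leaves the intercept unpenalized, and takes the penalty as an arbitrary callable. All function names are hypothetical, and the sketch only evaluates the objective at given coefficients; it does not implement the solution path algorithms.

```python
from typing import Callable, Sequence


def check_loss(r: float, tau: float) -> float:
    """Check (pinball) loss of quantile regression: r * (tau - 1{r < 0})."""
    return r * (tau - (1.0 if r < 0 else 0.0))


def hinge_loss(y: float, f: float) -> float:
    """Hinge loss of support vector classification, labels y in {-1, +1}."""
    return max(0.0, 1.0 - y * f)


def linear_predictor(x: Sequence[float], beta0: float, beta: Sequence[float]) -> float:
    """beta0 + x^T beta (the intercept beta0 is assumed to be unpenalized)."""
    return beta0 + sum(xj * bj for xj, bj in zip(x, beta))


def regularized_objective(
    X: Sequence[Sequence[float]],
    y: Sequence[float],
    beta0: float,
    beta: Sequence[float],
    loss: Callable[[float, float], float],
    penalty: Callable[[Sequence[float]], float],
) -> float:
    """Sum of loss(y_i, f_i) over observations plus penalty(beta)."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        total += loss(y_i, linear_predictor(x_i, beta0, beta))
    return total + penalty(beta)


X = [[1.0, 0.0], [0.0, 1.0]]
beta = [0.5, 0.5]
# Stand-in penalty (plain l1 norm); a structured elastic net penalty would go here.
l1 = lambda b: sum(abs(v) for v in b)

# Classification: hinge losses 0.5 + 1.5, plus penalty 1.0.
svm_obj = regularized_objective(X, [1.0, -1.0], 0.0, beta, hinge_loss, l1)
# Quantile regression (tau = 0.25): the check loss acts on the residual y - f.
qr_obj = regularized_objective(
    X, [2.0, -1.0], 0.0, beta, lambda yi, fi: check_loss(yi - fi, 0.25), l1
)
```

The quantile loss is wrapped in a lambda because it depends on the residual y − f, whereas the hinge loss depends on the margin y f; keeping the loss as a parameter lets both problems share the same objective.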

The methodology and practical performance of our approach are illustrated by means of case studies from image classification and climate science.

References

Bartlett, P., Jordan, M., McAuliffe, J.: Convexity, classification, and risk bounds. J. Am. Stat. Assoc. 101, 138–156 (2006)

Bennett, K., Mangasarian, O.: Multicategory separation via linear programming. Optim. Methods Softw. 3, 27–39 (1993)

Bondell, H., Reich, B.: Simultaneous regression shrinkage, variable selection and clustering of predictors with OSCAR. Biometrics 64, 115–123 (2008)

Bradley, P., Mangasarian, O.: Feature selection via concave minimization and support vector machines. In: International Conference on Machine Learning (1998)

Cristianini, N., Shawe-Taylor, J.: An Introduction to Support Vector Machines. Cambridge University Press, Cambridge (2000)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression (with discussion). Ann. Stat. 32, 407–499 (2004)

El Anbari, M., Mkhadri, A.: Penalized regression combining the L1 norm and a correlation based penalty. Technical report, Université Paris Sud 11 (2008)

Hans, C.: Bayesian lasso regression. Biometrika 96, 221–229 (2009)

Hans, C.: Model uncertainty and variable selection in Bayesian lasso regression. Stat. Comput. 20, 221–229 (2010)

Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning. Springer, New York (2001)

Hastie, T., Rosset, S., Tibshirani, R., Zhu, J.: The entire regularization path for the support vector machine. J. Mach. Learn. Res. 5, 1391–1415 (2004)

James, G., Wang, J., Zhu, J.: Functional linear regression that’s interpretable. Ann. Stat. 37, 2083–2108 (2008)

Koenker, R.: Quantile Regression. Cambridge University Press, Cambridge (2005)

Lancaster, T., Jun, S.J.: Bayesian quantile regression methods. J. Appl. Econom. 25, 287–307 (2009)

Landau, S., Ellison-Wright, I., Bullmore, E.: Tests for a difference in timing of physiological response between two brain regions measured by using functional magnetic resonance imaging. Appl. Stat. 53, 63–82 (2003)

Le Cun, Y., Boser, B., Denker, J., Henderson, D., Howard, R., Hubbard, W., Jackel, L.: Backpropagation applied to handwritten zip code recognition. Neural Comput. 2, 541–551 (1989)

Li, C., Li, H.: Variable selection and regression analysis for graph-structured covariates with an application to genomics. Ann. Appl. Stat. 4(3), 1498–1516 (2010)

Li, Y., Zhu, J.: L1-norm quantile regression. J. Comput. Graph. Stat. 17, 163–185 (2008)

Li, Y., Liu, Y., Zhu, J.: Quantile regression in reproducing kernel Hilbert spaces. J. Am. Stat. Assoc. 102, 255–268 (2007)

Lin, Y.: Support vector machines and the Bayes rule in classification. Data Min. Knowl. Discov. 6, 259–275 (2002)

Park, T., Casella, G.: The Bayesian lasso. J. Am. Stat. Assoc. 103, 681–686 (2008)

Ramsay, J., Silverman, B.: Functional Data Analysis. Springer, New York (2006)

Rosset, S.: Bi-level path following for cross validated solution of kernel quantile regression. In: International Conference on Machine Learning (2008)

Rosset, S., Zhu, J.: Piecewise linear regularized solution paths. Ann. Stat. 35, 1012–1030 (2007)

Schölkopf, B., Smola, A.: Learning with Kernels. MIT Press, Cambridge (2002)

Slawski, M., zu Castell, W., Tutz, G.: Feature selection guided by structural information. Ann. Appl. Stat. 4(2), 1056–1080 (2010)

Sollich, P.: Bayesian methods for support vector machines: evidence and predictive class probabilities. Mach. Learn. 46, 21–52 (2002)

Stein, M.: Interpolation of Spatial Data. Springer, New York (1999)

Steinwart, I., Christmann, A.: Support Vector Machines. Springer, Berlin (2008)

Takeuchi, I., Le, Q., Sears, T., Smola, A.: Nonparametric quantile regression. J. Mach. Learn. Res. 7, 1231–1264 (2006)

Tibshirani, R.: Regression shrinkage and variable selection via the lasso. J. R. Stat. Soc. Ser. B 58, 671–686 (1996)

Tibshirani, R., Saunders, M., Rosset, S., Zhu, J., Knight, K.: Sparsity and smoothness via the fused lasso. J. R. Stat. Soc. Ser. B 67, 91–108 (2005)

Tutz, G., Gertheiss, J.: Feature extraction in signal regression: a boosting technique for functional data regression. J. Comput. Graph. Stat. 19, 154–174 (2010)

Tutz, G., Ulbricht, J.: Penalized regression with correlation based penalty. Stat. Comput. 19, 239–253 (2009)

Wang, L., Shen, X.: Multi-category support vector machines, feature selection, and solution path. Stat. Sin. 16, 617–634 (2005)

Wang, L., Zhu, J., Zou, H.: The doubly regularized support vector machine. Stat. Sin. 16, 589–616 (2006)

Wood, S.: R package gamair: Data for “GAMs: An Introduction with R”, Version 0.0-4. Available from www.r-project.org (2006)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B 68, 49–67 (2006)

Zhao, P., Rocha, G., Yu, B.: The composite absolute penalties family for grouped and hierarchical variable selection. Ann. Stat. 37, 3468–3497 (2009)

Zhu, J., Rosset, S., Hastie, T., Tibshirani, R.: 1-norm support vector machines. Adv. Neural Inf. Process. Syst. 16, 55–63 (2003)

Zou, H., Hastie, T.: Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B 67, 301–320 (2005)

Cite this article

Slawski, M. The structured elastic net for quantile regression and support vector classification. Stat Comput 22, 153–168 (2012). https://doi.org/10.1007/s11222-010-9214-z
