Abstract

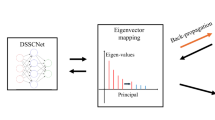

Dimensionality reduction techniques are important because high-dimensional data are ubiquitous in real-world applications, especially in the era of big data. In this paper, we propose a novel supervised dimensionality reduction method called appropriate points choosing based DAG-DNE (Apps-DAG-DNE). Apps-DAG-DNE chooses appropriate points to construct its adjacency graphs. It chooses nearest neighbors to construct the inter-class graph, which builds a margin between samples that belong to different classes, and it chooses farthest points to construct the intra-class graph, which establishes relationships between remote samples if and only if they belong to the same class. Apps-DAG-DNE can thus find a good representation of the original data. To investigate its performance, we compare Apps-DAG-DNE with state-of-the-art dimensionality reduction methods on the Caltech-Leaves and Yale datasets. Extensive experiments demonstrate that the proposed Apps-DAG-DNE outperforms the other dimensionality reduction methods and achieves state-of-the-art performance for image classification.
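The point-selection rule described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `build_adjacency_graphs`, the single neighborhood size `k`, the binary edge weights, the squared Euclidean distance, and the symmetrization step are all assumptions made for illustration. The abstract specifies only that the inter-class graph uses nearest neighbors from different classes and the intra-class graph uses farthest points from the same class.

```python
import numpy as np

def build_adjacency_graphs(X, y, k=2):
    """Hypothetical helper: return (intra, inter) binary adjacency matrices.

    X: array of shape (n_samples, n_features); y: array of n_samples labels.
    k is an assumed neighborhood size shared by both graphs.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n = X.shape[0]

    # Pairwise squared Euclidean distances (assumed metric).
    diff = X[:, None, :] - X[None, :, :]
    dist = np.einsum("ijk,ijk->ij", diff, diff)

    intra = np.zeros((n, n), dtype=int)
    inter = np.zeros((n, n), dtype=int)
    idx = np.arange(n)
    for i in range(n):
        same = np.flatnonzero((y == y[i]) & (idx != i))
        other = np.flatnonzero(y != y[i])
        # Intra-class graph: connect to the k farthest same-class points.
        far = same[np.argsort(dist[i, same])[::-1][:k]]
        # Inter-class graph: connect to the k nearest different-class points.
        near = other[np.argsort(dist[i, other])[:k]]
        intra[i, far] = 1
        inter[i, near] = 1

    # Assumption: an edge exists if either endpoint selected the other.
    intra = np.maximum(intra, intra.T)
    inter = np.maximum(inter, inter.T)
    return intra, inter
```

Calling `build_adjacency_graphs(X, y, k=1)` on a small labeled dataset returns two symmetric 0/1 matrices; by construction, the intra-class matrix has edges only between samples that share a label, and the inter-class matrix has edges only between samples with different labels.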

Acknowledgements

This work was supported by the National Science Foundation of China (61571066 and 61472047).

Cite this article

Ding, C., Wang, S. Appropriate points choosing for subspace learning over image classification. J Supercomput 75, 688–703 (2019). https://doi.org/10.1007/s11227-018-2687-9

DOI: https://doi.org/10.1007/s11227-018-2687-9