Abstract

Protein secondary structure is the local conformation assigned to a protein sequence with the help of its three-dimensional structure. Assigning the local conformation to protein sequences is computationally demanding. There is a vast literature on protein secondary structure prediction (more than 20 techniques), but to date none of the existing techniques is entirely accurate. There is therefore ample room for new prediction models that improve accuracy. In the present study, deep learning models combined with the ensemble techniques AdaBoost and Bagging are proposed to predict protein secondary structure. Data from the standard datasets CB513, RS126, PTOP742, PSA472, and MANESH have been used for training and testing; these datasets have less than 25% redundancy. The model is evaluated using Q8 and Q3 cross-validation accuracy, class precision, class recall, the kappa factor, and a blind test, i.e., testing on a dataset that is not used for training. Combining ensembling with variability across datasets can remove the bias of each dataset by balancing it and making the features more distinguishable, which improves accuracy compared with conventional and existing techniques. In the blind test, the proposed model shows an average improvement of ~2% in Q8 accuracy and ~3% in Q3 accuracy over existing methods.

References

Hoye AT (2010) Synthesis of natural and non-natural polycyclic alkaloids. Doctoral dissertation, University of Pittsburgh

Chou PY, Fasman GD (1974) Prediction of protein conformation. Biochemistry 13(2):222–245

Geourjon C, Deleage G (1995) SOPMA: significant improvements in protein secondary structure prediction by consensus prediction from multiple alignments. Bioinformatics 11(6):681–684

Garnier J, Gibrat JF, Robson B (1996) GOR method for predicting protein secondary structure from amino acid sequence. Methods Enzymol 266:540–553

Rost B, Sander C (1994) Combining evolutionary information and neural networks to predict protein secondary structure. Proteins Struct Funct Bioinform 19(1):55–72

Jones DT (1999) Protein secondary structure prediction based on position-specific scoring matrices. J Mol Biol 292(2):195–202

Pollastri G, Przybylski D, Rost B, Baldi P (2002) Improving the prediction of protein secondary structure in three and eight classes using recurrent neural networks and profiles. Proteins Struct Funct Bioinform 47(2):228–235

Drozdetskiy A, Cole C, Procter J, Barton GJ (2015) JPred4: a protein secondary structure prediction server. Nucleic Acids Res 43(1):389–394

Wang Z, Zhao F, Peng J, Xu J (2011) Protein 8-class secondary structure prediction using conditional neural fields. Proteomics 11(19):3786–3792

Awais M, Iqbal MJ, Ahmad I, Alassafi MO, Alghamdi R, Basheri M, Waqas M (2019) Real-time surveillance through face recognition using hog and feedforward neural networks. IEEE Access 7:121236–121244

Yusuf SA, Alshdadi AA, Alghamdi R, Alassafi MO, Garrity DJ (2020) An autoregressive exogenous neural network to model fire behaviour via a naïve bayes filter. IEEE Access. https://doi.org/10.1109/ACCESS.2020.2997016

Bryson K, McGuffin LJ, Marsden RL, Ward JJ, Sodhi JS, Jones DT (2005) Protein structure prediction servers at University College London. Nucleic Acids Res 33(2):36–38

Zhou GP, Assa-Munt N (2001) Some insights into protein structural class prediction. Proteins Struct Funct Bioinform 44(1):57–59

Guo Y, Wang B, Li W, Yang B (2018) Protein secondary structure prediction improved by recurrent neural networks integrated with two-dimensional convolutional neural networks. Bioinform Comput Biol 16(5):185–200

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Heffernan R, Paliwal K, Lyons J, Dehzangi A, Sharma A, Wang J, Sattar A, Yang Y, Zhou Y (2015) Improving prediction of secondary structure, local backbone angles, and solvent accessible surface area of proteins by iterative deep learning. Sci Rep 5(1):1–11

Wang S, Peng J, Ma J, Xu J (2016) Protein secondary structure prediction using deep convolutional neural fields. Sci Rep 6(1):1–11

Zhang B, Li J, Lü Q (2018) Prediction of 8-state protein secondary structures by a novel deep learning architecture. BMC Bioinform 19(1):293–302

Giulini M, Potestio R (2019) A deep learning approach to the structural analysis of proteins. Interface Focus 9(3):201–210

Asgari E, Poerner N, McHardy A, Mofrad M (2019) DeepPrime2Sec: deep learning for protein secondary structure prediction from the primary sequences. Bioinformatics 21(20):1–8. https://doi.org/10.1101/705426

Li Z, Yu Y (2016) Protein secondary structure prediction using cascaded convolutional and recurrent neural networks. In: International joint conference on artificial intelligence (IJCAI), pp 160–176

Guo Y, Li W, Wang B, Liu H, Zhou D (2019) DeepACLSTM: deep asymmetric convolutional long short-term memory neural models for protein secondary structure prediction. BMC Bioinform 20(1):341–352

Mirabello C, Wallner B (2019) rawMSA: end-to-end deep learning using raw multiple sequence alignments. PLoS ONE 14(8):1–15

Makhlouf MA (2018) Deep learning for prediction of protein-protein interaction. Egypt Comput Sci J 42(3):1–14

Adhikari B, Hou J, Cheng J (2018) Protein contact prediction by integrating deep multiple sequence alignments, coevolution, and machine learning. Proteins Struct Funct Bioinform 86:84–96

Zhou J, Wang H, Zhao Z, Xu R, Lu Q (2018) CNNH_PSS: protein 8-class secondary structure prediction by a convolutional neural network with the highway. BMC Bioinform 19(4):99–109

Ji S, Oruç T, Mead L, Rehman MF, Thomas CM, Butterworth S, Winn PJ (2019) DeepCDpred: inter-residue distance and contact prediction for improved prediction of protein structure. PLoS ONE 14(1):1–15

Dietterich TG (2000) Ensemble methods in machine learning. In: International workshop on multiple classifier systems. Springer, Berlin, Heidelberg, pp 1–15

Liu Y, Yang C, Gao Z, Yao Y (2018) Ensemble deep kernel learning with application to quality prediction in industrial polymerization processes. Chemom Intell Lab Syst 174:15–21

Liu Y, Fan Y, Chen J (2017) Flame images for oxygen content prediction of combustion systems using DBN. Energy Fuels 31(8):8776–8783

He X, Ji J, Liu K, Gao Z, Liu Y (2019) Soft sensing of silicon content via bagging local semi-supervised models. Sensors 19(17):38–41

Liu Y, Zhang Z, Chen J (2015) Ensemble local kernel learning for online prediction of distributed product outputs in chemical processes. Chem Eng Sci 137:140–151

Rose PW, Prlić A, Bi C, Bluhm WF, Christie CH, Dutta S, Young J (2015) The RCSB protein data bank: views of structural biology for basic and applied research and education. Nucleic Acids Res 43(1):345–356

Kabsch W, Sander C (1983) Dictionary of protein secondary structure: pattern recognition of hydrogen-bonded and geometrical features. Biopolymers 22(12):2577–2637

Karchin R (2003) Evaluating local structure alphabets for protein structure prediction. Doctoral dissertation, University of California, Santa Cruz

Engh RA, Huber R (1991) Accurate bond and angle parameters for X-ray protein structure refinement. Acta Crystallogr A 47(4):392–400

Almalawi A, AlGhamdi R, Fahad A (2017) Investigate the use of anchor-text and of query-document similarity scores to predict the performance of search engine. Int J Adv Comput Sci Appl 8(11):320–332

Koehl P, Levitt M (1999) A brighter future for protein structure prediction. Nat Struct Biol 6:108–111

Zhu J, Zou H, Rosset S, Hastie T (2009) Multi-class adaboost. Stat Interface 2(3):349–360

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Günzel H, Albrecht J, Lehner W (1999) Data mining in a multidimensional environment. Advances in Databases and Information Systems. Springer, Berlin/Heidelberg, pp 191–204

Wood JM (2007) Understanding and computing Cohen's kappa: a tutorial. WebPsychEmpiricist. ID: 141840274

Acknowledgment

This work was supported by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, under grant No. (DF-520-611-1441). The authors, therefore, gratefully acknowledge DSR technical and financial support.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 Experimental set up

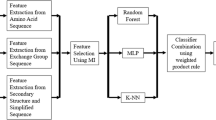

A computational framework has been proposed for prediction of secondary structure of proteins. The approaches employed to construct the computational framework are: supervised learning, simple deep learning, and deep learning with ensemble techniques. The steps of the proposed computational framework are given below:

1. Preparation of dataset.
2. Cleansing of dataset.
3. Construction of deep neural network.
   - Feed-forward neural network
   - Backpropagation
4. Deep neural network with ensemble techniques.
   - Bagging
   - AdaBoost
5. Training.
6. Testing.
   - Cross-validation
   - Blind test
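The Bagging entry in step 4 and the blind test in step 6 can be illustrated with a minimal sketch. It is not the authors' implementation: a nearest-centroid classifier stands in for the deep neural network, synthetic three-class Gaussian data stands in for Q3-labelled residues, and every function name (`fit_centroids`, `predict_centroids`, `bagging_fit`, `bagging_predict`, `make_split`) is hypothetical. AdaBoost, which reweights examples between rounds, is not shown.

```python
import numpy as np

def fit_centroids(X, y, n_classes):
    """Toy base learner: one mean vector per class (stand-in for a deep network)."""
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])

def predict_centroids(centroids, X):
    """Assign each sample to the class with the nearest centroid."""
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def bagging_fit(X, y, n_classes, n_models=11, seed=0):
    """Train each base learner on a bootstrap resample of the training set."""
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(X), size=len(X))  # sample with replacement
        models.append(fit_centroids(X[idx], y[idx], n_classes))
    return models

def bagging_predict(models, X, n_classes):
    """Combine the base learners by majority vote."""
    votes = np.stack([predict_centroids(m, X) for m in models])
    counts = np.stack([(votes == c).sum(axis=0) for c in range(n_classes)])
    return counts.argmax(axis=0)

# Synthetic stand-in data: three classes, as in a Q3 labelling (assumption).
rng = np.random.default_rng(42)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])

def make_split(n_per_class):
    X = np.concatenate([c + rng.normal(size=(n_per_class, 2)) for c in centers])
    y = np.repeat(np.arange(3), n_per_class)
    return X, y

X_train, y_train = make_split(100)
X_blind, y_blind = make_split(50)  # never used for training: the blind test

models = bagging_fit(X_train, y_train, n_classes=3)
blind_accuracy = (bagging_predict(models, X_blind, 3) == y_blind).mean()
print(f"blind-test accuracy: {blind_accuracy:.3f}")
```

An odd number of base learners is used here only to reduce ties in the vote; the study's actual ensemble size is not stated in this section.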

A multilayer feed-forward artificial deep neural network is applied and trained with stochastic gradient descent (SGD). SGD is a drastic simplification of ordinary gradient descent: instead of computing the gradient of the empirical risk $E_n(f_w)$ exactly, each iteration estimates this gradient from a single randomly picked example [53]. A deep neural network containing two layers of 100 nodes each is trained with the rectifier activation function.
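The single-example gradient estimate can be seen in a small sketch. For brevity it fits a linear least-squares model rather than the deep network; the function name `sgd_linear`, the learning rate, the step count, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def sgd_linear(X, y, lr=0.01, n_steps=5000, seed=0):
    """Minimise squared error with one randomly picked example per update."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        i = rng.integers(len(X))  # pick a single example at random
        # Gradient of 0.5 * (x_i . w - y_i)^2, used as an estimate of the full gradient
        grad = (X[i] @ w - y[i]) * X[i]
        w -= lr * grad
    return w

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w  # noise-free toy targets

w_hat = sgd_linear(X, y)
print(w_hat)
```

Each update touches one row of `X`, so its cost does not depend on the size of the training set, which is the point of the simplification.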

Advanced features such as adaptive learning rate, rate annealing, momentum training, dropout, and L1 or L2 regularization enable high predictive accuracy. Each compute node trains a copy of the global model parameters on its local data with multi-threading (asynchronously) and contributes periodically to the global model via model averaging across the network.

A multilayer deep neural network can distort the input space to make the classes of data (examples of which are on the red and blue lines) linearly separable. Note how a regular grid (shown on the left) in input space is also transformed (shown in the middle panel) by hidden units.

The chain rule of derivatives tells how two small effects (that of a small change of x on y, and that of y on z) are composed. A small change Δx in x is first transformed into a small change Δy in y by multiplication by ∂y/∂x. Similarly, the change Δy creates a change Δz in z through multiplication by ∂z/∂y. Substituting one equation into the other gives the chain rule of derivatives: Δx is turned into Δz through multiplication by the product of ∂y/∂x and ∂z/∂y.
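Written out, the two small-change relations and their composition are:

$$
\Delta y = \frac{\partial y}{\partial x}\,\Delta x, \qquad
\Delta z = \frac{\partial z}{\partial y}\,\Delta y
\quad\Longrightarrow\quad
\Delta z = \frac{\partial z}{\partial y}\,\frac{\partial y}{\partial x}\,\Delta x .
$$

As an illustrative check (the functions are chosen here, not taken from the study), let $y = 3x$ and $z = y^2$. At $x = 1$, $\partial y/\partial x = 3$ and $\partial z/\partial y = 2y = 6$, so the product is $18$, which agrees with differentiating $z = 9x^2$ directly to get $18x = 18$.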

See Fig.

The equations used for computing the forward pass in a deep neural net with two hidden layers and one output layer, each constituting a module through which one can backpropagate gradients, are shown in Fig. 2. At each layer, the total input z to each unit is computed first; it is a weighted sum of the outputs of the units in the layer below. Then a nonlinear function f(.) is applied to z to get the output of the unit. For simplicity, bias terms are omitted in Fig. 2. The nonlinear functions used in neural networks include the rectified linear unit (ReLU), f(z) = max(0, z), which has been commonly used in recent years [7] (see Fig. 3).
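A forward pass of this kind can be sketched in a few lines of NumPy. The layer sizes are assumptions: 20 inputs and 8 outputs are chosen only for illustration (8 matching the number of Q8 classes), and 100 units per hidden layer follows the network size mentioned earlier. The output layer is left linear, which is also an assumption, and the names `relu` and `forward` are hypothetical.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: f(z) = max(0, z), applied element-wise."""
    return np.maximum(0.0, z)

def forward(x, weights):
    """Forward pass without bias terms.

    At each hidden layer the total input z is a weighted sum of the outputs
    of the layer below, and the unit output is f(z). The last weight matrix
    produces the (linear) output layer.
    """
    activation = x
    for W in weights[:-1]:
        z = W @ activation
        activation = relu(z)
    return weights[-1] @ activation

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 20, 100, 8  # illustrative sizes (assumptions)
weights = [
    rng.normal(scale=0.1, size=(n_hidden, n_in)),
    rng.normal(scale=0.1, size=(n_hidden, n_hidden)),
    rng.normal(scale=0.1, size=(n_out, n_hidden)),
]

x = rng.normal(size=n_in)
scores = forward(x, weights)
print(scores.shape)
```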

The equations used for computing the backward pass are shown in Fig. 3. At each hidden layer, the error derivative with respect to the output of each unit is computed as a weighted sum of the error derivatives with respect to the total inputs to the units in the layer above. The error derivative with respect to the output is then converted into the error derivative with respect to the input by multiplying it by the gradient of f(z). At the output layer, the error derivative with respect to the output of a unit is computed by differentiating the cost function [7].
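The backward pass can be sketched for a small network with two ReLU hidden layers and no bias terms. The squared-error cost, the linear output layer, the tiny layer sizes, and the name `forward_backward` are assumptions made for illustration; the study's actual cost function is not given in this section.

```python
import numpy as np

def forward_backward(x, target, W1, W2, W3):
    """Return the loss and the gradients for the three weight matrices."""
    # Forward pass, keeping the total inputs z for use in the backward pass
    z1 = W1 @ x
    h1 = np.maximum(0.0, z1)
    z2 = W2 @ h1
    h2 = np.maximum(0.0, z2)
    out = W3 @ h2  # linear output layer (assumption)
    loss = 0.5 * np.sum((out - target) ** 2)

    # Output layer: differentiate the cost with respect to the output
    d_out = out - target
    # Hidden layer 2: weighted sum of the derivatives from the layer above ...
    d_h2 = W3.T @ d_out
    # ... then multiply by the gradient of f(z), which is 1 where z > 0 for ReLU
    d_z2 = d_h2 * (z2 > 0)
    # Hidden layer 1: same two steps
    d_h1 = W2.T @ d_z2
    d_z1 = d_h1 * (z1 > 0)

    # Gradient for each weight matrix: (derivative w.r.t. z) outer (input to the layer)
    grads = (np.outer(d_z1, x), np.outer(d_z2, h1), np.outer(d_out, h2))
    return loss, grads

rng = np.random.default_rng(0)
x = rng.normal(size=4)
target = rng.normal(size=3)
W1 = rng.normal(size=(5, 4))
W2 = rng.normal(size=(5, 5))
W3 = rng.normal(size=(3, 5))

loss, (g1, g2, g3) = forward_backward(x, target, W1, W2, W3)
print(loss, g1.shape, g2.shape, g3.shape)
```

A finite-difference comparison on a single weight is a convenient way to confirm that gradients computed this way match the numerical slope of the loss.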

About this article

Cite this article

AlGhamdi, R., Aziz, A., Alshehri, M. et al. Deep learning model with ensemble techniques to compute the secondary structure of proteins. J Supercomput 77, 5104–5119 (2021). https://doi.org/10.1007/s11227-020-03467-9

DOI: https://doi.org/10.1007/s11227-020-03467-9