Abstract

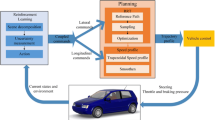

To address the vehicle-model tracking error and over-dependence problems in traditional path planning for intelligent driving vehicles, a path planning method based on deep reinforcement learning is proposed. First, an abstract model of the real environment is extracted; within it, a deep reinforcement learning end-to-end strategy (DRL-ETE) is combined with a vehicle dynamics model to train a reinforcement learning model that approaches optimal intelligent driving. Second, the real-scene problem is transferred to the virtual abstract model through a model transfer strategy, and the control and trajectory sequences are computed from the trained deep reinforcement learning model in that environment. Finally, the optimal trajectory sequence is selected according to an evaluation function in the real environment. The experience replay mechanism of the Deep Q-Network (DQN) algorithm stores samples in first-in, first-out (FIFO) order and samples them uniformly for later replay training, so experience replay is inefficient. These two problems slow the vehicle's progress toward the target and its route finding. In addition, the greedy strategy leaves the explored map information incomplete. To address these issues, the IDQNPER algorithm model is proposed. When samples are stored, they are assigned weights and fed to the network in priority order for training. Meanwhile, important data sequences are retained in the experience replay buffer, and sequences with high similarity are removed. The total reward is about 10% higher than that of the original Deep Q-Network, which indicates that the intelligent driving vehicle reaches target points more accurately.
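The storage and sampling idea described above (weighted samples, priority-driven training, removal of highly similar sequences) can be illustrated with a minimal sketch. This is not the authors' IDQNPER implementation. It assumes proportional prioritization in the style of Schaul et al., and the class name `PrioritizedReplayBuffer`, the `min_distance` similarity threshold, and the "same action, nearly identical state" duplicate test are all hypothetical choices made only for illustration. Importance-sampling corrections are omitted for brevity.

```python
import random
from dataclasses import dataclass


@dataclass
class Transition:
    state: tuple
    action: int
    reward: float
    next_state: tuple
    done: bool


class PrioritizedReplayBuffer:
    """Proportional prioritized replay with a simple near-duplicate filter."""

    def __init__(self, capacity, alpha=0.6, min_distance=1e-3, eps=1e-5):
        self.capacity = capacity
        self.alpha = alpha                # how strongly priority skews sampling
        self.min_distance = min_distance  # hypothetical similarity threshold
        self.eps = eps                    # keeps every priority strictly positive
        self.items = []                   # stored transitions
        self.priorities = []              # one priority per stored transition

    def _is_duplicate(self, t):
        # Hypothetical similarity test: same action and nearly identical state.
        for other in self.items:
            if other.action != t.action:
                continue
            dist = max(
                (abs(a - b) for a, b in zip(other.state, t.state)),
                default=0.0,
            )
            if dist < self.min_distance:
                return True
        return False

    def add(self, t, td_error):
        """Store t unless it is a near-duplicate; return True if stored."""
        if self._is_duplicate(t):
            return False
        priority = (abs(td_error) + self.eps) ** self.alpha
        if len(self.items) >= self.capacity:
            # Evict the lowest-priority stored sample instead of the oldest (FIFO).
            idx = min(range(len(self.priorities)), key=self.priorities.__getitem__)
            self.items.pop(idx)
            self.priorities.pop(idx)
        self.items.append(t)
        self.priorities.append(priority)
        return True

    def sample(self, batch_size, rng=random):
        # Sample with replacement, with probability proportional to priority.
        return rng.choices(self.items, weights=self.priorities, k=batch_size)
```

In this sketch a transition with a large temporal-difference error is drawn more often than one the network already predicts well, and a full buffer discards its least informative entry rather than its oldest one.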
To further realize autonomous decision-making for intelligent driving vehicles and to reduce the heavy reliance on map information in the traditional manually designed planning framework, an end-to-end path planning method based on deep reinforcement learning theory is proposed. It maps sensor information directly to action instructions, which are then issued to the vehicle. First, a CNN and an LSTM are used to process radar and camera information. Then, after comparing the advantages of the DQN, Double DQN, Dueling DQN and PER algorithms, the IDQNPER algorithm is used to train the automatic path planning of intelligent driving vehicles. Finally, simulation and verification experiments are carried out in a static obstacle environment. The test results show that the IDQNPER algorithm adapts to intelligent vehicles in different environments. The method can handle continuous input states and generate a continuous control sequence for steering-angle control, which reduces the lateral tracking error. At the same time, experience replay improves the generalization performance of the model and reduces the over-dependence problem.
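For orientation, the algorithms compared above differ mainly in how the learning target, the value decomposition, and the sampling distribution are defined. The standard forms from the cited literature are summarized below. The notation is an assumption, since the abstract defines none of it: $\theta$ are the online network parameters, $\theta^{-}$ the target network parameters, $\gamma$ the discount factor, $p_i$ the priority of sample $i$, $N$ the buffer size, and $\alpha$, $\beta$ the prioritization and importance-sampling exponents. The abstract does not state how IDQNPER combines or modifies these.

```latex
% DQN target
y^{\mathrm{DQN}} = r + \gamma \max_{a'} Q(s', a'; \theta^{-})

% Double DQN target: action chosen by the online network, evaluated by the target network
y^{\mathrm{Double}} = r + \gamma \, Q\bigl(s', \arg\max_{a'} Q(s', a'; \theta); \theta^{-}\bigr)

% Dueling DQN: state value plus mean-centred advantage
Q(s, a) = V(s) + A(s, a) - \frac{1}{|\mathcal{A}|} \sum_{a'} A(s, a')

% Prioritized experience replay: sampling probability and importance-sampling weight
P(i) = \frac{p_i^{\alpha}}{\sum_k p_k^{\alpha}}, \qquad
w_i = \bigl(N \cdot P(i)\bigr)^{-\beta}
```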

Acknowledgements

This paper is supported by the Dongguan Social Science and Technology Development Project in 2020 (2020507156694), the Special Fund for Science and Technology Innovation Strategy of Guangdong Province in 2021 (special fund for climbing plan) (pdjh2021a0943), the 2020 School-level Quality Engineering of Dongguan Polytechnic (JGYB202010), the 2019 School-level Research Fund Key Project of Dongguan Polytechnic (2019a17), and the Science and Technology Research Project of the Department of Education of Jiangxi Province under Grant GJJ181511.

About this article

Cite this article

Li, J., Chen, Y., Zhao, X. et al. An improved DQN path planning algorithm. J Supercomput 78, 616–639 (2022). https://doi.org/10.1007/s11227-021-03878-2

DOI: https://doi.org/10.1007/s11227-021-03878-2