Abstract

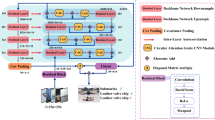

Ship detection in Synthetic Aperture Radar (SAR) images is challenging because ships appear at random orientations and the radar signal gives them a discrete appearance. In this paper, we introduce a novel unsupervised domain adaptation framework for ship detection in SAR images that employs context-preserving region-based contrastive learning. The framework improves SAR ship detection by learning from both a labeled optical remote sensing image domain and an unlabeled SAR image domain. Additionally, we propose a pseudo-feature generation network that generates pseudo-domain samples to augment the pseudo-features. Specifically, we refine the pseudo-features by computing a region-based contrastive loss on features extracted from the object region and the background region, which captures the contextual information needed for SAR ship detection. Extensive experiments and visualizations show that our method outperforms state-of-the-art methods and generalizes well.
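To make the idea of a region-based contrastive loss concrete, the following is a minimal sketch and not the formulation used in this paper. It assumes an InfoNCE-style objective in which features pooled from object regions are pulled together and features pooled from background regions serve as negatives. The function name `region_contrastive_loss`, the `temperature` parameter, and the toy feature vectors are all hypothetical and chosen only for illustration.

```python
import math


def _normalize(vec):
    """Scale a feature vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def _dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def region_contrastive_loss(obj_feats, bg_feats, temperature=0.1):
    """Assumed InfoNCE-style loss (illustrative, not the paper's definition).

    Each object-region feature is an anchor, every other object-region
    feature is a positive, and all background-region features are negatives.
    """
    obj = [_normalize(v) for v in obj_feats]
    bg = [_normalize(v) for v in bg_feats]
    losses = []
    for i, anchor in enumerate(obj):
        neg_logits = [_dot(anchor, b) / temperature for b in bg]
        for j, positive in enumerate(obj):
            if i == j:
                continue
            pos_logit = _dot(anchor, positive) / temperature
            logits = [pos_logit] + neg_logits
            # Numerically stable log-sum-exp over positive and negatives.
            peak = max(logits)
            log_denom = peak + math.log(sum(math.exp(l - peak) for l in logits))
            losses.append(log_denom - pos_logit)
    if not losses:
        raise ValueError("need at least two object-region features")
    return sum(losses) / len(losses)


# Toy 2-D features: object and background clusters well separated ...
separated = region_contrastive_loss(
    [[1.0, 0.0], [1.0, 0.1]], [[0.0, 1.0], [0.1, 1.0]]
)
# ... versus object features that each resemble a background feature.
mixed = region_contrastive_loss(
    [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.1], [0.1, 1.0]]
)
```

Under these assumptions the loss is small when object features cluster away from the background and large when they are entangled with it, which is the behavior a context-aware refinement step would aim for.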

Acknowledgements

This work was supported in part by the Fundamental Research Funds for the Central Universities (No. 2021ZY86), the Natural Science Foundation of China (NSFC) (No. 61703046), and the open fund of Science and Technology on Complex Electronic System Simulation Laboratory (No. 614201004012103).

About this article

Cite this article

Zhang, T., Lou, X., Wang, H. et al. Context-Preserving Region-Based Contrastive Learning Framework for Ship Detection in SAR. J Sign Process Syst 95, 3–12 (2023). https://doi.org/10.1007/s11265-022-01799-8

DOI: https://doi.org/10.1007/s11265-022-01799-8