Abstract

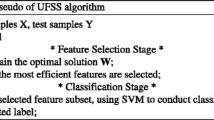

Feature selection is an important preprocessing step for dealing with high-dimensional data. In this paper, we propose a novel unsupervised feature selection method that embeds a subspace learning regularization (i.e., principal component analysis (PCA)) into the sparse feature selection framework. Specifically, we select informative features via the sparse learning framework while simultaneously preserving the principal components (i.e., the directions of maximal variance) of the data, thereby improving the interpretability of the feature selection model. Furthermore, we propose an effective optimization algorithm to solve the proposed objective function, which converges quickly to a stable optimal solution. Compared with five state-of-the-art unsupervised feature selection methods on six benchmark and real-world datasets, our proposed method achieved the best classification performance.
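The abstract does not state the objective function, so the following is only an illustrative sketch of the general idea under explicit assumptions, not the authors' formulation or algorithm. It assumes a self-representation loss ||X − XW||²_F with an ℓ2,1 row-sparsity penalty on W, so that features whose rows of W have large norms are the ones selected. It also stands in for the PCA embedding with a hypothetical term β||W − PPᵀ||²_F, which pulls W towards the projection onto the top-k principal directions P of the centred data. The function name `sparse_self_rep_pca_select`, the parameters `alpha`, `beta`, `k`, and the iteratively reweighted solver are all assumptions made for illustration.

```python
import numpy as np


def sparse_self_rep_pca_select(X, n_select, alpha=1.0, beta=1.0, k=2,
                               n_iter=50, eps=1e-8):
    """Rank features with a sparse self-representation model (sketch).

    Hypothetical objective, chosen for illustration only:
        min_W ||X - X W||_F^2 + alpha * ||W||_{2,1} + beta * ||W - P P^T||_F^2
    where P (d x k) holds the top-k principal directions of the centred data.
    """
    X = np.asarray(X, dtype=float)
    X = X - X.mean(axis=0)                  # centre columns before PCA
    d = X.shape[1]

    # Top-k principal directions from the SVD of the centred data.
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    P = Vt[:k].T                            # d x k, orthonormal columns

    G = X.T @ X                             # d x d Gram matrix
    rhs = G + beta * (P @ P.T)              # right-hand side of the linear system
    D = np.eye(d)                           # reweighting matrix, uniform start

    for _ in range(n_iter):
        # Setting the gradient to zero (with D held fixed) gives
        # (G + alpha * D + beta * I) W = G + beta * P P^T.
        W = np.linalg.solve(G + alpha * D + beta * np.eye(d), rhs)
        row_norms = np.linalg.norm(W, axis=1)
        # Reweighting for the l2,1 norm: D_ii = 1 / (2 ||w^i||_2),
        # with eps guarding against division by zero.
        D = np.diag(1.0 / (2.0 * row_norms + eps))

    scores = np.linalg.norm(W, axis=1)      # one importance score per feature
    selected = np.argsort(scores)[::-1][:n_select]
    return selected, scores
```

For example, on data whose first three columns have much larger variance than the remaining ones, `sparse_self_rep_pca_select(X, n_select=3, k=3)` should return indices 0, 1 and 2 in some order. Each pass solves a ridge-like linear system whose row penalties grow for rows that are already small, which drives the rows of uninformative features towards zero.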

Acknowledgments

This work was supported in part by the China Key Research Program (Grant No: 2016YFB1000905), the China 973 Program (Grant No: 2013CB329404), the National Natural Science Foundation of China (Grants No: 61573270, 81460274, 81760324 and 61672177), the Guangxi Natural Science Foundation (Grant No: 2015GXNSFCB139011), the Guangxi High Institutions Program of Introducing 100 High-Level Overseas Talents, the Guangxi Collaborative Innovation Center of Multi-Source Information Integration and Intelligent Processing, the Research Fund of Guangxi Key Lab of MIMS (16-A-01-01 and 16-A-01-02), the Guangxi Key Laboratory of Multimedia Communications and Network Technology, and the Innovation Project of Guangxi Graduate Education (YCSW2017039).

Additional information

This article belongs to the Topical Collection: Special Issue on Deep Mining Big Social Data

Guest Editors: Xiaofeng Zhu, Gerard Sanroma, Jilian Zhang, and Brent C. Munsell

About this article

Cite this article

Zhu, Y., Zhang, X., Wang, R. et al. Self-representation and PCA embedding for unsupervised feature selection. World Wide Web 21, 1675–1688 (2018). https://doi.org/10.1007/s11280-017-0497-2

DOI: https://doi.org/10.1007/s11280-017-0497-2