Abstract

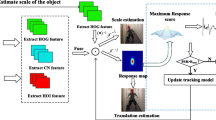

Discriminative correlation filter (DCF) tracking algorithms have received continued attention owing to their high efficiency. However, existing DCF trackers inevitably introduce cyclic repetitions (boundary effects) in learning and detection, which can lead to severe drift under significant appearance variations caused by occlusion, deformation and motion blur. In this paper, we propose a dilated-aware discriminative correlation filter framework for visual tracking, which fully exploits the multi-scale receptive contextual information of the correlation filter to mitigate the impact of unwanted boundaries and model degradation. While keeping the filtering structure intact, our method adopts a simple formulation that applies a Kronecker product to the discriminative correlation filter, so that multiple dilation factors perceive multi-level spatial receptive maps of the object. The framework learns a reliable response map through residual learning over multiple correlation filters with different dilation factors. Experimental results on a recent comprehensive tracking benchmark demonstrate that the proposed method performs favorably, both qualitatively and quantitatively, against several state-of-the-art algorithms.
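The abstract describes the pipeline only at a high level, so the following is a minimal, dependency-free sketch of its ingredients under stated assumptions, not the authors' implementation. A 1-D toy signal stands in for an image patch; the base filter uses the single-channel MOSSE closed form rather than the paper's own formulation; dilation is realized as a Kronecker product with a one-hot vector, which inserts d-1 zeros between filter taps as in dilated convolutions; and the "residual" fusion is a hypothetical weighted sum of the base response and the dilated responses. All function names, the dilation factors (2, 3) and the weight `alpha = 0.1` are illustrative assumptions.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform (keeps the sketch dependency-free)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spec):
    """Inverse of dft()."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def gaussian_label(n, sigma=2.0):
    """Desired response g: a circular Gaussian peaked at index 0."""
    return [math.exp(-min(t, n - t) ** 2 / (2 * sigma ** 2)) for t in range(n)]


def train_dcf(x, g, lam=1e-2):
    """MOSSE-style closed form: conj(H) = G * conj(X) / (X * conj(X) + lam)."""
    X, G = dft(x), dft(g)
    return [Gk * Xk.conjugate() / (abs(Xk) ** 2 + lam) for Xk, Gk in zip(X, G)]


def base_response(h_conj, z):
    """Response map r = IDFT(conj(H) * Z); its argmax estimates the target shift."""
    Z = dft(z)
    return [v.real for v in idft([a * b for a, b in zip(h_conj, Z)])]


def kron_dilate(h, d):
    """Dilate a 1-D filter by factor d: h kron [1, 0, ..., 0] inserts d-1 zeros between taps."""
    e1 = [1.0] + [0.0] * (d - 1)
    return [hi * ej for hi in h for ej in e1]


def dilated_response(h, z, d):
    """Circular convolution of z with the Kronecker-dilated spatial filter."""
    hd, n = kron_dilate(h, d), len(z)
    return [sum(hd[m] * z[(t - m) % n] for m in range(len(hd))) for t in range(n)]


def fused_response(h_conj, z, factors=(2, 3), alpha=0.1):
    """Hypothetical residual fusion: base response plus weighted dilated responses."""
    h = [v.real for v in idft(h_conj)]  # spatial-domain filter (real for real x and even g)
    fused = base_response(h_conj, z)
    for d in factors:
        fused = [f + alpha * r for f, r in zip(fused, dilated_response(h, z, d))]
    return fused


if __name__ == "__main__":
    rng = random.Random(0)
    n, shift = 32, 5
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # training patch
    z = [x[(t - shift) % n] for t in range(n)]      # circularly shifted test patch
    h_conj = train_dcf(x, gaussian_label(n))
    r = base_response(h_conj, z)
    print("base peak:", max(range(n), key=r.__getitem__))
    print("fused map length:", len(fused_response(h_conj, z)))
```

Because every term is a circular convolution, the fused map is shift-equivariant: shifting the patch shifts the whole response map. With `d = 1` the dilated branch reproduces the base response exactly. Whether the dilated terms sharpen or blur the peak depends on the fusion weights, which this sketch simply fixes by hand.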

Acknowledgements

This work is sponsored by the National Natural Science Foundation of China (61501259), the China Postdoctoral Science Foundation (2016M591891), the Natural Science Foundation of Jiangsu Province (BK20140874, BK20150864), the Leading Initiative for Excellent Young Researcher (LEADER) of the Ministry of Education, Culture, Sports, Science and Technology-Japan (16809746), Grants-in-Aid for Scientific Research of JSPS (17K14694), and the Research Fund of the Chinese Academy of Sciences (No. MGE2015KG02).

Additional information

This article belongs to the Topical Collection: Special Issue on Deep vs. Shallow: Learning for Emerging Web-scale Data Computing and Applications

Guest Editors: Jingkuan Song, Shuqiang Jiang, Elisa Ricci, and Zi Huang

About this article

Cite this article

Xu, G., Zhu, H., Deng, L. et al. Dilated-aware discriminative correlation filter for visual tracking. World Wide Web 22, 791–805 (2019). https://doi.org/10.1007/s11280-018-0555-4

DOI: https://doi.org/10.1007/s11280-018-0555-4