Abstract

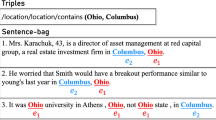

Distantly supervised relation extraction, which extracts heterogeneous relations from text without manual annotation, has been widely used in decision-making tasks such as question answering and recommendation systems. However, existing distantly supervised methods inevitably suffer from the wrong-labelling problem. They typically use an attention mechanism to select valid instances while ignoring the core of relation extraction, i.e., entity pairs and relations. To address this problem, we incorporate enhanced representations into a gated graph convolutional network to enrich background information, and we further improve the attention mechanism so that it focuses on the most relevant relation. Specifically, in the proposed framework, 1) we introduce a triplet-enhanced word representation method that captures not only position information but also entity-pair and implicit relation information in a sentence; 2) we use a Gated Rectified Linear Units (GRLU) module to integrate triplet information into each instance, thereby enhancing sentence-level features; and 3) we employ sentence-relation joint attention over multiple instances and multiple relations, which is expected to dynamically reduce the weights of noisy instances and enhance the bag representation. Extensive experiments on two popular datasets show that our model achieves significant improvement over all baseline methods.
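The abstract names the gating and attention components without giving their equations. The sketch below illustrates those two ideas in dependency-free Python under stated assumptions; it is not the authors' implementation. It assumes that the gated unit follows the gated-linear-unit pattern, using a ReLU content path multiplied by a sigmoid gate that is conditioned on a triplet vector `t`. It also assumes that the joint attention computes one softmax distribution over the bag's sentences for each relation query, using plain dot-product scores. The names `grlu` and `joint_attention`, the weight matrices `W`, `V`, `U`, and the scoring function are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(m, v):
    # m is a list of rows; returns the matrix-vector product m @ v.
    return [dot(row, v) for row in m]


def grlu(x, t, W, V, U):
    """Hypothetical gated unit: relu(W x) * sigmoid(V x + U t).

    x is a sentence feature vector and t a triplet (entity pair + relation)
    vector. The ReLU path carries content; the sigmoid gate, conditioned on
    the triplet vector, decides how much of each feature passes through.
    """
    content = [max(0.0, z) for z in matvec(W, x)]
    gate = [sigmoid(a + b) for a, b in zip(matvec(V, x), matvec(U, t))]
    return [c * g for c, g in zip(content, gate)]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def joint_attention(instances, relations):
    """Relation-aware attention over a bag of sentence vectors (assumed form).

    For every relation query r, weight each instance s_i by
    alpha_{i,r} = softmax_i(s_i . r) and build a relation-specific bag vector
    b_r = sum_i alpha_{i,r} * s_i. Returns (weights, bags, logits), where
    logits[r] = b_r . r scores how well the bag supports relation r.
    """
    weights, bags, logits = [], [], []
    for r in relations:
        alpha = softmax([dot(s, r) for s in instances])
        bag = [sum(a * s[k] for a, s in zip(alpha, instances))
               for k in range(len(r))]
        weights.append(alpha)
        bags.append(bag)
        logits.append(dot(bag, r))
    return weights, bags, logits
```

For example, `joint_attention([[1.0, 0.0], [0.0, 1.0]], [[3.0, 0.0], [0.0, 3.0]])` gives each relation about 0.95 of its weight on the sentence aligned with it and about 0.05 on the other. A sentence that is irrelevant to a relation therefore contributes little to that relation's bag vector, which is the noise-down-weighting effect the abstract describes.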


Acknowledgements

This work is jointly supported by the National Natural Science Foundation of China (61877043 and 61877044).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

This article belongs to the Topical Collection: Special Issue on Decision Making in Heterogeneous Network Data Scenarios and Applications. Guest Editors: Jianxin Li, Chengfei Liu, Ziyu Guan, and Yinghui Wu.

About this article

Cite this article

Ying, X., Meng, Z., Zhao, M. et al. Gated graph convolutional network with enhanced representation and joint attention for distant supervised heterogeneous relation extraction. World Wide Web 26, 401–420 (2023). https://doi.org/10.1007/s11280-021-00979-z

DOI: https://doi.org/10.1007/s11280-021-00979-z