Abstract

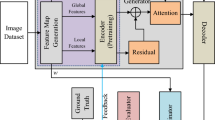

Recently, adversarial attacks against Deep Neural Networks (DNNs) have drawn keen interest from researchers. Universal adversarial perturbations make attacks possible in cases where image-dependent adversarial examples, which are known to be very successful on image classification, cannot be generated. Previous work is mainly optimization-based: it takes a long time to search for perturbations, and the resulting adversarial examples look unnatural and are easy to defend against. Moreover, there is little research on universal adversarial perturbations against vision-language systems. In this work, we construct a GenerAtive Network for Universal Adversarial Perturbations, dubbed UAP-GAN, to study the robustness of image classification and image captioning systems, which are based on convolutional neural networks alone and on convolutional plus recurrent neural networks, respectively. Specifically, UAP-GAN adapts the GAN framework to compute universal adversarial perturbations from a fixed random noise input. Compared with existing methods, UAP-GAN has four main characteristics: fast generation, a high attack success rate, adversarial examples that stay close to natural images, and resistance to defenses. In addition, the model can produce image-agnostic perturbations for both targeted and non-targeted attacks, depending on the selected scenario. Comprehensive experiments on MSCOCO and ImageNet demonstrate clear superiority over existing work and show that the UAP-GAN architecture can effectively fool image captioning and classification models without requiring the framework to be redesigned for different tasks.
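
To make "image-agnostic perturbation from a fixed random noise" concrete, the following is a minimal sketch and not the UAP-GAN architecture itself. It assumes a toy linear generator with untrained random weights, flattened images with pixel values in [0, 1], and a hypothetical L-infinity budget `epsilon = 0.04`; the helper names are illustrative and none of these choices come from the paper. It only shows that one noise vector yields one perturbation, which is then added unchanged to every image.

```python
import math
import random


def make_generator(noise_dim, num_pixels, seed=0):
    """Toy stand-in for a generator: a random linear map (hypothetical, untrained)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
            for _ in range(num_pixels)]


def universal_perturbation(weights, noise, epsilon):
    """Map the fixed noise vector to a single perturbation with |delta_i| <= epsilon."""
    return [epsilon * math.tanh(sum(w * z for w, z in zip(row, noise)))
            for row in weights]


def apply_perturbation(image, delta):
    """Add the shared perturbation and clip pixels back into [0, 1]."""
    return [min(1.0, max(0.0, p + d)) for p, d in zip(image, delta)]


rng = random.Random(42)
epsilon = 0.04                                    # assumed budget, not from the paper
noise = [rng.gauss(0.0, 1.0) for _ in range(8)]   # sampled once, then kept fixed
weights = make_generator(noise_dim=8, num_pixels=16)
delta = universal_perturbation(weights, noise, epsilon)

# The same delta is reused for every image: that is what makes it "universal".
images = [[rng.random() for _ in range(16)] for _ in range(3)]
adversarial = [apply_perturbation(img, delta) for img in images]
```

In a trained system the generator weights would be optimized against the target classifier or captioner, typically alongside a discriminator; here they are left random because only the data flow is being illustrated.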

Acknowledgements

This work was supported in part by the Sichuan Science and Technology Program, China (2020YJ0038), and the National Natural Science Foundation of China (U20B2063, 61976049).

Ethics declarations

Conflict of interests

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

This article belongs to the Topical Collection: Special Issue on Synthetic Media on the Web

Guest Editors: Huimin Lu, Xing Xu, Jože Guna, and Gautam Srivastava

Cite this article

Wang, Z., Yang, Y., Li, J. et al. Universal adversarial perturbations generative network. World Wide Web 25, 1725–1746 (2022). https://doi.org/10.1007/s11280-022-01058-7

DOI: https://doi.org/10.1007/s11280-022-01058-7