Abstract

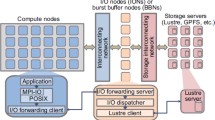

Modern High-Performance Computing (HPC) systems are adding extra layers to the memory and storage hierarchy, collectively called the deep memory and storage hierarchy (DMSH), to increase I/O performance. New hardware technologies, such as NVMe and SSD, have been introduced in burst buffer installations to reduce the pressure on external storage and to better serve the bursty I/O of modern systems. The DMSH has demonstrated its strength and potential in practice. However, each layer of the DMSH is an independent heterogeneous system, and data movement among more layers is significantly more complex even without considering heterogeneity. How to utilize the DMSH efficiently is an open research question for the HPC community. Further, accessing data with high throughput and low latency is more imperative than ever. Data prefetching is a well-known technique for hiding read latency: data is requested before it is needed so that it moves from a high-latency medium (e.g., disk) to a low-latency one (e.g., main memory). However, existing solutions do not consider the new deep memory and storage hierarchy, and they suffer from under-utilization of prefetching resources and unnecessary evictions. Additionally, existing approaches implement a client-pull model in which an understanding of the application's I/O behavior drives prefetching decisions. Moving towards exascale, where machines run multiple applications concurrently that access files in a workflow, a more data-centric approach can resolve challenges such as cache pollution and redundancy. In this paper, we present the design and implementation of Hermes: a new, heterogeneous-aware, multi-tiered, dynamic, and distributed I/O buffering system. Hermes enables, manages, supervises, and, in some sense, extends I/O buffering to fully integrate into the DMSH.
We introduce three novel data placement policies to efficiently utilize all layers, and we present three novel techniques to perform memory, metadata, and communication management in hierarchical buffering systems. Additionally, we demonstrate the benefits of a truly hierarchical data prefetcher that adopts a server-push approach to data prefetching. Our evaluation shows that, in addition to automatic data movement through the hierarchy, Hermes can significantly accelerate I/O and outperforms state-of-the-art buffering platforms by more than 2x. Lastly, results show 10%–35% performance gains over existing prefetchers and over 50% when compared to systems with no prefetching.
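
The general idea of prefetching described in the abstract, moving data from a high-latency tier to a low-latency one before it is requested, can be illustrated with a small sketch. This is not the Hermes prefetcher or any of its placement policies: it is a toy two-tier model with simple sequential readahead and LRU eviction, and the names `TieredReader`, `readahead`, and `sequential_misses` are hypothetical, introduced here only for illustration. A real prefetcher would also issue its fetches asynchronously; here they run inline for simplicity.

```python
from collections import OrderedDict


class TieredReader:
    """Toy two-tier reader: a slow backing store plus a small fast buffer.

    Illustrative only; it assumes integer block ids and sequential readahead.
    """

    def __init__(self, slow_store, fast_capacity, readahead=0):
        self.slow = slow_store          # block id -> bytes (high-latency tier)
        self.fast = OrderedDict()       # low-latency tier, kept in LRU order
        self.capacity = fast_capacity   # maximum number of blocks in the fast tier
        self.readahead = readahead      # blocks to fetch ahead of each read
        self.demand_misses = 0          # reads that had to wait on the slow tier

    def _load(self, block):
        """Copy one block into the fast tier, evicting least recently used blocks."""
        if block not in self.slow or block in self.fast:
            return
        self.fast[block] = self.slow[block]
        while len(self.fast) > self.capacity:
            self.fast.popitem(last=False)

    def read(self, block):
        """Return a block, counting a demand miss if it was not already buffered."""
        if block in self.fast:
            self.fast.move_to_end(block)    # mark as most recently used
        else:
            self.demand_misses += 1
            self._load(block)
        data = self.fast[block]             # raises KeyError for unknown blocks
        # Prefetch the next blocks so later sequential reads hit the fast tier.
        for ahead in range(block + 1, block + 1 + self.readahead):
            self._load(ahead)
        return data


def sequential_misses(num_blocks, fast_capacity, readahead):
    """Read blocks 0..num_blocks-1 in order and report the demand misses."""
    slow = {i: f"block-{i}".encode() for i in range(num_blocks)}
    reader = TieredReader(slow, fast_capacity, readahead)
    for i in range(num_blocks):
        assert reader.read(i) == slow[i]
    return reader.demand_misses
```

For eight sequential blocks and a four-block fast tier, `sequential_misses(8, 4, 0)` returns 8 (every read waits on the slow tier), while `sequential_misses(8, 4, 2)` returns 1 (only the first read does). The sketch makes decisions from the reader's own access stream, which corresponds to the client-pull model the abstract attributes to existing approaches rather than to the server-push model it proposes.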

Cite this article

Kougkas, A., Devarajan, H. & Sun, XH. I/O Acceleration via Multi-Tiered Data Buffering and Prefetching. J. Comput. Sci. Technol. 35, 92–120 (2020). https://doi.org/10.1007/s11390-020-9781-1

DOI: https://doi.org/10.1007/s11390-020-9781-1