Abstract

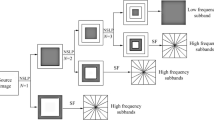

Medical image fusion aims to improve the accuracy of clinical diagnosis, so the fused image should preserve the salient features and details of the source images. This paper designs a novel fusion scheme for CT and MRI medical images based on convolutional neural networks (CNNs) and a dual-channel spiking cortical model (DCSCM). First, the non-subsampled shearlet transform (NSST) decomposes each source image into a low-frequency coefficient and a series of high-frequency coefficients. Second, the low-frequency coefficients are fused within a CNN framework, in which the weight map is generated from a series of feature maps and an adaptive selection rule. The high-frequency coefficients are then fused by the DCSCM, which takes the modified average gradient of the high-frequency coefficients as its input stimulus. Finally, the fused image is reconstructed by the inverse NSST. Experimental results indicate that the proposed scheme performs well in both subjective visual assessment and objective evaluation, and that it outperforms other typical current methods in detail retention and visual effect.
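To illustrate the high-frequency step, the Python sketch below computes a local average gradient for each high-frequency coefficient array. It feeds that gradient to a spiking cortical model (SCM) as the stimulus and then keeps, per pixel, the coefficient whose neuron fires more often. Several assumptions apply. The abstract does not define the "modified" average gradient, so a plain windowed average gradient stands in for it. The dual-channel model is replaced by two ordinary single-channel SCMs compared by firing counts, which is a common PCNN/SCM selection rule and not the authors' DCSCM. The linking weights, the constants `f`, `g`, `h`, the iteration count and the window size are illustrative values, not the paper's settings. All function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import convolve, uniform_filter


def local_average_gradient(coef, size=3):
    """Windowed average gradient of a coefficient array.

    Assumption: mean of sqrt((gx^2 + gy^2) / 2) over a size x size window
    stands in for the paper's (undefined here) "modified" average gradient.
    """
    coef = np.asarray(coef, dtype=float)
    gx = np.zeros_like(coef)
    gy = np.zeros_like(coef)
    gx[:, :-1] = np.diff(coef, axis=1)  # horizontal forward differences
    gy[:-1, :] = np.diff(coef, axis=0)  # vertical forward differences
    grad = np.sqrt((gx ** 2 + gy ** 2) / 2.0)
    return uniform_filter(grad, size=size, mode="reflect")


# Illustrative 3x3 linking weights (assumed values, not taken from the paper).
LINK_WEIGHTS = np.array([[0.1091, 0.1409, 0.1091],
                         [0.1409, 0.0,    0.1409],
                         [0.1091, 0.1409, 0.1091]])


def scm_fire_counts(stimulus, iterations=40, f=0.8, g=0.7, h=20.0):
    """Run a single-channel spiking cortical model; count firings per pixel.

    U[n] = f*U[n-1] + S * (W conv Y[n-1]) + S
    E[n] = g*E[n-1] + h*Y[n-1]
    Y[n] = 1 if U[n] > E[n] else 0
    """
    S = np.asarray(stimulus, dtype=float)
    U = np.zeros_like(S)
    E = np.ones_like(S)  # assumed initial threshold
    Y = np.zeros_like(S)
    counts = np.zeros_like(S)
    for _ in range(iterations):
        U = f * U + S * convolve(Y, LINK_WEIGHTS, mode="constant") + S
        E = g * E + h * Y          # uses the previous iteration's output Y
        Y = (U > E).astype(float)  # fire where activity exceeds the threshold
        counts += Y
    return counts


def fuse_high(coef_a, coef_b, **scm_kwargs):
    """Keep, per pixel, the coefficient whose SCM neuron fired more often.

    Assumption: two independent SCMs replace the paper's dual-channel model.
    """
    coef_a = np.asarray(coef_a, dtype=float)
    coef_b = np.asarray(coef_b, dtype=float)
    sa = local_average_gradient(coef_a)
    sb = local_average_gradient(coef_b)
    scale = max(sa.max(), sb.max()) or 1.0  # joint normalisation to [0, 1]
    ta = scm_fire_counts(sa / scale, **scm_kwargs)
    tb = scm_fire_counts(sb / scale, **scm_kwargs)
    return np.where(ta >= tb, coef_a, coef_b)
```

The two SCMs here run independently, so this sketch does not capture the coupling between channels that a dual-channel model provides. It only shows how a gradient-based stimulus can drive a firing-count decision between two coefficient arrays.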

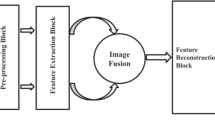

A schematic diagram of the CT and MRI medical image fusion framework using convolutional neural networks and a dual-channel spiking cortical model.
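The framework in the schematic follows a decompose, fuse, reconstruct pattern. The Python sketch below reproduces only that control flow and uses clearly labeled stand-ins for each stage. A single-level Gaussian low-pass/residual split replaces the NSST, which is multiscale and multidirectional. A local-energy choose-max map replaces the CNN-generated weight map for the low-frequency band. A maximum-absolute rule replaces the DCSCM for the high-frequency bands. All function names and parameters (`sigma`, `size`) are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, uniform_filter


def decompose(img, sigma=2.0):
    """Stand-in for NSST: one low-pass band and one residual (detail) band."""
    img = np.asarray(img, dtype=float)
    low = gaussian_filter(img, sigma=sigma)
    return low, [img - low]


def reconstruct(low, highs):
    """Inverse of decompose(): the bands simply sum back to the image."""
    return low + sum(highs)


def low_weight_map(low_a, low_b, size=7):
    """Stand-in for the CNN weight map: 1 where A has more local energy, else 0."""
    energy_a = uniform_filter(low_a ** 2, size=size)
    energy_b = uniform_filter(low_b ** 2, size=size)
    return (energy_a >= energy_b).astype(float)


def fuse_high_bands(highs_a, highs_b):
    """Stand-in for DCSCM: keep the coefficient with the larger magnitude."""
    return [np.where(np.abs(ha) >= np.abs(hb), ha, hb)
            for ha, hb in zip(highs_a, highs_b)]


def fuse(ct, mri):
    """Decompose both sources, fuse each band, then reconstruct."""
    low_a, highs_a = decompose(ct)
    low_b, highs_b = decompose(mri)
    w = low_weight_map(low_a, low_b)
    low_f = w * low_a + (1.0 - w) * low_b  # weighted low-frequency fusion
    highs_f = fuse_high_bands(highs_a, highs_b)
    return reconstruct(low_f, highs_f)
```

The stand-in decomposition is exactly invertible because the bands sum back to the input. As a result, fusing an image with itself returns that image. This gives a quick sanity check for any replacement decomposition or fusion rule plugged into the same skeleton.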

Acknowledgements

The authors thank the editors and the anonymous reviewers for their careful work and valuable suggestions on this study.

Funding

This work was supported by the National Natural Science Foundation of China under Grants 61463052 and 61365001 and Yunnan Province University Key Laboratory Construction Plan Funding, China.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

About this article

Cite this article

Hou, R., Zhou, D., Nie, R. et al. Brain CT and MRI medical image fusion using convolutional neural networks and a dual-channel spiking cortical model. Med Biol Eng Comput 57, 887–900 (2019). https://doi.org/10.1007/s11517-018-1935-8

DOI: https://doi.org/10.1007/s11517-018-1935-8