Abstract

Purpose

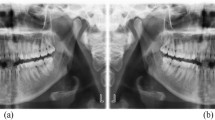

Dental radiography represents 13% of all radiological diagnostic imaging. Eliminating the need for manual classification of digital intraoral radiographs could be especially impactful in terms of time savings and metadata quality. However, automating the task can be challenging due to the limited variation and possible overlap of the depicted anatomy. This study attempted to use neural networks to automate the classification of anatomical regions in intraoral radiographs among 22 unique anatomical classes.

Methods

Thirty-six literature-based neural network models were systematically developed and trained with full supervision and three different data augmentation strategies. Only libre software and limited computational resources were utilized. The training and validation datasets consisted of 15,254 intraoral periapical and bite-wing radiographs, previously obtained for diagnostic purposes. All models were then compared on a separate dataset with respect to their classification performance. Top-1 accuracy, area under the curve (AUC) and F1-score were used as performance metrics. Pairwise comparisons among all models were performed with McNemar’s test.
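As an illustration of the evaluation described above, the following is a minimal sketch of top-1 accuracy, F1-score and McNemar's test for one pair of classifiers. It is not the authors' code. The function names are hypothetical, macro averaging of the F1-score is an assumption (the abstract does not state the averaging scheme), and the exact binomial form of McNemar's test is an assumption as well (the abstract does not say which variant was used).

```python
from math import comb


def top1_accuracy(y_true, y_pred):
    """Fraction of images whose predicted class equals the reference class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 (macro averaging is an assumption)."""
    scores = []
    for cls in sorted(set(y_true)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def mcnemar_exact(y_true, pred_a, pred_b):
    """Exact two-sided McNemar test on paired predictions of two classifiers.

    Returns (b, c, p_value), where b counts images only model A classified
    correctly and c counts images only model B classified correctly.
    """
    triples = list(zip(y_true, pred_a, pred_b))
    b = sum(a == t and p != t for t, a, p in triples)
    c = sum(a != t and p == t for t, a, p in triples)
    n = b + c
    if n == 0:
        # No discordant pairs: the two models are indistinguishable here.
        return b, c, 1.0
    k = min(b, c)
    # Under H0 the discordant pairs split as Binomial(n, 0.5);
    # the two-sided p-value doubles the smaller tail, capped at 1.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)
```

With 36 models there are 36 × 35 / 2 = 630 model pairs, so in practice a function like `mcnemar_exact` would be called once per pair and the resulting p-values adjusted for multiple comparisons; the abstract does not state which adjustment, if any, was applied.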

Results

Cochran's Q test indicated a statistically significant difference in classification performance across all models (p < 0.001). Post hoc analysis showed that while most models performed adequately on the task, advanced deep learning architectures such as VGG16, MobileNetV2 and InceptionResNetV2 were more robust to image distortions than those in the baseline group (MLPs, 3-block convolutional models). Advanced models exhibited classification accuracy ranging from 81 to 89%, F1-score between 0.71 and 0.86 and AUC of 0.86 to 0.94.
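For readers unfamiliar with the omnibus test mentioned above, the following is a minimal sketch of Cochran's Q computed on a binary correctness matrix (one row per test image, one column per model, 1 meaning the model classified that image correctly). It is an illustration under stated assumptions, not the authors' implementation: the function names `cochran_q` and `chi2_sf` are hypothetical, and the p-value uses the standard chi-square approximation with k − 1 degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable (closed-form series)."""
    if x <= 0:
        return 1.0
    half = x / 2
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term = math.exp(-half)
        total = term
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return total
    # Odd df: normal two-sided tail plus a finite correction series.
    total = math.erfc(math.sqrt(half))
    term = math.sqrt(2 * x / math.pi) * math.exp(-half)
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return total


def cochran_q(correct):
    """Cochran's Q for a list of rows of 0/1 outcomes (rows: images, cols: models).

    Returns (Q, degrees_of_freedom, p_value).
    """
    k = len(correct[0])
    col_totals = [sum(row[j] for row in correct) for j in range(k)]
    row_totals = [sum(row) for row in correct]
    n = sum(row_totals)
    denom = k * n - sum(r * r for r in row_totals)
    if denom == 0:
        # Every image was classified identically by all models.
        return 0.0, k - 1, 1.0
    q = (k - 1) * (k * sum(c * c for c in col_totals) - n * n) / denom
    return q, k - 1, chi2_sf(q, k - 1)
```

A significant Q only indicates that at least one model differs from the others; identifying which models differ requires post hoc pairwise comparisons.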

Conclusions

According to our findings, automated classification of anatomical classes in digital intraoral radiographs is feasible with an expected top-1 classification accuracy of almost 90%, even for images with significant distortions or overlapping anatomy. Model architecture, data augmentation strategies, the use of pooling and normalization layers, and model capacity were identified as the factors contributing most to classification performance.

Availability of data and material

Not publicly available.

Code availability

Code will be published at the following link: https://github.com/nkyventidis/intraoral-radiograph-classifier.

Funding

This study has received no external funding.

Author information

Contributions

Kyventidis Nikolaos was involved in conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing (original draft) and visualization. Angelopoulos Christos was involved in resources, data curation, writing (review and editing), supervision and project administration.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest, financial or otherwise.

About this article

Cite this article

Kyventidis, N., Angelopoulos, C. Intraoral radiograph anatomical region classification using neural networks. Int J CARS 16, 447–455 (2021). https://doi.org/10.1007/s11548-021-02321-4

DOI: https://doi.org/10.1007/s11548-021-02321-4