Abstract

Purpose

Precise polyp detection and localisation are essential for colonoscopic diagnosis. Statistical machine learning with a large-scale data set can contribute to a computer-aided diagnosis system that prevents polyps from being overlooked or mislocalised during colonoscopy. We propose new visual explanation methods for a well-trained object detector that achieves fast and accurate polyp detection with a bounding box, towards precise automated polyp localisation.

Method

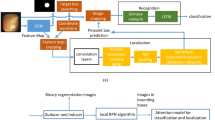

We refine gradient-weighted class activation mapping (Grad-CAM) so that it highlights more accurately the patterns that are important when a convolutional neural network processes an image. By extending the refined mapping to multiscale processing, we define object activation mapping, which highlights important object patterns in an image for a detection task. Finally, we define polyp activation mapping, which achieves precise polyp localisation by integrating adaptive local thresholding into object activation mapping. We experimentally evaluate the proposed visual explanation methods on four publicly available databases.
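The three steps above can be sketched in NumPy under explicit assumptions. `grad_cam` follows the standard Grad-CAM recipe: each channel weight is the spatial mean of the gradients, and a ReLU is applied to the weighted sum of feature maps. Its `positive_only` flag, which clips gradients to their positive part before averaging, is our hypothetical reading of the "positive-gradient" refinement and is not the paper's exact formula. `fuse_multiscale` (normalise each scale's map, resize by nearest neighbour, average) and `polyp_mask` (Otsu's threshold computed only inside a detected bounding box) are likewise illustrative stand-ins for object and polyp activation mapping. All function names are hypothetical.

```python
import numpy as np


def grad_cam(activations, gradients, positive_only=False):
    """Grad-CAM-style map for one convolutional layer.

    activations, gradients: arrays of shape (K, H, W) holding the feature maps
    A^k and the gradients dy/dA^k of the target score y.
    positive_only=True is an ASSUMED variant that keeps only positive gradients
    before averaging; it is illustrative, not the paper's exact formula.
    """
    g = np.maximum(gradients, 0.0) if positive_only else gradients
    weights = g.mean(axis=(1, 2))                     # alpha_k, one weight per channel
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k -> (H, W)
    return np.maximum(cam, 0.0)                       # ReLU keeps positive evidence only


def upsample_nearest(cam, out_h, out_w):
    """Nearest-neighbour resize of a 2-D map to (out_h, out_w)."""
    rows = np.arange(out_h) * cam.shape[0] // out_h
    cols = np.arange(out_w) * cam.shape[1] // out_w
    return cam[np.ix_(rows, cols)]


def fuse_multiscale(cams, out_h, out_w):
    """HYPOTHETICAL multiscale fusion: scale each map to [0, 1], resize, then average."""
    fused = np.zeros((out_h, out_w))
    for cam in cams:
        peak = cam.max()
        norm = cam / peak if peak > 0 else cam
        fused += upsample_nearest(norm, out_h, out_w)
    return fused / len(cams)


def otsu_threshold(values, bins=256):
    """Otsu's threshold: maximise between-class variance over histogram splits."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                    # pixel count in the lower class
    w1 = w0[-1] - w0                        # pixel count in the upper class
    s0 = np.cumsum(hist * centers)
    m0 = s0 / np.maximum(w0, 1)             # lower-class mean
    m1 = (s0[-1] - s0) / np.maximum(w1, 1)  # upper-class mean
    between = w0 * w1 * (m0 - m1) ** 2      # proportional to between-class variance
    return edges[np.argmax(between) + 1]    # upper edge of the best lower-class bin


def polyp_mask(fused, box):
    """ASSUMED local thresholding: apply Otsu only inside box = (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    mask = np.zeros(fused.shape, dtype=bool)
    patch = fused[y0:y1, x0:x1]
    mask[y0:y1, x0:x1] = patch >= otsu_threshold(patch)
    return mask
```

In use, one would compute `grad_cam` at each detection scale, pass the maps to `fuse_multiscale` at image resolution, and call `polyp_mask` once per predicted box. Nearest-neighbour resizing keeps the sketch dependency-free; bilinear interpolation would give smoother maps.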

Results

The refined mapping visualises important patterns in each convolutional layer more accurately than the original gradient-weighted class activation mapping. Object activation mapping clearly visualises the patterns in colonoscopic images that are important for polyp detection. Polyp activation mapping localises the detected polyps in the ETIS-Larib, CVC-Clinic and Kvasir-SEG databases with mean Dice scores of 0.76, 0.72 and 0.72, respectively.
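The localisation results are reported as mean Dice scores, where Dice = 2|P ∩ G| / (|P| + |G|) for a predicted mask P and a ground-truth mask G. A minimal NumPy sketch of the metric follows. Averaging per image and scoring two empty masks as 1.0 are our assumptions; the paper's exact aggregation is not given here.

```python
import numpy as np


def dice_score(pred, truth):
    """Dice coefficient 2|P & G| / (|P| + |G|) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0  # ASSUMED convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, truth).sum() / total)


def mean_dice(pairs):
    """Mean of per-image Dice scores over (prediction, ground truth) pairs."""
    return float(np.mean([dice_score(p, g) for p, g in pairs]))
```

For example, a 4-pixel prediction that shares 2 pixels with a 4-pixel ground truth scores 2 × 2 / (4 + 4) = 0.5.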

Conclusions

We developed new visual explanation methods for a convolutional neural network by refining and extending gradient-weighted class activation mapping. Experimental results demonstrated the validity of the proposed methods, showing accurate visualisation of important patterns and accurate localisation of polyps in colonoscopic images. The proposed visual explanation methods are useful for interpreting and applying a trained polyp detector.

Acknowledgements

This study was funded by grants from AMED (19hs0110006h0003), JSPS MEXT KAKENHI (26108006, 17H00867, 17K20099), and the JSPS Bilateral Joint Research Project.

Ethics declarations

Conflict of interest

Kudo SE received a scholarship grant from TAIHO Pharmaceutical Co. Ltd., CHUGAI Pharmaceutical Co. Ltd. and Bayer Yakuhin Ltd. Misawa M received lecture fees from Olympus. Mori Y received consultant and lecture fees from Olympus. Mori K is supported by Cybernet Systems and Olympus (research grant) in this work and by NTT outside the submitted work. The other authors have no conflicts of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the ethics committee of Nagoya University (No. 357) and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. Informed consent was obtained from all individual participants in this study through an opt-out procedure.

About this article

Cite this article

Itoh, H., Misawa, M., Mori, Y. et al. Positive-gradient-weighted object activation mapping: visual explanation of object detector towards precise colorectal-polyp localisation. Int J CARS 17, 2051–2063 (2022). https://doi.org/10.1007/s11548-022-02696-y

DOI: https://doi.org/10.1007/s11548-022-02696-y