Abstract

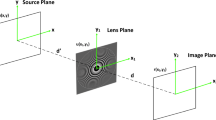

Autofocus algorithms commonly rely on an image clarity evaluation function to assess image sharpness, and methods such as the peak search algorithm and the zoom tracking algorithm are built on the resulting evaluation value. This paper proposes an Improved Feedback Zoom Tracking method (IFZT) based on defocus difference, which uses the amount of defocus difference as the measure of image blur. IFZT modifies the revision criterion for the feedback revision point and removes the relatively complex PID algorithm. It determines the direction of the in-focus motor position from the amount of defocus difference and uses the depth from defocus method to estimate the ideal focus position. The formulas for the defocus difference and the ideal focus position are derived. Finally, the algorithm was tested on an integrated camera. The experimental results show that IFZT, using the amount of defocus, achieves good tracking accuracy and a clear improvement over other zoom tracking algorithms, and that its overall performance meets the requirements of a zoom tracking algorithm.

Acknowledgements

This project is supported by the National Natural Science Foundation of China (Grant No. 52075483).

Appendix

Derivation of formula 9: \(I^{\prime}(x,y)=I(x,y)+\frac{1}{4}\sigma^{2}\nabla^{2}I^{\prime}(x,y)\)
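
Formula 9 can be sanity-checked numerically on a cubic test image. The sketch below is a minimal pure-Python check and not the authors' code: the test image `focused`, the sampled Gaussian kernel `gaussian_psf`, and all helper names are hypothetical, and \(\sigma^{2}\) is assumed to be the second moment \(\sum w\,(\zeta^{2}+\eta^{2})\) of the normalized kernel, which is the convention under which \(h_{2,0}=h_{0,2}=\sigma^{2}/2\).

```python
import math


def focused(x, y):
    """Hypothetical cubic test image I(x, y); the coefficients are arbitrary."""
    return (2.0 + 0.5 * x - 1.5 * y
            + 0.3 * x * x + 0.2 * x * y - 0.4 * y * y
            + 0.05 * x ** 3 - 0.02 * x * x * y + 0.01 * y ** 3)


def gaussian_psf(radius=6, s=1.5):
    """Sampled, normalized, symmetric PSF stored as {(dx, dy): weight}."""
    raw = {(dx, dy): math.exp(-(dx * dx + dy * dy) / (2.0 * s * s))
           for dx in range(-radius, radius + 1)
           for dy in range(-radius, radius + 1)}
    total = sum(raw.values())
    return {offset: w / total for offset, w in raw.items()}


def blurred(x, y, psf):
    """Blurred image I'(x, y): discrete convolution of I with the PSF."""
    return sum(w * focused(x - dx, y - dy) for (dx, dy), w in psf.items())


def laplacian(f, x, y, d=1.0):
    """Five-point Laplacian; exact for cubics up to rounding error."""
    return (f(x + d, y) + f(x - d, y) + f(x, y + d) + f(x, y - d)
            - 4.0 * f(x, y)) / (d * d)


def formula9_residual(x, y, psf):
    """I' - (I + sigma^2 / 4 * Laplacian(I')); should be close to zero."""
    # Assumed convention: sigma^2 is the second moment of the kernel.
    sigma2 = sum(w * (dx * dx + dy * dy) for (dx, dy), w in psf.items())

    def blurred_image(u, v):
        return blurred(u, v, psf)

    rhs = focused(x, y) + 0.25 * sigma2 * laplacian(blurred_image, x, y)
    return blurred_image(x, y) - rhs


if __name__ == "__main__":
    psf = gaussian_psf()
    print(formula9_residual(3.0, -2.0, psf))
```

The residual stays at rounding-error level only because the test image is a polynomial of order three; for a general image the relation is an approximation whose quality depends on how well a local cubic fits the image.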

In our application, we usually use a third-order polynomial to approximate the image.

where A1, A2, B1, and B2 are the image boundaries.

Using the forward S transform, we have the blurred image,

Assuming h(x, y) is circularly symmetric, it can be shown that

For any point spread function,

From Eqs. (25) and (27), \(I^{\prime}(x,y)\) becomes

From Eq. (26) we have \(h_{0,1}=h_{1,0}=h_{1,1}=0\) and \(h_{0,2}=h_{2,0}\), and using the inverse S transform, we obtain:

From the definition of moments and \(\sigma\), we have \(h_{2,0}=h_{0,2}=\sigma^{2}/2\), so

Note that \(I^{\prime 2,0}+I^{\prime 0,2}=\nabla^{2}I^{\prime}\) corresponds to the Laplacian operation on the image \(I^{\prime}(x,y)\); thus we obtain:
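
The chain of steps described in this appendix can be written out as follows. This is a hedged sketch that follows the spatial-domain S-transform approach of Subbarao and Surya (1994), not the authors' exact typesetting: the coefficient symbols \(a_{m,n}\), the integration over the whole plane, and the labels (S1) to (S5) are assumptions introduced here and do not correspond to the paper's own equation numbers. \(I^{m,n}\) denotes \(\partial^{m+n} I / \partial x^{m}\partial y^{n}\).

```latex
\begin{align}
% Cubic model of the focused image (a_{m,n} is assumed notation)
I(x,y) &= \sum_{m=0}^{3}\sum_{n=0}^{3-m} a_{m,n}\,x^{m}y^{n} \tag{S1}\\
% Moments of the point spread function h
h_{m,n} &= \iint x^{m}y^{n}\,h(x,y)\,dx\,dy \tag{S2}\\
% Forward S transform: the blurred image as a finite series in the moments
I'(x,y) &= \sum_{0\le m+n\le 3}\frac{(-1)^{m+n}}{m!\,n!}\,I^{m,n}(x,y)\,h_{m,n} \tag{S3}\\
% Circular symmetry removes every moment with an odd index; h_{0,0}=1
I'(x,y) &= I(x,y)+\frac{h_{2,0}}{2}\left(I^{2,0}(x,y)+I^{0,2}(x,y)\right) \tag{S4}\\
% Inverse S transform, then h_{2,0}=h_{0,2}=\sigma^{2}/2
I'(x,y) &= I(x,y)+\frac{h_{2,0}}{2}\,\nabla^{2}I'(x,y)
         = I(x,y)+\frac{1}{4}\sigma^{2}\,\nabla^{2}I'(x,y) \tag{S5}
\end{align}
```

The step from (S4) to (S5) uses the cubic model once more: applying \(\nabla^{2}\) to (S4) gives \(\nabla^{2}I^{\prime}=\nabla^{2}I+\frac{h_{2,0}}{2}\nabla^{2}\nabla^{2}I\), and the last term vanishes because all fourth-order derivatives of a third-order polynomial are zero, so \(\nabla^{2}I\) can be replaced by \(\nabla^{2}I^{\prime}\).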

Cite this article

Wang, X., Zhu, Y. & Ji, J. A zoom tracking algorithm based on defocus difference. J Real-Time Image Proc 18, 2417–2428 (2021). https://doi.org/10.1007/s11554-021-01133-8

DOI: https://doi.org/10.1007/s11554-021-01133-8