Abstract

Following Hager and Zhang (SIAM J Optim 16:170–192, 2005), Yu (Nonlinear self-scaling conjugate gradient methods for large-scale optimization problems. Doctoral thesis, Sun Yat-Sen University, 2007) and Yuan (Optim Lett 3:11–21, 2009) recently proposed modified PRP conjugate gradient methods that generate sufficient descent directions independently of the line search used. To establish global convergence of their algorithms, however, they must assume that the stepsize is bounded away from zero. In this paper, we modify these methods slightly so that the modified methods retain the sufficient descent property. We prove that the proposed methods are globally convergent without requiring a positive lower bound on the stepsize. Some numerical results are also reported.
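The abstract does not state the update formulas, so the following is only a minimal sketch of what "sufficient descent independently of the line search" can look like. It assumes a PRP parameter with a Hager–Zhang-style correction term, beta = g_new·y / ||g_old||^2 − mu · ||y||^2 · (g_new·d_old) / ||g_old||^4 with y = g_new − g_old and mu > 1/4. This formula, the parameter `mu`, and the helper names `modified_prp_direction` and `descent_margin` are assumptions made for illustration and are not confirmed to be the updates used in the cited works or in this paper. For this assumed beta, the inequality u·v ≤ (||u||^2 + ||v||^2)/2 gives g_new·d_new ≤ −(1 − 1/(4·mu)) ||g_new||^2 for any stepsize, which is what the code checks.

```python
def dot(a, b):
    """Euclidean inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def modified_prp_direction(g_new, g_old, d_old, mu=1.0):
    """Return d_new = -g_new + beta * d_old for an assumed modified PRP beta.

    Assumed formula (illustrative only), with y = g_new - g_old and mu > 1/4:
        beta = g_new.y / ||g_old||^2 - mu * ||y||^2 * (g_new.d_old) / ||g_old||^4
    """
    if mu <= 0.25:
        raise ValueError("mu must exceed 1/4 for the descent bound to be useful")
    y = [a - b for a, b in zip(g_new, g_old)]
    gg_old = dot(g_old, g_old)  # must be nonzero: g_old is not a stationary point
    beta = dot(g_new, y) / gg_old - mu * dot(y, y) * dot(g_new, d_old) / gg_old ** 2
    return [-g + beta * d for g, d in zip(g_new, d_old)]


def descent_margin(g_new, d_new, mu=1.0):
    """Return g_new.d_new + (1 - 1/(4*mu)) * ||g_new||^2.

    A non-positive value means the sufficient descent condition
    g_new.d_new <= -(1 - 1/(4*mu)) * ||g_new||^2 holds.
    """
    c = 1.0 - 1.0 / (4.0 * mu)
    return dot(g_new, d_new) + c * dot(g_new, g_new)


if __name__ == "__main__":
    g_old = [1.0, 0.0]
    d_old = [-1.0, 0.0]  # first direction: steepest descent
    g_new = [0.5, 0.5]   # gradient at the next iterate; no line search condition assumed
    d_new = modified_prp_direction(g_new, g_old, d_old)
    print(d_new, descent_margin(g_new, d_new))
```

The direction is computed from gradients and the previous direction alone, so the descent bound does not depend on how the stepsize was chosen. The global convergence result described in the abstract is a separate matter and is not demonstrated by this sketch.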

References

Hestenes M.R., Stiefel E.L.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. Sect. B 49, 409–432 (1952)

Fletcher R., Reeves C.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Polak E., Ribiére G.: Note sur la convergence des méthodes de directions conjuguées. Rev. Fr. Inf. Rech. Opérationnelle 3e Année 16, 35–43 (1969)

Polyak B.T.: The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 9, 94–112 (1969)

Dai Y., Yuan Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177–182 (2000)

Al-Baali M.: Descent property and global convergence of the Fletcher–Reeves method with inexact line search. IMA J. Numer. Anal. 5, 121–124 (1985)

Dai Y., Yuan Y.: Convergence properties of the Fletcher–Reeves method. IMA J. Numer. Anal. 16(2), 155–164 (1996)

Gilbert J.C., Nocedal J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2, 21–42 (1992)

Grippo L., Lucidi S.: A globally convergent version of the Polak–Ribiére gradient method. Math. Program. 78, 375–391 (1997)

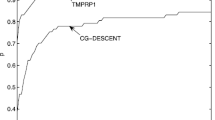

Hager W.W., Zhang H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170–192 (2005)

Hager W.W., Zhang H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2, 35–58 (2006)

Yu G.H.: Nonlinear self-scaling conjugate gradient methods for large-scale optimization problems. Doctoral thesis, Sun Yat-Sen University (2007)

Yuan G.L.: Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optim. Lett. 3, 11–21 (2009)

Zhang L., Zhou W., Li D.H.: Global convergence of a modified Fletcher–Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104, 561–572 (2006)

Zhang L., Zhou W., Li D.H.: A descent modified Polak–Ribiére–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26, 629–640 (2006)

Andrei N.: A Dai–Yuan conjugate gradient algorithm with sufficient descent and conjugacy conditions for unconstrained optimization. Appl. Math. Lett. 21, 165–171 (2008)

Andrei N.: A hybrid conjugate gradient algorithm for unconstrained optimization as a convex combination of Hestenes–Stiefel and Dai–Yuan. Stud. Inf. Control 17, 55–70 (2008)

Zhang J., Xiao Y., Wei Z.: Nonlinear conjugate gradient methods with sufficient descent condition for large-scale unconstrained optimization. Math. Probl. Eng. Article ID 243290, 16 p. doi:10.1155/2009/243290 (2009)

Bongartz K.E., Conn A.R., Gould N.I.M., Toint P.L.: CUTE: constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21, 123–160 (1995)

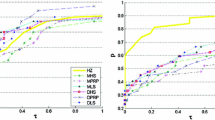

Dolan E.D., Moré J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Zoutendijk G.: Nonlinear programming computational methods. In: Abadie, J. (ed.) Integer and Nonlinear Programming, pp. 37–86. North-Holland, Amsterdam (1970)

Cite this article

Dai, Zf., Tian, BS. Global convergence of some modified PRP nonlinear conjugate gradient methods. Optim Lett 5, 615–630 (2011). https://doi.org/10.1007/s11590-010-0224-8

DOI: https://doi.org/10.1007/s11590-010-0224-8