Abstract

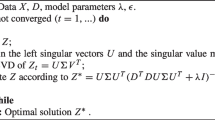

In recent years, dictionary learning has become a popular technique for sparse feature extraction. It represents each data sample as a sparse combination of the atoms (columns) of a dictionary matrix. The input data are usually contaminated by errors that degrade the quality of the learned dictionary and, consequently, of the sparse features. This effect is especially critical in applications with high-dimensional data, such as gene expression data. Several robust dictionary learning methods have therefore been investigated. In this study, we propose a novel robust dictionary learning algorithm, based on total least squares, that accounts for the inexactness of the data in the model. We show that the standard dictionary learning model and some robust dictionary learning models are particular cases of the proposed model. Results on various data sets also indicate that our method performs better than other dictionary learning methods on high-dimensional data.
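For readers unfamiliar with total least squares, the following is a minimal sketch of the classical SVD-based solution of an overdetermined system A x ≈ b in which both A and b may contain errors. It is not the dictionary learning algorithm proposed in the article; the function name `tls_solve` and the synthetic data are assumptions made purely for illustration.

```python
import numpy as np

def tls_solve(A, b):
    """Classical total least squares for A x ~ b (illustrative helper).

    Unlike ordinary least squares, which attributes all error to b,
    this treats both A and b as inexact and uses the SVD of [A | b].
    """
    n = A.shape[1]
    C = np.column_stack([A, b])                  # augmented matrix [A | b]
    _, _, Vt = np.linalg.svd(C, full_matrices=False)
    v = Vt[-1]                                   # right singular vector of the smallest singular value
    if abs(v[n]) < 1e-12:
        raise np.linalg.LinAlgError("generic TLS solution does not exist")
    return -v[:n] / v[n]

# Synthetic example (assumed data): noise is added to both A and b.
rng = np.random.default_rng(0)
A_true = rng.standard_normal((200, 3))
x_true = np.array([1.0, -2.0, 0.5])
b_true = A_true @ x_true

A_noisy = A_true + 0.05 * rng.standard_normal(A_true.shape)
b_noisy = b_true + 0.05 * rng.standard_normal(b_true.shape)

x_ls = np.linalg.lstsq(A_noisy, b_noisy, rcond=None)[0]  # ordinary least squares
x_tls = tls_solve(A_noisy, b_noisy)                      # total least squares
x_exact = tls_solve(A_true, b_true)                      # noise-free sanity check
```

On noise-free data the sketch recovers `x_true` up to rounding error. On the noisy data, `x_ls` and `x_tls` can be compared against `x_true` to see how the two error models differ.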

Cite this article

Parvasideh, P., Rezghi, M. A novel dictionary learning method based on total least squares approach with application in high dimensional biological data. Adv Data Anal Classif 15, 575–597 (2021). https://doi.org/10.1007/s11634-020-00417-4
