Abstract

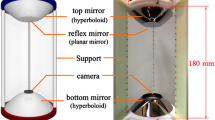

In recent years, omnidirectional view (OV) sensors have gained popularity in robotics. This growth is mainly due to the large field of view (FOV), spanning \(360^{\circ }\), that these sensors offer in a catadioptric configuration. The large FOV addresses several shortcomings of conventional perspective imaging sensors by allowing the surrounding environment to be monitored simultaneously in a single image. Feature detection is a fundamental component of visual robotics applications, enabling intelligent vision systems with advanced capabilities such as object, scene, and human detection, localisation, simultaneous localisation and mapping, and odometry. In this paper, the adaptation of visual detection algorithms to omnidirectional vision is reviewed by examining recent work and the underlying supporting mechanisms. State-of-the-art visual detection algorithms and important factors of OV sensors, such as hardware requirements, fundamental theory, cost, and usability, are also investigated in order to explain the adaptation involved. Finally, a case study on the OV mapping transform is presented, and possible directions for future research are discussed.
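At its simplest, an OV mapping transform resamples the annular image formed by the mirror into a rectangular panorama, with azimuth along the columns and radius along the rows. The sketch below illustrates only that generic polar-to-panorama idea and is not the authors' transform. It assumes the image centre `(cx, cy)` and the inner and outer radii of the usable annulus are already known (for example, from calibration). It also ignores the mirror profile and uses nearest-neighbour sampling. The function name `unwrap_omni` is hypothetical.

```python
import numpy as np


def unwrap_omni(img, cx, cy, r_min, r_max, out_w=360, out_h=None):
    """Resample an annular omnidirectional image into a rectangular panorama.

    Hypothetical helper: assumes the centre (cx, cy) and the annulus radii
    r_min < r_max are known. Uses nearest-neighbour sampling and applies no
    correction for the mirror profile. Works for greyscale (H, W) or colour
    (H, W, C) arrays.
    """
    if out_h is None:
        out_h = int(round(r_max - r_min))
    # One azimuth per output column, covering a full turn without repeating 0.
    theta = np.linspace(0.0, 2.0 * np.pi, out_w, endpoint=False)
    # One radius per output row; placing the outer rim on the top row is an
    # arbitrary choice here and depends on the mirror orientation in practice.
    radius = np.linspace(r_max, r_min, out_h)
    rr, tt = np.meshgrid(radius, theta, indexing="ij")  # shape (out_h, out_w)
    # Polar -> Cartesian source coordinates, rounded to the nearest pixel.
    xs = np.rint(cx + rr * np.cos(tt)).astype(int)
    ys = np.rint(cy + rr * np.sin(tt)).astype(int)
    # Keep samples inside the source image.
    xs = np.clip(xs, 0, img.shape[1] - 1)
    ys = np.clip(ys, 0, img.shape[0] - 1)
    return img[ys, xs]
```

For example, `unwrap_omni(img, 50, 50, 10, 40)` on a 100 × 100 image returns a 30 × 360 panorama. In practice, bilinear interpolation and a mirror-specific radial model would replace the linear radius sampling used here.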


Notes

A hybrid view sensor system is one in which feature matching is performed between two different sensors, one producing perspective view images and the other producing OV images.

Dioptric refers to systems based on the refraction of light, i.e. using lenses only.

Catadioptric refers to systems based on both the refraction and the reflection of light, i.e. using a combination of a mirror and a lens.

The hyperboloidal and paraboloidal catadioptric sensors are also known as the hyper-catadioptric and para-catadioptric sensors, respectively, owing to their widespread use.

Egomotion refers to the 3D motion of a camera.

The ground plane view is also known as the bird’s eye view.

A MATLAB [71] M-file for Linux that calculates the overlap error is publicly available at http://www.robots.ox.ac.uk/~vgg/research/affine/index.html.
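In the evaluation protocol of Mikolajczyk and Schmid, the overlap error is one minus the ratio of the intersection to the union of two detected regions. Before the ratio is computed, one region is mapped into the other image with the ground-truth homography. The sketch below computes only that ratio, and only for regions that have already been rasterised into boolean masks in a common frame. The homography step and the elliptical regions used in that protocol are omitted, and circles stand in for them. The helper names `circle_mask` and `overlap_error` are hypothetical and are not taken from the M-file mentioned above.

```python
import numpy as np


def circle_mask(shape, cx, cy, r):
    """Boolean mask of the pixels whose centres lie inside a circle."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    return (xs - cx) ** 2 + (ys - cy) ** 2 <= r ** 2


def overlap_error(mask_a, mask_b):
    """Overlap error 1 - |A ∩ B| / |A ∪ B| for two rasterised regions.

    Both masks are assumed to be in the same image frame already, i.e. any
    homography mapping has been applied beforehand.
    """
    inter = np.logical_and(mask_a, mask_b).sum()
    union = np.logical_or(mask_a, mask_b).sum()
    if union == 0:
        raise ValueError("both regions are empty")
    return 1.0 - inter / union
```

Identical regions give an error of 0 and disjoint regions give 1. A match is typically accepted when the error falls below a chosen threshold.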

The Linux binaries for SIFT, PCA-SIFT, and GLOH were obtained from http://www.robots.ox.ac.uk/~vgg/research/affine/index.html. The Linux binary for SURF was obtained from http://www.vision.ee.ethz.ch/~surf/.

To date, state-of-the-art visual detectors running on current hardware can easily exceed 15 frames per second.

References

Advanced Micro Devices, Inc.: AMD Stream (2012)

Rituerto, A., Puig, L., Guerrero, J.J.: Comparison of omnidirectional and conventional monocular systems for visual SLAM. In: The Proceedings of the 10th Workshop on Omnidirectional Vision (OMNIVIS) in Conjunction with Robotics: Science and Systems (RSS) (2010)

Andreasson, H., Treptow, A., Duckett, T.: Self-localization in non-stationary environments using omni-directional vision. Robot. Auton. Syst. 55, 541–551 (2007). doi:10.1016/j.robot.2007.02.002. http://dl.acm.org/citation.cfm?id=1247761.1248293

Arican, Z., Frossard, P.: Super-resolution from unregistered omnidirectional images. In: 19th International Conference on Pattern Recognition, ICPR 2008, pp. 1–4 (2008). doi:10.1109/ICPR.2008.4760988

Arican, Z., Frossard, P.: Scale-invariant features and polar descriptors in omnidirectional imaging. IEEE Trans. Image Process. 21(5), 2412–2423 (2012). doi:10.1109/TIP.2012.2185937

Baker, S., Nayar, S.K.: A theory of catadioptric image formation. In: Proceedings of the Sixth International Conference on Computer Vision, 1998, pp. 35–42. Bombay, India (1998). doi:10.1109/ICCV.1998.710698

Baker, S., Nayar, S.K.: A theory of single-viewpoint catadioptric image formation. Int. J. Comput. Vis. 35(2), 175–196 (1999)

Bay, H., Ess, A., Tuytelaars, T., Van Gool, L.: Speeded-up robust features (SURF). Comput. Vis. Image Underst. 110(3), 346–359 (2008). http://linkinghub.elsevier.com/retrieve/pii/S1077314207001555

Bay, H., Tuytelaars, T., Van Gool, L.: SURF: speeded up robust features. In: Leonardis, A., Bischof, H., Pinz, A. (eds.) Computer Vision ECCV 2006, Lecture Notes in Computer Science, vol. 3951, pp. 404–417. Springer, Berlin (2006). doi:10.1007/11744023_32

Bermúdez, J., Puig, L., Guerrero, J.J.: Line extraction in central hyper-catadioptric systems. In: Proceedings of the OMNIVIS—10th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras (2010)

Blaer, P., Allen, P.: Topological mobile robot localization using fast vision techniques. In: Proceedings of the IEEE International Conference on Robotics and Automation, ICRA ’02, vol. 1, pp. 1031–1036 (2002). doi:10.1109/ROBOT.2002.1013491

Brown, M., Lowe, D.G.: Invariant features from interest point groups. In: British Machine Vision Conference, pp. 656–665. Cardiff, Wales (2002)

Bülow, T.: Spherical diffusion for 3D surface smoothing. IEEE Trans. Pattern Anal. Mach. Intell. 26(12), 1650–1654 (2004). doi:10.1109/TPAMI.2004.129

Calonder, M., Lepetit, V., Strecha, C., Fua, P.: BRIEF: binary robust independent elementary features. In: Proceedings of the 11th European Conference on Computer Vision: Part IV, ECCV’10, pp. 778–792 (2010)

Chen, C.H., Yao, Y., Page, D., Abidi, B., Koschan, A., Abidi, M.: Heterogeneous fusion of omnidirectional and PTZ cameras for multiple object tracking. IEEE Trans. Circuits Syst. Video Technol. 18(8), 1052–1063 (2008). doi:10.1109/TCSVT.2008.928223

Chong, N.S., Kho, Y.H., Wong, M.L.D.: Closed form spherical omnidirectional image unwrapping. In: Proceedings of The IET Conference on Image Processing (IPR 2012), pp. 1–5. London, UK (2012a). doi:10.1049/cp.2012.0443

Chong, N.S., Kho, Y.H., Wong, M.L.D.: Detection of SIFT keypoints in spherical omnidirectional view sensor. Procedia Eng. 41, 90–96 (2012) (International Symposium on Robotics and Intelligent Sensors 2012 (IRIS 2012)). doi:10.1016/j.proeng.2012.07.147

Cruz-Mota, J., Bogdanova, I., Paquier, B., Bierlaire, M., Thiran, J.P.: Scale invariant feature transform on the sphere: theory and applications. Int. J. Comput. Vis. 98, 217–241 (2012). doi:10.1007/s11263-011-0505-4

Ding, Y., Xiao, J., Yu, J.: A theory of multi-perspective defocusing. In: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, CVPR ’11, pp. 217–224. IEEE Computer Society, Washington, DC, USA (2011). doi:10.1109/CVPR.2011.5995617

Duda, R.O., Hart, P.E.: Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 15(1), 11–15 (1972). doi:10.1145/361237.361242

Gaspar, J., Santos-Victor, J.: Visual path following with a catadioptric panoramic camera. In: Proceedings of the International Symposium on Intelligent Robotic Systems—SIRS’99. Coimbra, Portugal (1999). http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.33.3379

Geyer, C., Daniilidis, K.: Catadioptric camera calibration. In: The Proceedings of the Seventh IEEE International Conference on Computer Vision, vol. 1, pp. 398–404. Kerkyra, Greece (1999). doi:10.1109/ICCV.1999.791248

Geyer, C., Daniilidis, K.: A unifying theory for central panoramic systems and practical applications. In: Proceedings of the 6th European Conference on Computer Vision-Part II, ECCV ’00, pp. 445–461. London, UK (2000). http://dl.acm.org/citation.cfm?id=645314.649434

Geyer, C., Daniilidis, K.: Paracatadioptric camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 24(5), 687–695 (2002). doi:10.1109/34.1000241

Hamming, R.W.: Error detecting and error correcting codes. Bell Syst. Tech. J. 29(2), 147–160 (1950)

Harris, C., Stephens, M.: A combined corner and edge detector. In: Proceedings of Fourth Alvey Vision Conference, pp. 147–151. Manchester, UK (1988)

Hartley, R.I., Zisserman, A.: Multiple View Geometry in Computer Vision, 2nd edn. Cambridge University Press, Cambridge (2004)

Hecht, E.: Optics, 4th edn. Addison Wesley, Reading, MA (2001)

Hicks, R.A., Bajcsy, R.: Reflective surfaces as computational sensors. Image Vis. Comput. 19(11), 773–777 (2001). http://linkinghub.elsevier.com/retrieve/pii/S0262885600001049

Hong, J., Tan, X., Pinette, B., Weiss, R., Riseman, E.M.: Image-based homing. IEEE Control Syst. 12(1), 38–45 (1992)

Intel Corporation, Willow Garage, Itseez: OpenCV. Version 2.4.3 (2013)

Jeng, S.W., Tsai, W.H.: Using pano-mapping tables for unwarping of omni-images into panoramic and perspective-view images. IET Image Process. 1(2), 149–155 (2007). doi:10.1049/iet-ipr:20060201

Ke, Y., Sukthankar, R.: PCA-SIFT: a more distinctive representation for local image descriptors. In: Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2, pp. 506–513. Los Alamitos, CA, USA (2004). doi:10.1109/CVPR.2004.183

Koenderink, J.J.: The structure of images. Biol. Cybern. 50(5), 363–370 (1984). doi:10.1007/BF00336961

Lei, J., Du, X., Zhu, Y.F., Liu, J.L.: Unwrapping and stereo rectification for omnidirectional images. J. Zhejiang Univ. Sci. A 10(8), 1125–1139 (2009). http://www.springerlink.com/index/10.1631/jzus.A0820357

Li, W., Li, Y.: Overall well-focused catadioptric image acquisition with multifocal images: a model-based method. IEEE Trans. Image Process. 21(8), 3697–3706 (2012). doi:10.1109/TIP.2012.2195010

Li, W., Li, Y., Wu, Y.: A model based method for overall well focused catadioptric image acquisition with multi-focal images. In: Proceedings of the 13th International Conference on Computer Analysis of Images and Patterns, CAIP ’09, pp. 460–467. Springer, Berlin (2009). doi:10.1007/978-3-642-03767-2_56

Li, Y., Zhang, M., Lou, J., Wang, W.: Design of catadioptric omnidirectional imaging system for defocus deblurring. Acta Optica Sinica 32(9), 0911001 (2012). doi:10.3788/AOS201232.0911001

Lindeberg, T.: Scale-space theory: a basic tool for analysing structures at different scales. J. Appl. Stat. 21(2), 224–270 (1994)

Lourenco, M., Pedro, V., Barreto, J.: Localization in indoor environments by querying omnidirectional visual maps using perspective images. In: 2012 IEEE International Conference on Robotics and Automation (ICRA), pp. 2189–2195 (2012). doi:10.1109/ICRA.2012.6225134

Lowe, D.G.: Object recognition from local scale-invariant features. In: The Proceedings of the Seventh IEEE International Conference on Computer Vision, vol. 2, pp. 1150–1157 (1999)

Lowe, D.G.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60, 91–110 (2004). doi:10.1023/B:VISI.0000029664.99615.94

Mair, E., Hager, G., Burschka, D., Suppa, M., Hirzinger, G.: Adaptive and generic corner detection based on the accelerated segment test. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) Computer Vision ECCV 2010, Lecture Notes in Computer Science, vol. 6312, pp. 183–196. Springer, Berlin (2010). doi:10.1007/978-3-642-15552-9_14

Mikolajczyk, K., Schmid, C.: Scale and affine invariant interest point detectors. Int. J. Comput. Vis. 60(1), 63–86 (2004)

Mikolajczyk, K., Schmid, C.: A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 27, 1615–1630 (2005). doi:10.1109/TPAMI.2005.188. http://dl.acm.org/citation.cfm?id=1083822.1083989

Moravec, H.: Obstacle avoidance and navigation in the real world by a seeing robot rover. Technical Report CMU-RI-TR-80-03, Robotics Institute, Carnegie Mellon University; Doctoral Dissertation, Stanford University (1980)

Murillo, A.C., Campo, P., Kosecka, J., Guerrero, J.J.: Gist vocabularies in omnidirectional images for appearance based mapping and localization. In: Proceedings of the OMNIVIS—10th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras (2010)

Nayar, S.K.: Catadioptric omnidirectional camera. In: Proceedings of the 1997 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 482–488 (1997). doi:10.1109/CVPR.1997.609369

Nene, S.A., Nayar, S.K.: Stereo with mirrors. In: Proceedings of Sixth International Conference on Computer Vision, pp. 1087–1094 (1998). doi:10.1109/ICCV.1998.710852

NVIDIA Corporation: NVIDIA CUDA (2012)

Oliva, A., Torralba, A.: Building the gist of a scene: the role of global image features in recognition. Prog. Brain Res. 155, 23–36 (2006). http://www.ncbi.nlm.nih.gov/pubmed/17027377

Peng, Y., Liu, Y., Li, Y., Zhang, M.: Coded aperture techniques for catadioptric omni-directional image defocus deblurring. In: 2012 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pp. 3373–3377. Seoul, Korea (2012). doi:10.1109/ICSMC.2012.6378313

Puig, L., Guerrero, J., Sturm, P.: Matching of omnidirectional and perspective images using the hybrid fundamental matrix. In: Proceedings of the 8th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras, OMNIVIS 2008. Marseille, France (2008)

Quinlan, J.R.: Induction of decision trees. Mach. Learn. 1(1), 81–106 (1986). doi:10.1023/A:1022643204877

Ramalingam, S., Bouaziz, S., Sturm, P., Brand, M.: Geolocalization using skylines from omni-images. In: Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops (ICCV Workshops), pp. 23–30 (2009). doi:10.1109/ICCVW.2009.5457723

Rees, D.W.: Patent 3505465 (1970)

Rosenfeld, A., Pfaltz, J.L.: Distance functions on digital pictures. Pattern Recognit. 1(1), 33–61 (1968). http://linkinghub.elsevier.com/retrieve/pii/0031320368900137

Rosten, E., Drummond, T.: Fusing points and lines for high performance tracking. IEEE Int. Conf. Comput. Vis. 2, 1508–1511 (2005). doi:10.1109/ICCV.2005.104

Rosten, E., Drummond, T.: Machine learning for high-speed corner detection. Eur. Conf. Comput. Vis. 1, 430–443 (2006). doi:10.1007/11744023_34

Scaramuzza, D., Martinelli, A., Siegwart, R.: A toolbox for easily calibrating omnidirectional cameras. In: Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5695–5701. Beijing, China (2006). doi:10.1109/IROS.2006.282372

Scaramuzza, D., Siegwart, R.: Appearance-guided monocular omnidirectional visual odometry for outdoor ground vehicles. IEEE Trans. Robot. 24(5), 1015–1026 (2008). doi:10.1109/TRO.2008.2004490

Scaramuzza, D., Siegwart, R., Martinelli, A.: A robust descriptor for tracking vertical lines in omnidirectional images and its use in mobile robotics. Int. J. Robot. Res. 28(2), 149–171 (2009). doi:10.1177/0278364908099858

Schroth, G., Huitl, R., Chen, D., Abu-Alqumsan, M., Al-Nuaimi, A., Steinbach, E.: Mobile visual location recognition. IEEE Sig. Process. Mag. 28(4), 77–89 (2011). http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=5888650

Shabayek, A., Morel, O., Fofi, D.: Auto-calibration and 3D reconstruction with non-central catadioptric sensors using polarization imaging. In: The Proceedings of the 10th Workshop on Omnidirectional Vision (OMNIVIS) in Conjunction with Robotics: Science and Systems (RSS). Zaragoza, Spain (2010)

Sturm, P.: Mixing catadioptric and perspective cameras. In: Proceedings of the Third Workshop on Omnidirectional Vision, 2002, pp. 37–44 (2002). doi:10.1109/OMNVIS.2002.1044489

Stürzl, W., Srinivasan, M.V.: Omnidirectional imaging system with constant elevational gain and single viewpoint. In: Proceedings of the OMNIVIS—10th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras, pp. 1–7. Zaragoza, Spain (2010)

Svoboda, T., Pajdla, T.: Epipolar geometry for central catadioptric cameras. Int. J. Comput. Vis. 49, 23–37 (2002). doi:10.1023/A:1019869530073. http://dl.acm.org/citation.cfm?id=598433.598513

Swaminathan, R.: Focus in catadioptric imaging systems. In: IEEE 11th International Conference on Computer Vision, ICCV 2007, pp. 1–7 (2007). doi:10.1109/ICCV.2007.4409205

Swaminathan, R., Grossberg, M.D., Nayar, S.K.: Non-single viewpoint catadioptric cameras: geometry and analysis. Int. J. Comput. Vis. 66(3), 211–229 (2006)

Tamimi, H., Andreasson, H., Treptow, A., Duckett, T., Zell, A.: Localization of mobile robots with omnidirectional vision using particle filter and iterative SIFT. Robot. Auton. Syst. 54(9), 758–765 (2006). http://linkinghub.elsevier.com/retrieve/pii/S0921889006000868

The MathWorks Inc.: MATLAB (2011). Version 7.14 (R2012a)

Ulrich, I., Nourbakhsh, I.: Appearance-based place recognition for topological localization. In: Proceedings of the IEEE International Conference on Robotics and Automation, ICRA ’00, vol. 2, pp. 1023–1029 (2000). doi:10.1109/ROBOT.2000.844734

Wang, M.L., Huang, C.C., Lin, H.Y.: An intelligent surveillance system based on an omnidirectional vision sensor. In: Proceedings of the IEEE Conference on Cybernetics and Intelligent Systems, pp. 1–6 (2006). doi:10.1109/ICCIS.2006.252312

Wiener, N.: Extrapolation, Interpolation, and Smoothing of Stationary Time Series. The MIT Press, Cambridge (1964)

Winters, N., Gaspar, J., Lacey, G., Santos-Victor, J.: Omni-directional vision for robot navigation. In: Proceedings of the IEEE Workshop on Omnidirectional Vision, pp. 21–28 (2000). doi:10.1109/OMNVIS.2000.853799

Yagi, Y.: Omnidirectional sensing and its applications. IEICE Trans. Inf. Syst. E82–D, 568–579 (1999)

Yagi, Y., Kawato, S.: Panorama scene analysis with conic projection. In: Proceedings of the IEEE International Workshop on Intelligent Robots and Systems ’90, ‘Towards a New Frontier of Applications’, IROS ’90, vol. 1, pp. 181–187 (1990). doi:10.1109/IROS.1990.262385

Yamazawa, K., Yagi, Y., Yachida, M.: Omnidirectional imaging with hyperboloidal projection. In: Proceedings of the 1993 IEEE/RSJ International Conference on Intelligent Robots and Systems ’93, IROS ’93, vol. 2, pp. 1029–1034. Tokyo, Japan (1993). doi:10.1109/IROS.1993.583287

Ying, X., Hu, Z.: Catadioptric camera calibration using geometric invariants. IEEE Trans. Pattern Anal. Mach. Intell. 26(10), 1260–1271 (2004). doi:10.1109/TPAMI.2004.79

Zhang, Z.: A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22(11), 1330–1334 (2000). doi:10.1109/34.888718

Acknowledgments

Nguan Soon Chong thanks Swinburne University of Technology (Sarawak Campus) for his Ph.D. studentship.

Additional information

This research project is funded by the Ministry of Higher Education (MOHE), Malaysia, under a Fundamental Research Grant Scheme No. FRGS/2/2010/TK/SWIN/03/02.

About this article

Cite this article

Chong, N.S., Kho, Y.H. & Wong, M.L.D. Visual detection in omnidirectional view sensors. SIViP 9, 923–940 (2015). https://doi.org/10.1007/s11760-013-0528-0

DOI: https://doi.org/10.1007/s11760-013-0528-0