Abstract

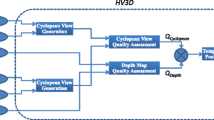

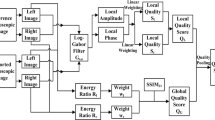

Because appropriately incorporating visual saliency information can benefit image quality assessment metrics, this paper proposes an objective stereoscopic video quality assessment (SVQA) metric that incorporates stereoscopic visual attention (SVA). Specifically, based on the multiple visual masking characteristics of the human visual system (HVS), a stereoscopic just-noticeable difference model is proposed to compute the perceptual visibility of stereoscopic video. Next, a novel SVA model is proposed to extract stereoscopic visual saliency information. Then, quality maps are calculated from the similarity between the perceptual visibility of the original and distorted stereoscopic videos. Finally, the quality score is obtained by incorporating the visual saliency information into the pooling of the quality maps. To evaluate the proposed SVQA metric, a subjective experiment is conducted. The experimental results show that the proposed SVQA metric performs better than the existing SVQA metrics it is compared with.
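The last two steps of this pipeline (similarity-based quality maps followed by saliency-weighted pooling) can be illustrated with a minimal sketch. The abstract does not give the actual formulas, so everything here is an assumption made for illustration: the SSIM-style similarity ratio, the stabilizing constant `c`, the weighted-mean pooling rule, and the function names `similarity_map` and `saliency_weighted_pool` are hypothetical and are not taken from the paper.

```python
from typing import List

Map = List[List[float]]  # a 2-D map stored as rows of floats


def similarity_map(ref: Map, dist: Map, c: float = 1e-3) -> Map:
    """Pixel-wise similarity between two perceptual-visibility maps.

    Assumed form (not from the paper): (2ab + c) / (a^2 + b^2 + c),
    which equals 1 where the maps agree and drops as they diverge.
    """
    return [
        [(2.0 * a * b + c) / (a * a + b * b + c) for a, b in zip(r_row, d_row)]
        for r_row, d_row in zip(ref, dist)
    ]


def saliency_weighted_pool(quality: Map, saliency: Map, eps: float = 1e-12) -> float:
    """Pool a quality map into one score, weighting each pixel by its saliency.

    Assumed pooling rule: sum(s * q) / sum(s). If the saliency map is
    (numerically) all zero, fall back to the unweighted mean.
    """
    num = 0.0
    den = 0.0
    for q_row, s_row in zip(quality, saliency):
        for q, s in zip(q_row, s_row):
            num += s * q
            den += s
    if den <= eps:
        flat = [q for row in quality for q in row]
        return sum(flat) / len(flat)
    return num / den


if __name__ == "__main__":
    # Toy 2x2 maps; the values are made up for demonstration only.
    ref_vis = [[0.8, 0.6], [0.4, 0.2]]
    dist_vis = [[0.8, 0.3], [0.4, 0.1]]
    sal = [[1.0, 0.5], [0.2, 0.0]]
    q_map = similarity_map(ref_vis, dist_vis)
    print(saliency_weighted_pool(q_map, sal))
```

Under these assumptions, distortions in highly salient regions lower the score more than the same distortions in regions that attract little attention, which is the intuition behind saliency-based pooling. A per-frame score of this kind would still need temporal pooling to yield a video-level score, and the abstract does not describe that step.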

Acknowledgments

This work was supported in part by the Major State Basic Research Development Program of China (973 Program, 2015CB351804) and by the National Science Foundation of China under Grant Nos. 61472101 and 61272386.

Cite this article

Qi, F., Zhao, D., Fan, X. et al. Stereoscopic video quality assessment based on visual attention and just-noticeable difference models. SIViP 10, 737–744 (2016). https://doi.org/10.1007/s11760-015-0802-4

DOI: https://doi.org/10.1007/s11760-015-0802-4