Abstract

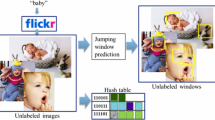

In this work, we propose an efficient image annotation approach based on the visual content of regions. We assume that regions can be described using both low-level and high-level features. Given a labeled dataset, we adopt a probabilistic semantic model to capture the relationships between low-level features and semantic clusters of regions. Moreover, since most previous works on image annotation do not address the curse of dimensionality, we tackle this problem by introducing a fuzzy version of Vector Approximation Files (VA-Files). The main contribution of this work lies in associating the generative model with fuzzy VA-Files, which provide accurate multi-dimensional indexing, to estimate the relationships between low-level features and semantic concepts. The proposed approach reduces computational complexity while optimizing annotation quality. Preliminary experiments indicate that it outperforms other state-of-the-art approaches.
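To make the indexing idea concrete, the following is a minimal sketch of how a VA-File approximates one feature dimension, together with one possible fuzzy relaxation. The abstract does not specify the fuzzy formulation, so the triangular membership function, the function names (`crisp_cell`, `fuzzy_cells`, `approximate`), and the uniform cell layout are assumptions made for illustration only, not the authors' method.

```python
def crisp_cell(x, lo, hi, bits):
    """Classic VA-File step: index of the uniform cell that contains x."""
    n = 1 << bits                      # 2**bits cells per dimension
    width = (hi - lo) / n
    idx = int((x - lo) / width)
    return min(max(idx, 0), n - 1)     # clamp values on or outside the range


def fuzzy_cells(x, lo, hi, bits):
    """Hypothetical fuzzy variant (assumption): triangular memberships
    centred on the cell centres, so a value near a cell boundary belongs
    partially to both neighbouring cells."""
    n = 1 << bits
    width = (hi - lo) / n
    first, last = lo + 0.5 * width, hi - 0.5 * width   # outermost centres
    x = min(max(x, first), last)
    pos = (x - first) / width          # position measured in cell units
    left = min(int(pos), n - 1)
    frac = pos - left
    if frac == 0.0:
        return {left: 1.0}
    return {left: 1.0 - frac, left + 1: frac}


def approximate(vector, bounds, bits):
    """Fuzzy approximation of a feature vector, one dict per dimension."""
    return [fuzzy_cells(x, lo, hi, bits) for x, (lo, hi) in zip(vector, bounds)]


if __name__ == "__main__":
    bounds = [(0.0, 1.0), (0.0, 1.0)]
    print(crisp_cell(0.26, 0.0, 1.0, 2))
    print(approximate([0.25, 0.375], bounds, 2))
```

In this sketch, a value lying exactly on a cell boundary receives equal membership in the two adjacent cells, whereas the crisp VA-File assigns it entirely to one of them. Whether the paper uses this or a different membership function cannot be determined from the abstract.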

Cite this article

Ben Rejeb, I., Ouni, S., Barhoumi, W. et al. Fuzzy VA-Files for multi-label image annotation based on visual content of regions. SIViP 12, 877–884 (2018). https://doi.org/10.1007/s11760-017-1233-1

DOI: https://doi.org/10.1007/s11760-017-1233-1