Abstract

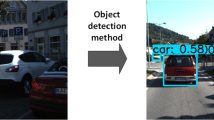

Vision-based autonomous driving systems must overcome many challenges at nighttime, such as complicated illumination conditions, dazzle caused by headlamps, light refraction, motion blur, and other nighttime-specific problems. Although research has gradually turned to low-light challenges, natural nighttime driving datasets covering various countries and regions are still lacking. We therefore propose the transnational image object detection datasets from nighttime driving (TDND datasets), which contain natural driving images from multiple countries and regions. The TDND datasets not only cover severe weather such as heavy rain and snow, but also retain complicated illumination conditions and other problems. The datasets consist of 115k images annotated in six classes. For evaluation, we compare the performance of six deep-learning-based object detection methods: Faster R-CNN, Cascade R-CNN, RetinaNet, YOLO-V3, CornerNet, and FCOS. The results show that the quality of the TDND datasets is comparable to that of MS-COCO. Moreover, state-of-the-art object detection methods still warrant further research and optimization for the special problems that arise at nighttime. The datasets can be downloaded at https://github.com/biubiu3/TDND-dataset.

This work is partially supported by Guangzhou Innovation Leading Team with the Grant Number 202009020002.

Cite this article

Nie, C., Qadar, M.A., Zhou, S. et al. Transnational image object detection datasets from nighttime driving. SIViP 17, 1123–1131 (2023). https://doi.org/10.1007/s11760-022-02319-8

DOI: https://doi.org/10.1007/s11760-022-02319-8