Abstract

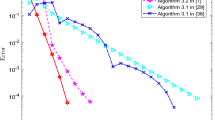

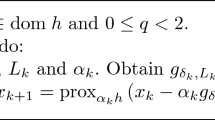

In this paper, we propose a new variant of the stochastic Gauss–Newton algorithm for solving a broad class of nonconvex composite optimization problems using stochastic recursive momentum (STORM) estimators. We first establish bounds on the mean-square errors of the estimators. We then prove that the oracle complexity of the proposed algorithm is \(\mathcal {O}(\varepsilon ^{-2})\) in the expectation setting. Moreover, we establish an oracle complexity of \(\mathcal {O}(\varepsilon ^{-2})\) with high probability in the finite-sum setting. Finally, numerical experiments are conducted to verify the efficiency of the algorithm.
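The abstract combines two ingredients: a Gauss–Newton step built from estimates \(F_t \approx F(x_t)\) and \(J_t \approx F'(x_t)\) of an inner mapping and its Jacobian, and STORM estimators in the sense of Cutkosky and Orabona, which update an estimate via \(v_t = g(x_t;\xi_t) + (1-\beta)\,(v_{t-1} - g(x_{t-1};\xi_t))\), reusing the same sample \(\xi_t\) at the new and the previous iterate. The Python sketch below puts the two together on a toy problem; it is an illustration under stated assumptions, not the authors' algorithm. Everything specific is hypothetical: the objective form \(\min_x \varphi(F(x))\) with \(\varphi(u) = \tfrac12\|u\|^2\), the finite-sum tanh test problem, the full-batch initialization, the constant momentum weight `beta`, the constant damping `M` (standing in for whatever step-size rule the paper uses), and all function names. With this smooth \(\varphi\), the Gauss–Newton subproblem \(\min_s \varphi(F_t + J_t s) + \tfrac{M}{2}\|s\|^2\) reduces to the linear system \((J_t^\top J_t + M I)\,s = -J_t^\top F_t\); a nonsmooth convex \(\varphi\) would instead require an inner convex solver.

```python
import numpy as np


def make_problem(n=100, m=8, d=5, noise=0.2, seed=0):
    """Hypothetical toy instance: F(x) = (1/n) * sum_i [tanh(A_i x) - b_i].

    b_i = tanh(A_i x_star), so every component vanishes at x_star and the
    minimum of 0.5 * ||F(x)||^2 is 0, attained at x_star.
    """
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((m, d)) / np.sqrt(d)
    # Per-sample matrices A_i are random perturbations of a shared A.
    As = A + noise * rng.standard_normal((n, m, d)) / np.sqrt(d)  # (n, m, d)
    x_star = 0.5 * rng.standard_normal(d)
    bs = np.tanh(As @ x_star)                                     # (n, m)
    return As, bs, x_star


def batch_value_jac(As, bs, x, idx):
    """Average of F_i(x) and of its Jacobian over the sample indices idx."""
    th = np.tanh(As[idx] @ x)                      # (b, m)
    vals = th - bs[idx]                            # (b, m)
    # Jacobian of tanh(A_i x) is diag(1 - tanh^2(A_i x)) @ A_i.
    jacs = (1.0 - th ** 2)[:, :, None] * As[idx]   # (b, m, d)
    return vals.mean(axis=0), jacs.mean(axis=0)


def objective(As, bs, x):
    """Full objective phi(F(x)) with the assumed phi(u) = 0.5 * ||u||^2."""
    F, _ = batch_value_jac(As, bs, x, np.arange(len(As)))
    return 0.5 * float(F @ F)


def sgn_storm(As, bs, x0, iters=300, batch=20, beta=0.5, M=1.0, seed=1):
    """Sketch: damped Gauss-Newton steps driven by STORM estimates of F and J."""
    rng = np.random.default_rng(seed)
    n, _, d = As.shape
    x = np.array(x0, dtype=float)
    # Assumption: start both estimators from one full pass over the data.
    F_est, J_est = batch_value_jac(As, bs, x, np.arange(n))
    for _ in range(iters):
        # Subproblem min_s 0.5*||F_est + J_est s||^2 + (M/2)*||s||^2,
        # solved in closed form via the normal equations.
        s = np.linalg.solve(J_est.T @ J_est + M * np.eye(d), -J_est.T @ F_est)
        x_new = x + s
        # STORM: evaluate the SAME mini-batch at the new and the old iterate.
        idx = rng.choice(n, size=batch, replace=False)
        F_new, J_new = batch_value_jac(As, bs, x_new, idx)
        F_old, J_old = batch_value_jac(As, bs, x, idx)
        F_est = F_new + (1.0 - beta) * (F_est - F_old)
        J_est = J_new + (1.0 - beta) * (J_est - J_old)
        x = x_new
    return x


if __name__ == "__main__":
    As, bs, x_star = make_problem()
    x0 = np.zeros(As.shape[2])
    x_hat = sgn_storm(As, bs, x0)
    print("objective at x0:   ", objective(As, bs, x0))
    print("objective at x_hat:", objective(As, bs, x_hat))
```

Evaluating one mini-batch at both `x_new` and `x` is what distinguishes STORM from a plain moving average; setting `beta = 1` collapses the update to an ordinary mini-batch estimate. Because every component vanishes at `x_star` in this toy instance, the sampling noise shrinks near the solution, so it is a forgiving sanity check rather than a representative benchmark.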

Acknowledgements

The authors would like to thank the editors and anonymous reviewers for their insightful comments and suggestions, which helped improve the quality of the paper. This work was also supported in part by NSFC11801131 and the Natural Science Foundation of Hebei Province (Grant No. A2019202229).

About this article

Cite this article

Wang, Z., Wen, B. Stochastic Gauss–Newton algorithm with STORM estimators for nonconvex composite optimization. J. Appl. Math. Comput. 68, 4621–4643 (2022). https://doi.org/10.1007/s12190-022-01722-1

DOI: https://doi.org/10.1007/s12190-022-01722-1