Abstract

In this work, a basic resource allocation (RA) problem is considered, in which a fixed capacity must be shared among a set of users. The RA task can be formulated as an optimization problem with a set of simple constraints and an objective function to be minimized. A fundamental relation between the RA optimization problem and the notion of max–min fairness is established. A sufficient condition on the objective function is provided that ensures the optimal solution is max–min fair. Notably, some important objective functions, such as least squares and maximum entropy, satisfy this condition. Finally, an application of max–min fairness to overload protection in 3G networks is considered.

Notes

This definition is consistent because, by constraint (2), \(0<\frac{c_{\!j}}{C} <1\) and \(\sum_j \frac{c_{\!j}}{C} =1\).

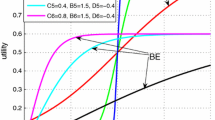

From Theorem 2 we know that \(f_{\mathrm{PF}}\) and \(f_{\mathrm{ME}}\) yield the same max–min fair allocation as \(f_{\mathrm{LS}}\).

References

Keshav S (1997) An engineering approach to computer networking. Addison-Wesley, Reading, pp 215–217

Bertsekas D, Gallager R (1992) Data networks. Prentice-Hall, Englewood Cliffs

Gafni EM, Bertsekas DP (1984) Dynamic control of session input rates in communication networks. IEEE Trans Automat Contr AC-29(11):1009–1016

Salles RM, Barria JA (2008) Lexicographic maximin optimisation for fair bandwidth allocation in computer networks. Eur J Oper Res 185:778–794

Uchida M (2007) Information theoretic aspects of fairness criteria in network resource allocation problems. In: GameComm ’07, Nantes, France, 22 Oct 2007

Le Boudec J-Y (2007) Rate adaptation, congestion control and fairness: a tutorial. Ecole Polytechnique Fédérale de Lausanne (EPFL)

Kelly F (1997) Charging and rate control for elastic traffic. Eur Trans Telecommun 8:33–37

Mo J, Walrand J (2000) Fair end-to-end window-based congestion control. IEEE/ACM Trans Netw 8(8): 556–567

Dianati M, Shen X, Naik S (2005) A new fairness index for radio resource allocation in wireless networks. Proc. IEEE wireless communications and networking conference (WCNC 2005), vol 2. IEEE Commun Society/WCNC, pp 712–717

Malla A, El-Kadi M, Olariu S, Todorova P (2003) A fair resource allocation protocol for multimedia wireless networks. IEEE Trans Parallel Distrib Syst 14(1):63–71

Aoun B, Boutaba R (2006) Max–min fair capacity of wireless mesh networks. In: IEEE Int’l Conf. on mobile ad hoc and sensor systems (MASS)

Boche H, Wiczanowski M, Stanczak S (2005) Unifying view on min–max fairness and utility optimization in cellular networks. Proc. IEEE wireless communications and networking conference (WCNC 2005), vol 3. IEEE Commun Society/WCNC, pp 1280–1285

Pérez D, Rodríguez Fonollosa J (2005) Practical algorithms for a family of waterfilling solutions. IEEE Trans Signal Process 53(2):686–695

Marsic I (2011) Computer networks: performance and QoS. Available at: www.caip.rutgers.edu/~marsic/books/QoS/book-QoS_marsic.pdf

The ATM Forum Technical Committee (1996) Traffic management specification. Technical report

Cao Z, Zegura EW (1999) Utility max–min: an application-oriented bandwidth allocation scheme. In: IEEE INFOCOM ’99, pp 793–801

Radunović B, Le Boudec J-Y (2007) A unified framework for max–min and min–max fairness with applications. IEEE/ACM Trans Netw 15(5):1073–1083

Cioffi JM (2011) EE379C advanced digital communications. Available at: http://www.stanford.edu/class/ee379c

Johansson M, Sternad M (2005) Resource allocation under uncertainty using the maximum entropy principle. IEEE Trans Inf Theory 51(12):4103–4117

Berger A, Della Pietra S, Della Pietra V (1996) A maximum entropy approach to natural language processing. Comput Linguist 22:39–68

Boyd S, Vandenberghe L (2004) Convex optimization. Cambridge University Press, Cambridge

Massoulié L, Proutière A, Virtamo J (2006) A queueing analysis of max–min fairness, proportional fairness and balanced fairness. Queueing Syst: Theory and Applications 53(1–2)

Ladiwala S, Ramaswamy R, Wolf T (2009) Transparent TCP acceleration. Comput Commun 32(4):691–702

Huang J, Subramanian VG, Agrawal R, Berry RA (2009) Downlink scheduling and resource allocation for OFDM systems. IEEE Trans Wirel Commun 8(1):39–71

Subramanian VG, Berry RA, Agrawal R (2010) Joint scheduling and resource allocation in CDMA systems. IEEE Trans Inf Theory 56(5):2416–2432

Madan R, Boyd SP, Lall S (2010) Fast algorithms for resource allocation in wireless cellular networks. IEEE/ACM Trans Netw 18(3):973–984

Appendices


Appendix 1: Proof of Eq. 5

The problem (4) can be easily solved by using the Lagrange multipliers method. The Lagrangian can be written as

where \(\mathcal{D}\) is the (unknown) set of users that are fully satisfied and \(k_{\mathcal{D}}\) its cardinality. Taking the derivatives we obtain:

Imposing the gradient equal to zero yields the stationary points

hence the optimal solution can be written as \(X^{*}=\max_{\mathcal{D}} X(\mathcal{D})\). In principle, one should explore all the possible configurations of \(\mathcal{D}\) (i.e., all the combinations of the demands \(d_i\)) to find the maximum. However, a certain number of configurations are not admissible since they violate the constraints \(\boldsymbol{0} < \boldsymbol{c}\leq\boldsymbol{d}\). Moreover, it can be easily seen that, among all the configurations of the same cardinality \(k_{\mathcal{D}}\), the maximum is reached when \(\mathcal{D}\) contains the \(k_{\mathcal{D}}\) smallest demands (i.e., \(d_1,\ldots,d_{k_{\mathcal{D}}}\)). Therefore, in order to maximize the objective function in problem (4), we can order the demands and restrict the search to those configurations of \(\mathcal{D}\) built by taking the \(k_{\mathcal{D}}\) smallest demands \(d_i\) (with \(k_{\mathcal{D}}\) unknown), yielding:

that is the thesis.□
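The search described in Appendix 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the displayed equations are not reproduced in this copy, so the water level is assumed to take the usual water-filling form \(X(k) = (C - \sum_{i\le k} d_i)/(N-k)\) over the sorted demands, and the function names `water_level`, `max_min_allocation` and `max_over_k` are hypothetical.

```python
from typing import List, Tuple


def water_level(demands: List[float], capacity: float) -> Tuple[float, int]:
    """Return (X, k): the water level and the number of fully satisfied users.

    Assumption: 0 < capacity <= sum(demands), and X(k) has the water-filling form
    (capacity - sum of the k smallest demands) / (N - k).
    """
    d = sorted(demands)
    n = len(d)
    served = 0.0  # total demand of the k smallest users, all granted in full
    for k in range(n):
        level = (capacity - served) / (n - k)  # equal share for the n - k remaining users
        if level <= d[k]:  # admissible: no remaining user receives more than it asked for
            return level, k
        served += d[k]
    raise ValueError("capacity exceeds the total demand")


def max_min_allocation(demands: List[float], capacity: float) -> List[float]:
    """Each user gets its demand or the water level, whichever is smaller."""
    level, _ = water_level(demands, capacity)
    return [min(dj, level) for dj in demands]


def max_over_k(demands: List[float], capacity: float) -> float:
    """Largest X(k) over k = 0..N-1, taking the k smallest demands as satisfied."""
    d = sorted(demands)
    n = len(d)
    prefix, best = 0.0, float("-inf")
    for k in range(n):
        best = max(best, (capacity - prefix) / (n - k))
        prefix += d[k]
    return best
```

For demands (8, 2, 3) and capacity 9, the two smallest demands are granted in full and the remaining user receives the water level 4; `max_over_k` returns the same level, in line with \(X^{*}=\max_{\mathcal{D}} X(\mathcal{D})\).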

Appendix 2: Proof of Theorem 1

Since each partial derivative depends only on the variable with respect to which it is taken, the stationarity condition is

for \(j = 1,\ldots,N\). Using the hypothesis, we end up with the following Karush–Kuhn–Tucker conditions (see e.g., [21]) for problem (3):

where \(j = 1,\ldots,N\). Since \(c_j > 0\), the constraint \(-\nu_j c_j = 0\) is satisfied only for \(\nu_j = 0\). This means that system (15) simplifies to

The index set \(\{1,\ldots,N\}\) can be partitioned into two subsets \(\mathcal{S}\) and \(\mathcal{D}\) based on the value of \(\mu_j\):

Since \(\mu_{\!j}>0 \Longrightarrow c_{\!j}=d_j\) by virtue of the fifth equation in system (16), \(\mathcal{D}\) contains all the users that are fully satisfied. Conversely, for \(\mu_j = 0\) the first equation becomes \(g_j'(c_j) = -\lambda\), which means that users in \(\mathcal{S}\) are provided with intermediate shares so as to make their derivatives all equal. Setting \(s_j { \stackrel{\textrm{\tiny def}}{=}} g_j'^{-1}\left( -\lambda \right)\) we can write:

that is the thesis.□
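The displayed systems of Appendix 2 are not reproduced in this copy. As a reading aid, the block below gives one plausible form of the simplified conditions and of the resulting allocation. It is a reconstruction from the surrounding text, not the authors' typeset equations, and it assumes that problem (3) minimizes \(f(\boldsymbol{c})\) subject to \(\sum_j c_j = C\) and \(0 < c_j \leq d_j\), with \(\partial f/\partial c_j = g_j'(c_j)\).

```latex
% Assumed multipliers: \lambda for the capacity constraint, \mu_j for c_j <= d_j
% (\nu_j, attached to c_j > 0, has already been set to zero).
\begin{align*}
  g_j'(c_j) + \lambda + \mu_j &= 0, \\
  \textstyle\sum_{j=1}^{N} c_j &= C, \\
  0 < c_j &\leq d_j, \\
  \mu_j &\geq 0, \\
  \mu_j\,(c_j - d_j) &= 0, \qquad j = 1,\ldots,N.
\end{align*}
% Resulting candidate allocation:
\[
  c_j =
  \begin{cases}
    d_j, & j \in \mathcal{D} \quad (\mu_j > 0), \\
    s_j = g_j'^{-1}(-\lambda), & j \in \mathcal{S} \quad (\mu_j = 0).
  \end{cases}
\]
```

With this ordering, the complementary-slackness condition is the fifth equation and the stationarity condition is the first, which matches the way the proof refers to them.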

Appendix 3: Proof of Corollary 1

With the further hypothesis, the gradient becomes:

and, accordingly, the first equation of the system (16) becomes

Using the partition \(\{\mathcal{S}, \mathcal{D}\}\) as in Appendix 2, we can write \(c_{\!j}= g'^{-1}( -\lambda )\) for \(j\in\mathcal{S}\), which does not depend on the index \(j\). The equality constraint yields:

that is

Rearranging terms, we end up with the following expression for the candidate optimum points:

that is the thesis.□
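Since the displayed steps of Appendix 3 are missing from this copy, the following worked derivation sketches how the common level follows from the equality constraint. It is a reconstruction under the assumption that all users share the same function \(g\), with \(X\) standing for \(g'^{-1}(-\lambda)\) and \(k\) for the cardinality of \(\mathcal{D}\). The numerical values are illustrative and do not come from the paper.

```latex
\begin{align*}
  \sum_{j\in\mathcal{D}} d_j + (N-k)\,X &= C
    && \text{(equality constraint, } X = g'^{-1}(-\lambda)\text{)} \\
  X &= \frac{C - \sum_{j\in\mathcal{D}} d_j}{N-k} \\
  c_j &=
  \begin{cases}
    d_j, & j\in\mathcal{D} \\
    X,   & j\in\mathcal{S}
  \end{cases}
\end{align*}
% Illustrative numbers: N = 3, d = (2, 3, 8), C = 9, D = {1, 2}, k = 2
%   X = (9 - 5) / (3 - 2) = 4,   c = (2, 3, 4).
% Taking g(c) = c^2 as an example of a convex choice, \lambda = -g'(X) = -8.
```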

Appendix 4: Proof of Theorem 2

The feasible set of the problem (3) is convex, hence it always admits a max–min fair vector [17, p. 1077], which we denote by \(\boldsymbol{c}^*=[c^*_1 c^*_2 \cdots c^*_N]^T\). Recalling Eq. 5 we can write

where, arranging user demands in increasing order (i.e., \(d_1 \leq d_2 \leq \cdots \leq d_N\)),

and k * denotes the cardinality of \(\mathcal{D}^*\).

Since \(f\;(\boldsymbol{c})=\sum_{j\;=1}^N h(c_{\!j})\), the hypotheses of Corollary 1 on the gradient \(\nabla f\) are satisfied, thus all candidate points for the optimum must take the form:

and \(k\) denotes the cardinality of \(\mathcal{D}\). To prove that \(\boldsymbol{c}^*\) is the optimum, we show that any other candidate point \(\boldsymbol{c}\) cannot improve the objective function value, i.e., \(\boldsymbol{c}\neq\boldsymbol{c}^* \Longrightarrow f\;(\boldsymbol{c})\geq f\;(\boldsymbol{c}^*)\). By hypothesis \(h(\cdot)\) is convex in the region of interest (since any allocation of resource greater than \(\max_i d_i\) would not be in the feasible set), which in turn implies the convexity of \(f(\cdot)\) (see e.g., [21]). The idea is to split the latter function into separate terms to be subsequently bounded by leveraging the properties of convex functions. The choice of a convenient split that allows for a sufficiently tight bound is not trivial. In the following we describe such a choice. To illustrate, imagine that a generic feasible solution \(\boldsymbol{c}\) (in the form of Eq. 18) is obtained from \(\boldsymbol{c}^*\) by performing some moves. In doing so, the elements of the sets \(\mathcal{S}^*, \mathcal{D}^*\) are rearranged into the sets \(\mathcal{S}, \mathcal{D}\). We can then identify four kinds of transition, depending on the departure/arrival sets, as listed in Table 1.

If we denote by \(k_{\cap}\) the cardinality of \(\mathcal{D}_{\cap}\), the value of the objective function in \(\boldsymbol{c}\) can be recast as follows:

We now proceed to rewrite the last three summations in Eq. 19. For the second summation, it is important to realize that each user in \(\mathcal{D}\setminus \mathcal{D}_{\cap}\) contributes to \(f\;(\boldsymbol{c})\) with an individual term that can be rewritten as \(h(d_i)=h(X^{*}+\Delta_i)\) with \(\Delta_i \geq 0\), \(i\in\mathcal{D}\setminus \mathcal{D}_{\cap}\). In fact, the set of constraints (1) ensures that the water-level \(X^{*}\) is no larger than any demand \(d_i\) for \(i\in\mathcal{S}^*\), and \(\mathcal{D}\setminus \mathcal{D}_{\cap} \subseteq \mathcal{S}^*\). Similarly, each user in \(\mathcal{D}^*\setminus \mathcal{D}_{\cap}\) contributes with an individual term \(h(X) = h(d_i - \Delta_i)\) with \(\Delta_i \geq 0\), \(i\in\mathcal{D}^* \setminus \mathcal{D}_{\cap}\), because \(X\) is smaller than any demand \(d_i\) for \(i\in\mathcal{S}\), and \(\mathcal{D}^*\setminus \mathcal{D}_{\cap} \subseteq \mathcal{S}\). For the last summation, note that the individual contributions \(h(X)\) are the same for all user indices. Finally, we denote by \(k_{\cup}\) the cardinality of the union of the index sets of the first three summations in Eq. 19, formally:

With these positions we can rewrite (19) as:

By construction, the water-level \(X^{*}\) of the max–min fair solution is the maximum among the water-levels associated with the candidate points taking the form of Eq. 18, i.e.,

This follows directly from the optimization problem (4); a formal proof is nevertheless given in Appendix 5. From Eq. 22 it follows that \(X = X^{*} - \Delta\), where \(\Delta \geq 0\) is such that all the individual level gaps \(\Delta_i\) compensate one another, so that the point remains within the candidate set. The value of \(\Delta\) can be obtained by setting the algebraic sum of the gaps to zero:

i.e.,

Now let us write the value of the objective function at \(\boldsymbol{c}^*\):

We now partition \(\mathcal{D}^*\) into \(\mathcal{D}_{\cap}\) and \(\mathcal{D}^* \setminus \mathcal{D}_{\cap}\), and split the last term based on the equivalence \(N-k^*=N-k_{\cup} +k-k_{\cap}\) (recall Eq. 20), rewriting:

With such rearrangement we can directly compare the values of the objective function in \(\boldsymbol{c}\) and \(\boldsymbol{c}^*\), i.e., Eqs. 21 and 23:

Since the function \(h(\cdot)\) is convex, the inequality \(h(y) - h(x) \geq h'(x)\,(y - x)\) holds (see e.g., [21]), where \(h'(\cdot)\) is the derivative of \(h(\cdot)\). Applying the inequality to each term of Eq. 24 we can write:

Substituting the explicit expression for Δ and arranging by homologous terms, after some simple algebraic steps we obtain:

The convexity of \(h(\cdot)\) implies that \(h'(\cdot)\) is a nondecreasing function; moreover, recalling Eq. 6, it holds that \(X^{*} \geq \max_{i\in\mathcal{D}^*} d_i\). Thus, the difference \(h'(X^{*}) - h'(d_i)\) is nonnegative for all \(i\in\mathcal{D}^* \setminus \mathcal{D}_{\cap}\), and hence \(\Delta f \geq 0\), that is, \(f\;(\boldsymbol{c})\geq f\;(\boldsymbol{c}^*)\). Since \(\boldsymbol{c}\) is arbitrary, we conclude that \(\boldsymbol{c}^*\) is the optimum.□
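The statement of Theorem 2 can be checked numerically on a small instance. The sketch below is a sanity check, not a proof: it perturbs the max–min fair point with random feasible transfers and counts how often a separable convex objective decreases. The demand vector, the capacity, the helper names, and the two choices of \(h\) (a sum of squares and a normalized negative entropy, taken here as stand-ins for the least-squares and maximum-entropy objectives) are all assumptions made for illustration.

```python
import math
import random


def max_min_allocation(demands, capacity):
    """Water-filling: serve the smallest demands in full, split the rest equally."""
    order = sorted(range(len(demands)), key=lambda j: demands[j])
    alloc = [0.0] * len(demands)
    remaining = capacity
    for pos, j in enumerate(order):
        share = remaining / (len(demands) - pos)  # equal split among users not yet served
        alloc[j] = min(demands[j], share)
        remaining -= alloc[j]
    return alloc


def objective(alloc, h):
    """Separable objective f(c) = sum_j h(c_j)."""
    return sum(h(c) for c in alloc)


def random_feasible_point(demands, capacity, rng, steps=200):
    """Random walk from the max-min point keeping sum(c) = capacity and 0 < c <= d."""
    c = max_min_allocation(demands, capacity)
    for _ in range(steps):
        i, j = rng.sample(range(len(c)), 2)
        room = min(c[i] - 1e-9, demands[j] - c[j])  # largest feasible transfer from i to j
        if room > 0:
            eps = rng.uniform(0.0, room)
            c[i] -= eps
            c[j] += eps
    return c


demands, capacity = [2.0, 3.0, 8.0, 5.0], 12.0  # illustrative values
rng = random.Random(0)
c_star = max_min_allocation(demands, capacity)
objectives = {
    "sum of squares": lambda x: x * x,
    "negative entropy": lambda x: (x / capacity) * math.log(x / capacity),
}
violations = 0
for name, h in objectives.items():
    f_star = objective(c_star, h)
    for _ in range(300):
        c = random_feasible_point(demands, capacity, rng)
        if objective(c, h) < f_star - 1e-9:  # a feasible point that beats c_star
            violations += 1
```

If Theorem 2 applies to these objectives, `violations` should remain zero, since no feasible point can achieve a lower value than the max–min fair allocation.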

Appendix 5: Proof of Eq. 22

By definition, \(X\) is the common water-level of partially satisfied users, i.e., users belonging to \(\mathcal{S}\), hence it holds that \(X < \min_{i\in\mathcal{S}} d_i\). Furthermore, we already noticed that \(X^{*} \geq \max_{i\in\mathcal{D}^*} d_i\). We now consider two distinct cases based on the mutual relation between the sets \(\mathcal{S}\) and \(\mathcal{D}^*\). If \(\mathcal{S}\cap\mathcal{D}^*\neq \emptyset\), we can take a generic element \(\bar{d}\in\mathcal{S}\cap\mathcal{D}^*\) and write:

Conversely, if \(\mathcal{S}\cap\mathcal{D}^*= \emptyset\), it follows necessarily that \(\mathcal{D} \supset \mathcal{D}^*\): in fact, moving from \(\mathcal{D}^*\) to \(\mathcal{D}\) the number of fully satisfied users increases, leading necessarily to a lower water level. This is a direct consequence of the definition of max–min fair water-level. Thus, we conclude that \(X^{*} \geq X\).□
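The inequality \(X^{*} \geq X\) can also be illustrated by brute force on a small instance. The sketch below enumerates every split of the users into a fully satisfied set \(\mathcal{D}\) and a partially satisfied set \(\mathcal{S}\), keeps the admissible ones, and reports their water levels. It assumes the candidate level has the form \((C - \sum_{j\in\mathcal{D}} d_j)/(N-k)\) and treats \(X \leq d_j\) as admissible on the boundary. The function name and the numbers are hypothetical.

```python
from itertools import combinations


def candidate_levels(demands, capacity):
    """Yield (D, X) for every admissible choice of the fully satisfied index set D."""
    n = len(demands)
    for k in range(n):  # k = |D|; at least one user stays partially served
        for D in combinations(range(n), k):
            X = (capacity - sum(demands[j] for j in D)) / (n - k)
            S = [j for j in range(n) if j not in D]
            # Admissible when users in S receive a positive share not above their demand.
            if X > 0 and all(X <= demands[j] for j in S):
                yield D, X


candidates = list(candidate_levels([2.0, 3.0, 8.0], 9.0))
best_set, best_level = max(candidates, key=lambda item: item[1])
```

On this instance two splits are admissible, with levels 0.5 and 4. The largest level is obtained when \(\mathcal{D}\) holds the two smallest demands, which is the max–min fair configuration.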

Coluccia, A., D’Alconzo, A. & Ricciato, F. On the optimality of max–min fairness in resource allocation. Ann. Telecommun. 67, 15–26 (2012). https://doi.org/10.1007/s12243-011-0246-y

DOI: https://doi.org/10.1007/s12243-011-0246-y