Abstract

The results presented here come from an ongoing study that aims to assist in teaching Sign Language (SL) to hearing-impaired children through non-verbal communication and imitation-based interaction games between a humanoid robot and the child. In this study, the robot will be able to express a word in SL, drawn from a set of chosen words, using hand movements and body and face gestures; having comprehended the word, the child will give relevant feedback to the robot.

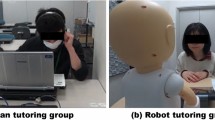

This paper reports the findings of an evaluation on a subset of sample words chosen from Turkish Sign Language (TSL), based on a comparison of video representations of each word performed by human teachers and by the Nao H25 robot. Several surveys and user studies were carried out to assess the resemblance between the two types of videos, which show the performance of the robot simulator and of the human teacher for each chosen word. To investigate the perceived level of similarity between human and robot behavior, participants from different age groups and with different levels of sign language acquaintance were asked to evaluate the videos using paper-based and online questionnaires. The results of these surveys are summarized, and the most significant factors affecting the comprehension of TSL words are discussed.

Acknowledgements

This work was supported by Istanbul Technical University Scientific Research Projects foundation under the contract BAP 34255. The analysis of data was partly supported by the EU Integrated Project LIREC (LIving with Robots and intEractive Companions), funded by the European Commission FP7-ICT under contract FP7-215554.

Special thanks to sign language teacher Feride Korkmaz, Prof. Dr. Sumru Ozsoy, and Prof. Dr. Lale Akarun for their guidance. We are also thankful to our participants for their patience and wonderful collaboration.

About this article

Cite this article

Kose, H., Yorganci, R., Algan, E.H. et al. Evaluation of the Robot Assisted Sign Language Tutoring Using Video-Based Studies. Int J of Soc Robotics 4, 273–283 (2012). https://doi.org/10.1007/s12369-012-0142-2

DOI: https://doi.org/10.1007/s12369-012-0142-2