Abstract

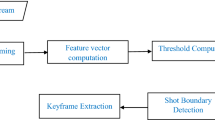

Keyframe selection is the process of finding a set of representative frames in an image sequence. We aim to automate keyframe selection. The main problem is that the quality of a selected keyframe is highly subjective and varies from user to user. To deal with this problem, we propose VIEMO, a keyframe selection framework based on visual and excitement features that consists of two integrated modules, namely event segmentation and keyframe selection. First, a scene change detection algorithm is applied for event segmentation. Then, visual features (contrast, color variance, sharpness, noise, and saliency) together with excitement features from a biosensor are used to filter for keyframes that closely match those selected by the user. Two fusion schemes, flat and hierarchical, were also investigated. To evaluate the quality of the keyframes produced by the proposed method, we present an evaluation technique that grades keyframe quality automatically. Even when a selected keyframe does not exactly match the keyframe chosen by the user, a degree of acceptance computed from visual similarity is provided. Experimental results showed that keyframe selection using only visual features yielded an acceptance rate of \(74.16\,\%\), whereas our proposed method achieved a higher acceptance rate of \(83.71\,\%\), an average improvement of \(9.55\) percentage points across all participants. Our framework therefore provides a potential solution to the subjectivity of keyframe selection in lifelog image sequences.
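The difference between the two fusion schemes can be illustrated with a minimal sketch. The abstract does not define how the features are combined, so everything below is an assumption made for illustration only: the function names, the equal weighting, the `visual_weight` parameter, and the convention that every feature is pre-normalized to [0, 1] with higher values being better (so the noise entry would be stored as one minus the measured noise level). It is not the authors' implementation.

```python
from statistics import mean

# Visual feature names taken from the abstract; the scores are hypothetical.
VISUAL_KEYS = ("contrast", "color_variance", "sharpness", "noise", "saliency")


def flat_score(frame):
    """Flat fusion (assumed form): average all six scores with equal weight."""
    return mean([frame[k] for k in VISUAL_KEYS] + [frame["excitement"]])


def hierarchical_score(frame, visual_weight=0.5):
    """Hierarchical fusion (assumed form): fuse the visual features first,
    then combine that single visual score with the excitement score."""
    visual = mean(frame[k] for k in VISUAL_KEYS)
    return visual_weight * visual + (1.0 - visual_weight) * frame["excitement"]


def select_keyframe(segment, score_fn):
    """Return the index of the highest-scoring frame in one event segment."""
    return max(range(len(segment)), key=lambda i: score_fn(segment[i]))


# One event segment with two made-up frames:
# frame 0 is visually strong but unexciting, frame 1 is the opposite.
segment = [
    {**{k: 0.9 for k in VISUAL_KEYS}, "excitement": 0.2},
    {**{k: 0.6 for k in VISUAL_KEYS}, "excitement": 0.8},
]

flat_choice = select_keyframe(segment, flat_score)
hierarchical_choice = select_keyframe(segment, hierarchical_score)
```

Under these assumptions the two schemes can disagree. In a flat average the five visual features outvote the single excitement score, so frame 0 is chosen, while the hierarchical form gives excitement the same weight as the combined visual score and chooses frame 1.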

Acknowledgments

We would like to thank the six student volunteers for data collection, ground-truth labeling, and scoring, and the Computer Vision Lab, Vienna University of Technology (TU Wien), Austria, for the Ubiqlog software. Special thanks to Amornched Jinda-apiraksa, Jana Machajdik, and Professor Robert Sablatnig, who provided valuable comments on this work.

About this article

Cite this article

Ratsamee, P., Mae, Y., Jinda-apiraksa, A. et al. Keyframe Selection Framework Based on Visual and Excitement Features for Lifelog Image Sequences. Int J of Soc Robotics 7, 859–874 (2015). https://doi.org/10.1007/s12369-015-0306-y

DOI: https://doi.org/10.1007/s12369-015-0306-y