Abstract

Kinect is frequently used as a capture device for perceiving human motion in human–robot interaction. However, because of the way Kinect captures motion, the raw 3D joint position data may contain outliers, which degrade motion imitation by a humanoid robot. To eliminate these outliers and improve the precision of motion perception, we draw on the principle of signal restoration and propose a robust regression-based refining algorithm. Our main contributions are the design of an Arc Tangent Square function that estimates the tendency of motion trajectories and the construction of a stepwise robust regression strategy that successively refines the outliers hidden in the motion capture data. The motion trajectories refined by the proposed algorithm are 40, 10, and 30% better than the raw motion capture data in spatial similarity, temporal similarity, and smoothness, respectively. In an online implementation on the humanoid robot NAO, the imitated motions of the human's upper limbs are synchronous and accurate. The proposed algorithm thus achieves high-performance motion perception for online imitation by a humanoid robot.
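The abstract does not spell out the Arc Tangent Square function or the stepwise strategy, so the sketch below only illustrates the generic idea of robust-regression outlier refinement on one coordinate of a joint trajectory: iteratively reweighted least squares (Holland and Welsch 1977) with Tukey's bisquare weights (cf. Gross 1977). The quadratic model, the tuning constant c = 4.685, the MAD scale estimate, and the rule of replacing zero-weight samples with fitted values are all assumptions for illustration; the function names are hypothetical and this is not the authors' method.

```python
import numpy as np

def bisquare_irls(t, y, degree=2, c=4.685, iters=20):
    """Robust polynomial fit of y(t) by IRLS with Tukey bisquare weights.

    Generic textbook technique; NOT the paper's Arc Tangent Square model.
    Returns the fitted values and the final per-sample weights.
    """
    X = np.vander(np.asarray(t, dtype=float), degree + 1)   # columns t^d, ..., t, 1
    y = np.asarray(y, dtype=float)
    w = np.ones_like(y)
    for _ in range(iters):
        sw = np.sqrt(w)
        # Weighted least squares: scale rows by sqrt(weight).
        beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
        r = y - X @ beta
        s = np.median(np.abs(r - np.median(r))) / 0.6745      # robust scale (MAD)
        if s < 1e-12:
            break                                            # (near-)perfect fit
        u = r / (c * s)
        w = np.where(np.abs(u) < 1.0, (1.0 - u**2) ** 2, 0.0)  # bisquare weights
    return X @ beta, w

def refine(t, y, **kw):
    """Replace samples that receive zero bisquare weight with the robust fit."""
    fitted, w = bisquare_irls(t, y, **kw)
    out = np.asarray(y, dtype=float).copy()
    mask = w == 0.0                      # samples rejected as outliers
    out[mask] = fitted[mask]
    return out, mask
```

Because the bisquare weight drops to exactly zero beyond c times the robust scale, gross spikes stop influencing the fit after the first few iterations, while small measurement noise keeps non-zero weight.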

References

Aldebaran Robotics (2015) H25 - Links—Aldebaran 2.1.4.13 documentation. http://doc.aldebaran.com/2-1/family/nao_h25/links_h25.html

Aldebaran Robotics (2015) Nao robot: characteristics. https://www.aldebaran.com/en/cool-robots/nao/find-out-more-about-nao

Aldebaran Robotics (2015) SDK, simple software for developing your NAO. https://www.aldebaran.com/en/robotics-solutions/robot-software/development

Almetwally I, Mallem M (2013) Real-time tele-operation and tele-walking of humanoid robot NAO using Kinect depth camera. In: 2013 10th IEEE international conference on networking, sensing and control (ICNSC). IEEE, Evry, pp 463–466

Andrews DF (1974) A robust method for multiple linear regression. Technometrics 16(4):523–531

Brown S (2013) Understanding machinima: essays on filmmaking in virtual worlds. Bloomsbury Academic, London

Do M, Azad P, Asfour T, Dillmann R (2008) Imitation of human motion on a humanoid robot using non-linear optimization. In: 2008 8th IEEE-RAS international conference on humanoid robots. IEEE, Daejeon, pp 545–552

Edgeworth FY (1887) On observations relating to several quantities. Hermathena 6(13):279–285

Fujimoto I, Matsumoto T, De Silva PRS, Kobayashi M, Higashi M (2011) Mimicking and evaluating human motion to improve the imitation skill of children with autism through a robot. Int J Soc Robot 3(4):349–357

Gall J, Rosenhahn B, Brox T, Seidel HP (2010) Optimization and filtering for human motion capture. Int J Comput Vis 87(1–2):75–92

Gross AM (1977) Confidence intervals for bisquare regression estimates. J Am Stat Assoc 72(358):341–354

Holland PW, Welsch RE (1977) Robust regression using iteratively reweighted least-squares. Commun Stat Theory Methods 6(9):813–827

Huber PJ (1964) Robust estimation of a location parameter. Ann Math Stat 35(1):73–101

López-Méndez A, Alcoverro M, Pardàs M, Casas JR (2011) Real-time upper body tracking with online initialization using a range sensor. In: 2011 IEEE international conference on computer vision workshops (ICCV workshops). IEEE, pp 391–398

Vicon Motion Systems Ltd (2013) T-series brochure. http://vicon.com/file/t-series-brochure.pdf

Luo RC, Shih BH, Lin TW (2013) Real time human motion imitation of anthropomorphic dual arm robot based on Cartesian impedance control. In: 2013 IEEE international symposium on robotic and sensors environments (ROSE). IEEE, Washington, DC, pp 25–30

Microsoft (2014) Develop for Kinect. http://www.microsoft.com/en-us/kinectforwindows

Motion Analysis Corporation (2015) Motion analysis corporation, the motion capture leader. http://www.motionanalysis.com/index.html

Muis A, Indrajit W (2012) Realistic motion preservation-imitation development through Kinect and humanoid robot. TELKOMNIKA 10(4):599–608

Nguyen VV, Lee JH (2012) Full-body imitation of human motions with Kinect and heterogeneous kinematic structure of humanoid robot. In: 2012 IEEE/SICE international symposium on system integration (SII 2012), Fukuoka, pp 93–98

Ou Y, Hu J, Wang Z, Fu Y, Wu X, Li X (2015) A real-time human imitation system using Kinect. Int J Soc Robot 7(5):587–600

Ramos O, Mansard N, Stasse O, Hak S, Saab L, Benazeth C (2015) Dynamic whole body motion generation for the dance of a humanoid robot. IEEE Robot Autom Mag (RAM) (in press). https://homepages.laas.fr/ostasse/papers/2015/ramos-ram-2015.pdf

Roosink M, Robitaille N, McFadyen BJ, Hébert LJ, Jackson PL, Bouyer LJ, Mercier C (2015) Real-time modulation of visual feedback on human full-body movements in a virtual mirror: development and proof-of-concept. J Neuroeng Rehabil. doi:10.1186/1743-0003-12-2

Rosado J, Silva F, Santos V (2014) A Kinect-based motion capture system for robotic gesture imitation. In: ROBOT2013: first Iberian robotics conference. Springer, Switzerland, pp 585–595

Stearns KM, Pollard CD (2013) Abnormal frontal plane knee mechanics during sidestep cutting in female soccer athletes after anterior cruciate ligament reconstruction and return to sport. Am J Sports Med. doi:10.1177/0363546513476853

Tan H, Kawamura K (2011) A computational framework for integrating robotic exploration and human demonstration in imitation learning. In: 2011 IEEE international conference on systems, man, and cybernetics (SMC). IEEE, Anchorage, pp 2501–2506

Thobbi A, Sheng W (2010) Imitation learning of arm gestures in presence of missing data for humanoid robots. In: 2010 10th IEEE-RAS international conference on humanoid robots. IEEE, Nashville, pp 92–97

Vakanski A, Mantegh I, Irish A, Janabi-Sharifi F (2012) Trajectory learning for robot programming by demonstration using hidden Markov model and dynamic time warping. IEEE Trans Syst Man Cybern B Cybern 42(4):1039–1052

Xiao Y, Zhang Z, Beck A, Yuan J, Thalmann D (2014) Human–robot interaction by understanding upper body gestures. Presence Teleoper Virtual Environ 23(2):133–154

Zhang L, Huang Q, Yang J, Shi Y, Wang Z (2007) Design of humanoid complicated dynamic motion with similarity considered. Acta Autom Sin 33(5):522–528

Zhang Z (1997) Parameter estimation techniques: a tutorial with application to conic fitting. Image Vis Comput 15(1):59–76

Zhu T (2015) Online imitation of human motion using Kinect and NAO. https://www.youtube.com/watch?v=Hw2FrmW312U

Zhu T, Zhao Q, Xia Z (2014) A visual perception algorithm for human motion by a Kinect. Robot 36(6):647–653

Acknowledgements

This work was funded by the Major Research plan of the National Natural Science Foundation of China (91646205), the National Natural Science Foundation of China (51305436), and the Major Project of Guangdong Province Science and Technology Department (2014B090919002).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Inverse Kinematics Transform (IKT) Formulas

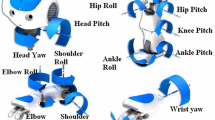

In Appendix A, the left arm is taken as an example to present the IKT formulas. The structure of the left arm is illustrated in Fig. 16. The left arm can be viewed as a two-link manipulator with four DOFs. Solving the inverse kinematics problem algebraically can lead to non-unique solutions and incurs considerable computational cost. Therefore, the geometric approach, which is hierarchical and intuitive, is used here to implement the IKT.

The shoulder joint angles depend on the poses of the torso and the upper arm, whereas the elbow angles depend on the relative positions of the upper and lower arms. Assuming that the positions of all joints have been converted into NAO coordinates, the x-axis of the torso coordinates is given by the normal vector of the torso plane, which is defined by the left shoulder \({{\varvec{P}}_{\mathrm {LS}}}\), the right shoulder \({{\varvec{P}}_{\mathrm {RS}}}\), and the center of the hip \({{\varvec{P}}_{\mathrm {CH}}}\). The vector from \({{\varvec{P}}_{\mathrm {RS}}}\) to \({{\varvec{P}}_{\mathrm {LS}}}\) defines the y-axis, and the vector from \({{\varvec{P}}_{\mathrm {CH}}}\) to the center of the shoulders \({{\varvec{P}}_{\mathrm {CS}}}\) defines the z-axis. The rotation matrix \({{\varvec{R}}_{\mathrm {Torso}}}\) from NAO coordinates to the torso coordinates can then be obtained [27].
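A minimal sketch of this torso-frame construction follows. It assumes that \({{\varvec{P}}_{\mathrm {CS}}}\) is the midpoint of the two shoulders and that the frame is re-orthogonalised (x = y × z, then z recomputed as x × y) so that \({{\varvec{R}}_{\mathrm {Torso}}}\) is a proper rotation; the function name `torso_rotation` is hypothetical, and the paper's exact construction may differ.

```python
import numpy as np

def torso_rotation(p_ls, p_rs, p_ch):
    """Return a 3x3 matrix whose rows are the torso x, y, z axes in NAO coordinates.

    Multiplying a NAO-frame vector by this matrix expresses it in torso coordinates.
    Assumptions (not from the paper): P_CS is the shoulder midpoint, and the
    axes are re-orthogonalised into a right-handed orthonormal frame.
    """
    p_ls, p_rs, p_ch = (np.asarray(p, dtype=float) for p in (p_ls, p_rs, p_ch))
    p_cs = 0.5 * (p_ls + p_rs)      # assumed center of the shoulders
    y = p_ls - p_rs                 # right shoulder -> left shoulder
    z = p_cs - p_ch                 # hip center -> shoulder center
    x = np.cross(y, z)              # normal of the torso plane
    x /= np.linalg.norm(x)
    y /= np.linalg.norm(y)
    z = np.cross(x, y)              # recompute z so the three axes are orthonormal
    return np.vstack([x, y, z])
```

Building x from the cross product and then recomputing z keeps the matrix orthonormal even when the measured y and z vectors are not exactly perpendicular.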

1.1 The IKT of the Shoulder Joint Angles

All joint positions of the left arm are translated and rotated from NAO coordinates into the left shoulder coordinates. Because the left shoulder coordinates are related to the torso coordinates by a translation only (no rotation), the joint positions can be expressed as:

where \({{\varvec{P}}_{\mathrm {LS}}}\), \({{\varvec{P}}_{\mathrm {LE}}}\), and \({{\varvec{P}}_{\mathrm {LW}}}\) are the joint positions in the NAO coordinates, while \({{\varvec{P}}'_{\mathrm {LS}}}\), \({{\varvec{P}}'_{\mathrm {LE}}}\), and \({{\varvec{P}}'_{\mathrm {LW}}}\) are the corresponding positions in the left shoulder coordinates. Equation (A.1) means that \({{\varvec{P}}'_{\mathrm {LS}}}\) is the origin of the left shoulder coordinates. According to Fig. 16a, \({\theta _{\mathrm {LSPitch}}}\) and \({\theta _{\mathrm {LSRoll}}}\) can be calculated by the following formulas:
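A minimal sketch of this step under stated assumptions: it takes \({\varvec{P}}' = {{\varvec{R}}_{\mathrm {Torso}}}\left( {\varvec{P}} - {{\varvec{P}}_{\mathrm {LS}}} \right)\), which is consistent with the shoulder frame being a pure translation of the torso frame, uses x forward, y left, and z up, and models the upper-arm direction as \(R_y(\theta_{\mathrm{LSPitch}})R_z(\theta_{\mathrm{LSRoll}})\) applied to the x-axis, the rotation order that (A.7) undoes. The function names are hypothetical, and the formulas are one possible geometric solution rather than those read from Fig. 16a.

```python
import numpy as np

def to_shoulder_frame(r_torso, p_ls, p_le, p_lw):
    """Map elbow and wrist from NAO coordinates into the left shoulder coordinates.

    Assumption: P' = R_Torso (P - P_LS), so the shoulder itself maps to the origin.
    """
    p_ls = np.asarray(p_ls, dtype=float)
    p_le_s = r_torso @ (np.asarray(p_le, dtype=float) - p_ls)
    p_lw_s = r_torso @ (np.asarray(p_lw, dtype=float) - p_ls)
    return p_le_s, p_lw_s

def shoulder_angles(p_le_s):
    """Hypothetical shoulder pitch/roll from the elbow position in the shoulder frame.

    Assumes x forward, y left, z up and an upper-arm direction of
    R_y(pitch) R_z(roll) [1, 0, 0]^T = [cos p cos r, sin r, -sin p cos r]^T.
    """
    u = np.asarray(p_le_s, dtype=float)
    u = u / np.linalg.norm(u)
    roll = np.arcsin(np.clip(u[1], -1.0, 1.0))   # lateral (y) component gives roll
    pitch = np.arctan2(-u[2], u[0])               # arm lowered (z < 0) gives pitch > 0
    return pitch, roll
```

For example, an arm hanging straight down in the shoulder frame yields a pitch of \(\pi/2\) and a roll of zero under this convention.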

1.2 The IKT of the Elbow Joint Angles

The calculation of \({\theta _{\mathrm {LEYaw}}}\) and \({\theta _{\mathrm {LERoll}}}\) requires a further coordinate transform, i.e., from the left shoulder coordinates to the left elbow coordinates. The joint positions in the left elbow coordinates can be presented as follows:

where \({\varvec{P}}''_{\mathrm {LE}}\) and \({\varvec{P}}''_{\mathrm {LW}}\) are the positions of the left elbow and wrist, respectively, in the left elbow coordinates. Equation (A.7) indicates that the left shoulder coordinates are first rotated by \( - {\theta _{\mathrm {LSPitch}}}\) around the y-axis and then by \( - {\theta _{\mathrm {LSRoll}}}\) around the z-axis. The expressions of \({\varvec{R}} \left( y, - {\theta _{\mathrm {LSPitch}}} \right) \) and \({\varvec{R}}\left( z, - {\theta _{\mathrm {LSRoll}}} \right) \) are:
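For reference, if the standard right-handed elementary rotation matrices are assumed, these two matrices take the form below. The second relation further assumes that the transform also moves the origin to the elbow; it is consistent with the rotation order just described but is a reconstruction, not the paper's printed equation.

\[
{\varvec{R}}\left( y, - {\theta _{\mathrm {LSPitch}}} \right) =
\begin{bmatrix}
\cos {\theta _{\mathrm {LSPitch}}} & 0 & -\sin {\theta _{\mathrm {LSPitch}}} \\
0 & 1 & 0 \\
\sin {\theta _{\mathrm {LSPitch}}} & 0 & \cos {\theta _{\mathrm {LSPitch}}}
\end{bmatrix},
\qquad
{\varvec{R}}\left( z, - {\theta _{\mathrm {LSRoll}}} \right) =
\begin{bmatrix}
\cos {\theta _{\mathrm {LSRoll}}} & \sin {\theta _{\mathrm {LSRoll}}} & 0 \\
-\sin {\theta _{\mathrm {LSRoll}}} & \cos {\theta _{\mathrm {LSRoll}}} & 0 \\
0 & 0 & 1
\end{bmatrix}
\]

\[
{\varvec{P}}''_{\mathrm {LW}} = {\varvec{R}}\left( z, - {\theta _{\mathrm {LSRoll}}} \right) {\varvec{R}}\left( y, - {\theta _{\mathrm {LSPitch}}} \right) \left( {\varvec{P}}'_{\mathrm {LW}} - {\varvec{P}}'_{\mathrm {LE}} \right), \qquad {\varvec{P}}''_{\mathrm {LE}} = \mathbf{0}
\]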

According to Fig. 16b, \({\theta _{\mathrm {LEYaw}}}\) and \({\theta _{\mathrm {LERoll}}}\) are given as follows:
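Under the same assumptions, the elbow angles can be sketched by undoing the shoulder rotations in the order described for (A.7) and reading yaw and roll off the forearm direction, modelled here as \(R_x(\theta_{\mathrm{LEYaw}})R_z(\theta_{\mathrm{LERoll}})\) applied to the x-axis. Taking the elbow roll as non-positive is an assumption based on NAO's LElbowRoll range and should be checked against the Aldebaran H25 joint documentation; the helper names are hypothetical.

```python
import numpy as np

def rot_y(a):
    """Elementary right-handed rotation about the y-axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rot_z(a):
    """Elementary right-handed rotation about the z-axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def elbow_angles(p_le_s, p_lw_s, sh_pitch, sh_roll, eps=1e-9):
    """Hypothetical elbow yaw/roll from shoulder-frame elbow and wrist positions.

    Assumes the forearm direction in the elbow coordinates equals
    R_x(yaw) R_z(roll) [1, 0, 0]^T and that the elbow roll is <= 0.
    """
    d = np.asarray(p_lw_s, dtype=float) - np.asarray(p_le_s, dtype=float)
    # Rotate by -pitch about y, then by -roll about z (order described for (A.7)).
    f = rot_z(-sh_roll) @ rot_y(-sh_pitch) @ d
    f = f / np.linalg.norm(f)
    lateral = np.hypot(f[1], f[2])          # equals |sin(roll)|
    roll = -np.arctan2(lateral, f[0])       # bend angle, taken non-positive
    if lateral < eps:
        return 0.0, roll                    # straight arm: yaw undefined, use 0
    # With sin(roll) < 0: f[1] = sin(roll)cos(yaw), f[2] = sin(roll)sin(yaw).
    yaw = np.arctan2(-f[2], -f[1])
    return yaw, roll
```

The straight-arm case is singular: when the forearm is aligned with the upper arm, every yaw gives the same wrist position, so the sketch returns a yaw of zero there.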

The IKT formulas of the right arm can be derived in a similar fashion.

Cite this article

Zhu, T., Zhao, Q., Wan, W. et al. Robust Regression-Based Motion Perception for Online Imitation on Humanoid Robot. Int J of Soc Robotics 9, 705–725 (2017). https://doi.org/10.1007/s12369-017-0416-9

DOI: https://doi.org/10.1007/s12369-017-0416-9