Abstract

Assigning categories to objects allows the mind to code experience by concepts, thus easing the burden in perceptual, storage, and reasoning processes. Moreover, maximal efficiency of cognitive resources is attained with categories that best mirror the structure of the perceived world. In this work, we will explore how taxonomies could be represented in the brain, and their application in learning and recall. In a recent work, Sacramento and Wichert (in Neural Netw 24(2):143–147, 2011) proposed a hierarchical arrangement of compressed associative networks, improving retrieval time by allowing irrelevant neurons to be pruned early. We present an extension to this model where superordinate concepts are encoded in these compressed networks. Memory traces are stored in an uncompressed network, and each additional network codes for a taxonomical rank. Retrieval is progressive, presenting increasingly specific superordinate concepts. The semantic and technical aspects of the model are investigated in two studies: wine classification and random correlated data.

Notes

Note that in auto-associative setups, every neuron performs input and output roles.

On each box, the central mark is the median, the edges of the box are the 25th and 75th percentiles, whiskers extend to the most extreme data points not considered outliers, and outliers are plotted individually. Any datum lying more than 1.5 times the interquartile range \((Q_3 - Q_1)\) above the upper quartile or below the lower quartile is considered an outlier.

References

Sacramento J, Wichert A. Tree-like hierarchical associative memory structures. Neural Netw. 2011;24(2):143–7.

Harnad S. To cognize is to categorize: cognition is categorization. Handbook of categorization in cognitive science. 2005. pp. 19–43.

Rosch E. Principles of categorization. In: Rosch E, Lloyd BB, editors. Cognition and categorization. Hillsdale, NJ: Lawrence Erlbaum Associates; 1978. p. 27–48. (Reprinted in Readings in Cognitive Science. A Perspective from Psychology and Artificial Intelligence, A. Collins and E.E. Smith, editors, Morgan Kaufmann Publishers, Los Altos (CA), USA, 1991).

Berlin B. Ethnobiological classification: principles of categorization of plants and animals in traditional societies. Princeton, NJ: Princeton University Press; 1992.

Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: the animate-inanimate distinction. J Cogn Neurosci. 1998;10(1):1–34.

Warrington EK, McCarthy R. Category specific access dysphasia. Brain 1983;106(4):859–78.

Perani D, Schnur T, Tettamanti M, Cappa SF, Fazio F, et al. Word and picture matching: a PET study of semantic category effects. Neuropsychologia 1999;37(3):293–306.

Thompson-Schill S, Aguirre G, D’Esposito M, Farah M. A neural basis for category and modality specificity of semantic knowledge. Neuropsychologia 1999;37(6):671–6.

Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proc Natl Acad Sci. 1999;96(16):9379.

Sacramento J, Burnay F, Wichert A. Regarding the temporal requirements of a hierarchical Willshaw network. Neural Netw. 2012;25:84–93. doi:10.1016/j.neunet.2011.07.005.

Willshaw DJ, Buneman OP, Longuet-Higgins HC. Non-holographic associative memory. Nature. 1969;222(5197):960–2.

Palm G. On associative memory. Biol Cybern. 1980;36:19–31. doi:10.1007/BF00337019.

Palm G. Towards a theory of cell assemblies. Biol Cybern. 1981;39:181–94. doi:10.1007/BF00342771.

Wennekers T. On the natural hierarchical composition of cliques in cell assemblies. Cogn Comput. 2009;1:128–38.

Apostle HG. Aristotle’s Categories and propositions (De Interpretatione). Grinnell, IA: Peripatetic Press; 1980.

Murphy GL. The big book of concepts. Cambridge: MIT Press; 2002.

Smith EE, Medin DL. Categories and concepts. In: Smith EE, Medin DL, editors. Harvard University Press, Cambridge, MA; 1981.

Barsalou LW. Ideals, central tendency, and frequency of instantiation as determinants of graded structure in categories. J Exp Psychol Learn Memory Cogn. 1985;11(4):629–54.

Rosch E, Mervis CB, Gray WD, Johnson DM, Boyes-Braem P. Basic objects in natural categories. Cogn Psychol. 1976;8(3):382–439.

Smith EE. Concepts and categorization. In: Smith EE, Osherson DN, editors. Thinking. vol. 3. 2nd ed. Cambridge, MA: MIT Press; 1995. pp. 3–33.

McClelland JL, Rumelhart DE. Distributed memory and the representation of general and specific information. J Exp Psychol Gen. 1985;114(2):159–88.

Tversky A. Features of similarity. Psychol Rev. 1977;84(4):327–52.

Osherson DN. Probability judgement. In: Smith EE, Osherson DN, editors. Thinking. vol. 3. 2nd ed. Cambridge, MA: MIT Press; 1995. pp. 35–75.

Rosch E, Mervis CB. Family resemblances: studies in the internal structure of categories. Cogn Psychol. 1975;7(4):573–605.

Rips LJ, Shoben EJ, Smith EE. Semantic distance and the verification of semantic relations. J Verbal Learn Verbal Behav. 1973;12(1):1–20.

Wichert A. A categorical expert system “Jurassic”. Expert Syst Appl. 2000;(19):149–58.

Nosofsky RM. Attention, similarity, and the identification-categorization relationship. J Exp Psychol Gen. 1986;115(1):39–61.

Gentner D, Kurtz KJ. Relational categories. In: Ahn WK, Goldstone RL, Love BC, Markman AB, Wolff PW, editors. Categorization inside and outside the lab. Washington, DC: American Psychological Association; 2005. pp. 151–175.

Waltz J, Lau A, Grewal S, Holyoak K. The role of working memory in analogical mapping. Memory Cogn. 2000;28:1205–12. doi:10.3758/BF03211821.

Smith EE, Grossman M. Multiple systems of category learning. Neurosci Biobehav Rev. 2008;32(2):249–64. (The Cognitive Neuroscience of Category Learning).

Tomlinson M, Love B. When learning to classify by relations is easier than by features. Think Reason. 2010;16(4):372–401.

Doumas LAA, Hummel JE, Sandhofer CM. A Theory of the discovery and predication of relational concepts. Psychol Rev. 2008;115(1):1–43.

Kay P. Taxonomy and semantic contrast. Language. 1971;47(4):866–887.

Murphy GL, Brownell HH. Category differentiation in object recognition: typicality constraints on the basic category advantage. J Exp Psychol Learn Memory Cogn. 1985;11(1):70–84.

Sneath PHA, Sokal RR. Numerical taxonomy. Nature. 1962;193(4818):855–60.

Sneath PH. The application of computers to taxonomy. J Gen Microbiol. 1957;17:201–26.

Jaccard P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bulletin de la Société Vaudoise des Sciences Naturelles. 1901;37:547–79.

Hastie T, Tibshirani R, Friedman JH. The elements of statistical learning, Corrected ed. Springer, Berlin; 2003.

Tan PN, Steinbach M, Kumar V. Introduction to data mining, used ed. Addison Wesley, Reading, MA; 2005.

Manning CD, Raghavan P, Schütze H. Introduction to information retrieval, 1st ed. Cambridge University Press, Cambridge; 2008.

Sokal RR. Numerical taxonomy. Sci Am. 1966;215(6):106–116.

Anderson JR, Bower GH. Human associative memory. Winston, Washington; 1973.

Collins A, Quillian M. Retrieval time from semantic memory. J Verbal Learn Verbal Behav. 1969;8(2):240–7.

Rumelhart DE, McClelland JL. Parallel distributed processing: explorations in the microstructure of cognition, vol 1: foundations. MIT Press, Cambridge, MA; 1986.

Collins AM, Loftus EF. A spreading-activation theory of semantic processing. Psychol Rev. 1975;82(6):407–28.

Rojas R. Neural networks: a systematic introduction. Springer, Berlin; 1996.

Steinbuch K. Die Lernmatrix. Kybernetik 1961;1:36–45.

Amari SI. Characteristics of sparsely encoded associative memory. Neural Netw. 1989;2(6):451–7.

Nadal JP, Toulouse G. Information storage in sparsely coded memory nets. Netw Comput Neural Syst. 1990;1(1):61–74.

Buckingham J, Willshaw D. Performance characteristics of the associative net. Netw Comput Neural Syst. 1992;3(4):407–14.

Graham B, Willshaw D. Improving recall from an associative memory. Biol Cybern. 1995;72(4):337–46.

Knoblauch A, Palm G, Sommer FT. Memory capacities for synaptic and structural plasticity. Neural Comput. 2010;22(2):289–341.

Hebb DO. The organization of behaviour. Wiley, New York; 1949.

Buckingham J, Willshaw D. On setting unit thresholds in an incompletely connected associative net. Netw Comput Neural Syst. 1993;4(4):441–59.

Schwenker F, Sommer FT, Palm G. Iterative retrieval of sparsely coded associative memory patterns. Neural Netw. 1996;9(3):445–55.

Wichert A. Subspace tree. In: IEEE on seventh international workshop on content-based multimedia indexing conference proceedings, 2009; p. 38–43.

Reed SK. Pattern recognition and categorization. Cogn Psychol. 1972;3(3):382–407.

Jones GV. Identifying basic categories. Psychol Bull. 1983;94(3):423.

Edgell SE. Using configural and dimensional information. Individual and group decision making: current issues; 1993. p. 43.

Gluck M, Corter J. Information, uncertainty, and the utility of categories. In: Proceedings of the seventh annual conference of the cognitive science society. Hillsdale, NJ: Erlbaum; 1985. pp. 283–287.

Rosenblatt F. Principles of neurodynamics: perceptrons and the theory of brain mechanisms. Washington DC: Spartan; 1962.

Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature 1986;323(6088):533–6.

Kohonen T. Self-organized formation of topologically correct feature maps. Biol Cybern. 1982;43(1):59–69.

Waibel A, Hanazawa T, Hinton G, Shikano K, Lang KJ. Phoneme recognition using time-delay neural networks. IEEE Trans Acoustics Speech Signal Proc. 1989;37(3):328–39.

Cohen LB, Chaput HH, Cashon CH. A constructivist model of infant cognition. Cogn Dev. 2002;17(3):1323–43.

Brunel N. Storage capacity of neural networks: effect of the fluctuations of the number of active neurons per memory. J Phys A Math Gen. 1994;27(14):4783–9.

Petersen CCH, Malenka RC, Nicoll RA, Hopfield JJ. All-or-none potentiation at CA3-CA1 synapses. Proc Natl Acad Sci. 1998;95(8):4732–7.

O’Connor DH, Wittenberg GM, Wang SSH. Graded bidirectional synaptic plasticity is composed of switch-like unitary events. Proc Natl Acad Sci USA. 2005;102(27):9679–84.

Amit DJ, Fusi S. Learning in neural networks with material synapses. Neural Comput. 1994;6(5):957–82.

Fusi S, Abbott LF. Limits on the memory storage capacity of bounded synapses. Nature Neurosci. 2007;10(4):485–493.

Barrett AB, van Rossum MCW. Optimal learning rules for discrete synapses. PLoS Comput Biol. 2008;4(11):e1000230.

Leibold C, Kempter R. Sparseness constrains the prolongation of memory lifetime via synaptic metaplasticity. Cerebral Cortex 2008;18(1):67–77.

Huang Y, Amit Y. Capacity analysis in multi-state synaptic models: a retrieval probability perspective. J Comput Neurosci. 2011;30(3):699–720.

Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci USA. 1982;79(8):2554–58.

Gutfreund H. Neural networks with hierarchically correlated patterns. Phys Rev A 1988;37(2):570–7.

Belohlávek R. Representation of concept lattices by bidirectional associative memories. Neural Comput. 2000;12:2279–90.

Parga N, Virasoro MA. The ultrametric organization of memories in a neural network. J Phys. 1986;47(11):1857–64.

Toulouse G, Dehaene S, Changeux JP. Spin glass model of learning by selection. Proc Natl Acad Sci. 1986;83(6):1695–8.

Fontanari JF. Generalization in a Hopfield network. J Phys France 1990;51(21):2421–30.

Engel A. Storage of hierarchically correlated patterns. J Phys A Math Gen. 1990;23:2587.

Kimoto T, Okada M. Coexistence of memory patterns and mixed states in a sparsely encoded associative memory model storing ultrametric patterns. Biol Cybern. 2004;90(4):229–38.

Abeles M. Local cortical circuits: an electrophysiological study. Springer, New York; 1982.

Abeles M. Corticonics: neural circuits of the cerebral cortex. Cambridge University Press, Cambridge; 1991.

Abeles M, Hayon G, Lehmann D. Modeling compositionality by dynamic binding of synfire chains. J Comput Neurosci. 2004;17(2):179–201.

Földiák P. Forming sparse representations by local anti-Hebbian learning. Biol Cybern. 1990;64(2):165–70.

Brunel N, Carusi F, Fusi S. Slow stochastic Hebbian learning of classes of stimuli in a recurrent neural network. Netw Comput Neural Syst. 1998;9(1):123–52.

Sejnowski TJ. Storing covariance with nonlinearly interacting neurons. J Math Biol. 1977;4(4):303–21.

Amit DJ, Gutfreund H, Sompolinsky H. Information storage in neural networks with low levels of activity. Phys Rev A. 1987;35(5):2293–303.

Dayan P, Willshaw DJ. Optimising synaptic learning rules in linear associative memories. Biol Cybern. 1991;65(4):253–65.

Knoblauch A. Neural associative memory with optimal Bayesian learning. Neural Comput. 2011;23(6):1393–451.

Acknowledgments

This work was supported by national funds through FCT—Fundação para a Ciência e a Tecnologia, under project PEst-OE/EEI/LA0021/2011. J.S. is supported by an FCT doctoral grant (contract SFRH/BD/66398/2009).

Appendix: Cluster Membership Heuristic

After the preprocessing step where elements are clustered, learning of item–category membership associations is performed at every taxonomical rank by an associative net. To determine the cluster \(C_p\) that contains a certain item \({\bf x}\) at a certain taxonomical rank, we could simply refer to our cluster tree.

Alternatively, drawing inspiration from the fixed points of the oscillator model presented in [14], we propose a heuristic for testing whether an element belongs to a cluster. The heuristic guarantees that no false negatives occur (these would impair accuracy). This approach reduces lookup costs in the computational setting and provides a hint of how the model could generalize learning while leveraging a symbolic preprocessing step.

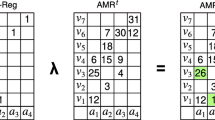

We use a convenient shorthand representation of a cluster, the Boolean sum pattern \({\fancyscript{U}(C_p)}\), previously presented in Eq. 20, which describes the feature-set containing every feature that is present in at least one element of \(C_p\). Once again we turn to our simple fruit taxonomy to illustrate. Table 9 shows these feature-sets \({\fancyscript{U}(C_p)}\) for each non-single-element cluster. For a single-element cluster, this feature-set is given by that very element.

These feature-sets are analogous to the extension of the Boolean OR aggregates in the prime model. Note, however, that unlike the prime model, the content space is not partitioned; that is, the divisions may overlap. Thus, in our model, two contrasting feature-sets (at the same taxonomical level) may have a nonzero intersection. For instance, in our illustrative taxonomy of fruits (refer to Table 9), we have \(C_2 \cap C_3 = \{\text{sweet}, \text{round}\}\).
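The Boolean sum pattern and the possible overlap between contrasting clusters can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the items and features below are invented toy data (they are not the entries of Table 1 or Table 9), and the names `boolean_sum`, `cluster_a`, and `cluster_b` are hypothetical.

```python
# Hypothetical toy items, each described by a set of binary features.
# These are NOT the entries of the paper's fruit tables.
apple = frozenset({"sweet", "round", "red"})
cherry = frozenset({"sweet", "round", "red", "small"})
orange = frozenset({"sweet", "round", "citrus"})
lemon = frozenset({"sour", "citrus"})


def boolean_sum(cluster):
    """Return every feature present in at least one element of the cluster."""
    return frozenset().union(*cluster)


# Two contrasting clusters at the same (assumed) taxonomical level.
cluster_a = [apple, cherry]
cluster_b = [orange, lemon]

union_a = boolean_sum(cluster_a)
union_b = boolean_sum(cluster_b)

# The content space is not partitioned: the two unions may share features.
overlap = union_a & union_b
print(sorted(overlap))
```

For a single-element cluster, `boolean_sum([apple])` simply returns the features of that element, matching the convention stated above.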

Given the requirement that we produce no false negatives when pruning from one network to the next, we defined our pattern-versus-cluster matching heuristic to require only a single shared feature between a pattern \({\bf x}\) and a cluster description \({\fancyscript{U}(C_p)}\) in order to associate the pattern with cluster \(C_p\).
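A minimal sketch of this single-shared-feature test follows, again on invented toy data with hypothetical names (`may_belong`, `boolean_sum`). It assumes every pattern has at least one active feature; under that assumption, a true member always shares a feature with the union of its own cluster, so the test cannot reject it.

```python
# Hypothetical toy items (not the paper's data).
apple = frozenset({"sweet", "round", "red"})
cherry = frozenset({"sweet", "round", "red", "small"})
lemon = frozenset({"sour", "citrus"})


def boolean_sum(cluster):
    """Union of the feature sets of all elements in the cluster."""
    return frozenset().union(*cluster)


def may_belong(pattern, cluster):
    """Heuristic test: True if the pattern shares at least one feature
    with the cluster's Boolean sum pattern."""
    return not pattern.isdisjoint(boolean_sum(cluster))


cluster_a = [apple, cherry]

# No false negatives: every non-empty member matches its own cluster.
members_accepted = all(may_belong(x, cluster_a) for x in cluster_a)

# A pattern sharing no feature with the cluster is safely rejected.
lemon_accepted = may_belong(lemon, cluster_a)

print(members_accepted, lemon_accepted)
```

The test is deliberately permissive: it only rules a cluster out when no feature is shared, which is what keeps pruning safe.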

We exploit the binary structure of the taxonomical tree, always testing cluster pairs. As in a binary search procedure, when we are checking which clusters an element \({\bf x}^{\mu}\) belongs to, if we have determined that at a given level \({\bf x}^{\mu} \in C_a \wedge {\bf x}^{\mu} \notin C_b,\) we may at deeper levels disregard the descendants of \(C_b\).

This approach carries a trade-off: it produces false positives. Consider, for instance, the definition of apple in our fruit taxonomy (refer to Table 1 and the feature-sets of \(C_2\) and \(C_3\) in Table 9). According to our heuristic, we cannot determine which of these clusters contains the pattern (both produce feature matches); however, at deeper levels, this uncertainty of “taxonomical location” decreases.
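The level-by-level descent and its trade-off can be sketched as follows. This is an assumed reading of the procedure rather than the authors' implementation: the `Cluster` class, the function `candidate_clusters`, the node names, and the toy features are all hypothetical, and the tree is a small invented binary taxonomy.

```python
from dataclasses import dataclass, field

# Hypothetical toy items (not the paper's data).
apple = frozenset({"sweet", "round", "red"})
cherry = frozenset({"sweet", "round", "red", "small"})
orange = frozenset({"sweet", "round", "citrus"})
lemon = frozenset({"sour", "citrus"})


@dataclass
class Cluster:
    name: str
    members: list                      # feature sets of the items in the cluster
    children: list = field(default_factory=list)

    def union(self):
        """Boolean sum pattern: features present in at least one member."""
        return frozenset().union(*self.members)


def candidate_clusters(root, pattern):
    """Descend the tree level by level. A child is kept only if it shares
    a feature with the pattern; rejected children are dropped together
    with all of their descendants."""
    levels, frontier = [], [root]
    while frontier:
        kept = [child
                for node in frontier
                for child in node.children
                if pattern & child.union()]
        if kept:
            levels.append([c.name for c in kept])
        frontier = kept
    return levels


# Invented binary taxonomy: root -> (A, B) -> single-item leaves.
tree = Cluster("root", [apple, cherry, orange, lemon], [
    Cluster("A", [apple, cherry], [Cluster("A1", [apple]), Cluster("A2", [cherry])]),
    Cluster("B", [orange, lemon], [Cluster("B1", [orange]), Cluster("B2", [lemon])]),
])

print(candidate_clusters(tree, apple))  # both branches match: false positives
print(candidate_clusters(tree, lemon))  # branch A is pruned at the first level
```

In this toy tree the apple pattern matches both first-level clusters, so neither branch can be discarded, whereas the lemon pattern shares no feature with branch A and that whole subtree is skipped at deeper levels.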

For an illustration of the side effects of this method, consider Table 10, which depicts item–category associations for the fruit taxonomy where cluster codes are approximated with the heuristic described here. Given the compact and dense feature space of this dataset, and the fact that it produces a taxonomy that is not very deep, overlaps in feature-unions are plentiful and the reduction in uncertainty is minimal.

Cite this article

Rendeiro, D., Sacramento, J. & Wichert, A. Taxonomical Associative Memory. Cogn Comput 6, 45–65 (2014). https://doi.org/10.1007/s12559-012-9198-4

DOI: https://doi.org/10.1007/s12559-012-9198-4