Abstract

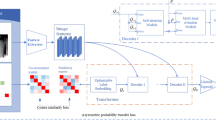

At present, the global COVID-19 situation remains serious, and the Delta variant has already spread worldwide. Chest X-ray is one of the most common radiological examinations for checking catheter placement and diagnosing many lung diseases, and it plays an important role in assisting clinical diagnosis during the outbreak. This study addresses multi-label classification of catheters and thoracic diseases on chest X-ray images using computer vision. To this end, we propose a new variant of the pyramid vision Transformer for multi-label chest X-ray image classification, named MXT, which captures both short- and long-range visual information through self-attention. In particular, downsampling spatial reduction attention reduces the resource consumption of the Transformer. Meanwhile, multi-layer overlap patch (MLOP) embedding is used to tokenize images, and a dynamic position feed forward with zero padding encodes position information instead of adding a positional mask. Furthermore, a class token Transformer block and multi-label attention (MLA) are used to handle multi-label classification more effectively. We evaluate MXT on the Chest X-ray14 dataset, which covers 14 disease pathologies, and on the Catheter dataset, which contains 11 types of catheter placement. Each image is labeled with one or more categories. Compared with several state-of-the-art baselines, MXT yields the highest mean AUC scores: 83.0% on the Chest X-ray14 dataset and 94.6% on the Catheter dataset. The ablation study shows that: (1) the proposed MLOP embedding outperforms the overlap patch (OP) and non-overlap patch (N-OP) embedding layers, improving the mean AUC score by 0.6% and 0.4%, respectively; (2) the dynamic position feed forward can replace the traditional position mask while still learning position information, increasing the mean AUC by 0.6%; (3) the designed MLA improves the mean AUC score by 0.2% and 0.6% over using the class token and over averaging the scores of all tokens, respectively. Comprehensive experiments on the two datasets demonstrate the effectiveness of the proposed method for multi-label chest X-ray image classification. Hence, MXT can assist radiologists in diagnosing lung diseases and checking catheter placement, which can reduce the workload of medical staff.

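Because MXT builds on the pyramid vision Transformer (PVT), the saving from spatial reduction attention can be estimated with a little arithmetic. In the original PVT, keys and values are downsampled by a reduction ratio R along each spatial axis before attention, so the attention map per head shrinks from N × N to N × N/R². The abstract does not state MXT's input size, strides or reduction ratios, so this sketch assumes a 224 × 224 input, a stride-4 first stage and R = 8 (the first-stage ratio used in PVT). It also compares a non-overlapping 4 × 4 patch embedding with an overlapping one, assumed here to be a 7 × 7 convolution with stride 4 and padding 3 as in PVT v2; this is not necessarily MXT's MLOP configuration, and the helper names are hypothetical.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output length of a convolution (standard formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def attention_map_entries(grid_h: int, grid_w: int, reduction_ratio: int) -> int:
    """Entries in one head's attention map when keys/values are downsampled.

    Queries keep every token of the grid_h x grid_w grid; keys/values are
    reduced by reduction_ratio along each axis (PVT-style assumption).
    """
    num_queries = grid_h * grid_w
    num_keys = (grid_h // reduction_ratio) * (grid_w // reduction_ratio)
    return num_queries * num_keys


# Assumed setting: 224x224 input, stage-1 stride 4, reduction ratio R = 8.
non_overlap = conv_output_size(224, kernel=4, stride=4, padding=0)  # 4x4 non-overlapping patches
overlap = conv_output_size(224, kernel=7, stride=4, padding=3)      # 7x7 overlapping patches

full = attention_map_entries(non_overlap, non_overlap, reduction_ratio=1)
reduced = attention_map_entries(non_overlap, non_overlap, reduction_ratio=8)

print(non_overlap, overlap)             # 56 56
print(full, reduced, full // reduced)   # 9834496 153664 64
```

Under these assumptions both embeddings produce a 56 × 56 grid (3,136 tokens), so overlapping patches change which pixels each token sees without lengthening the sequence, while a reduction ratio of 8 shrinks the attention map by a factor of R² = 64.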

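The results above are reported as mean AUC, i.e. one ROC AUC per label (14 for Chest X-ray14, 11 for the Catheter dataset) averaged over labels. The abstract does not spell out the averaging, so the following is a minimal sketch assuming an unweighted (macro) mean of per-label AUCs, with each AUC computed from the Mann-Whitney pairwise-ranking identity. It is not the authors' evaluation code, and the names `roc_auc` and `mean_auc` are hypothetical.

```python
from typing import List, Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC for one label via the Mann-Whitney U statistic.

    AUC is the probability that a randomly chosen positive image scores
    higher than a randomly chosen negative one (ties count as 0.5).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def mean_auc(label_matrix: List[List[int]], score_matrix: List[List[float]]) -> float:
    """Unweighted (macro) mean of per-label AUCs.

    label_matrix[i][c] is 1 if image i carries label c, else 0;
    score_matrix[i][c] is the predicted probability of label c for image i.
    """
    num_labels = len(label_matrix[0])
    aucs = []
    for c in range(num_labels):
        column_labels = [row[c] for row in label_matrix]
        column_scores = [row[c] for row in score_matrix]
        aucs.append(roc_auc(column_labels, column_scores))
    return sum(aucs) / num_labels


# Toy example: 4 images, 2 labels (multi-label: image 2 carries both).
labels = [[1, 0], [0, 1], [1, 1], [0, 0]]
scores = [[0.9, 0.2], [0.3, 0.8], [0.6, 0.4], [0.1, 0.5]]
print(mean_auc(labels, scores))  # 0.875
```

In the toy example, label 0 is ranked perfectly (AUC 1.0) and label 1 has one mis-ordered positive/negative pair out of four (AUC 0.75), giving a mean of 0.875. The pairwise loop costs O(P·N) per label; a sort-based rank computation is preferable at the scale of Chest X-ray14.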

Funding

The authors received financial support from the National Natural Science Foundation of China (82170110), the Shanghai Pujiang Program (20PJ1402400), the Zhongshan Hospital Clinical Research Foundation (2019ZSGG15), and the Science and Technology Commission of Shanghai Municipality (20DZ2254400, 21DZ2200600, 20DZ2261200).

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.

About this article

Cite this article

Jiang, X., Zhu, Y., Cai, G. et al. MXT: A New Variant of Pyramid Vision Transformer for Multi-label Chest X-ray Image Classification. Cogn Comput 14, 1362–1377 (2022). https://doi.org/10.1007/s12559-022-10032-4

DOI: https://doi.org/10.1007/s12559-022-10032-4