Abstract

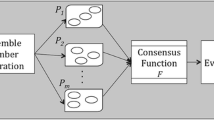

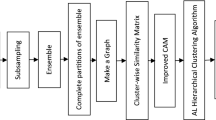

Combining multiple clusterers has emerged as a powerful method for improving both the robustness and the stability of unsupervised classification solutions. In this paper, we propose a framework for cascaded cluster ensembles that consists of two layers of clustering. The first layer focuses on clustering diversity and generates a set of different partitions. In doing so, each sample in the input space is mapped to a labeled sample in a label attribute space whose dimensionality equals the ensemble size. In the second layer, a clustering algorithm serves as the consensus function: the combined partition is obtained by applying this algorithm to the labeled samples rather than to the input samples. As the second-layer algorithm, we use reduced k-means, reduced spectral clustering, or reduced hierarchical linkage. For comparison, nine consensus functions, four of which belong to cascaded cluster ensembles, are used in our experiments. Promising results are obtained on toy data as well as on UCI data sets.
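The two-layer scheme described in the abstract can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation: both layers use plain k-means (Lloyd's algorithm with farthest-first seeding), first-layer diversity comes only from the random seed, and because cluster labels are nominal, the label vectors are one-hot encoded before the second layer. The paper's "reduced" k-means, spectral, and hierarchical variants are not reproduced, and all function names are hypothetical.

```python
import random


def sqdist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, seed, iters=50):
    """Lloyd's k-means with farthest-first seeding; returns one label per point."""
    rng = random.Random(seed)
    # Seeding: a random first center, then repeatedly the point farthest from
    # all chosen centers. The seed is the only source of run-to-run variation.
    centers = [list(points[rng.randrange(len(points))])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(sqdist(p, c) for c in centers))
        centers.append(list(far))
    labels = None
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        new = [min(range(k), key=lambda j: sqdist(p, centers[j])) for p in points]
        if new == labels:
            break  # assignments stopped changing: converged
        labels = new
        # Update step: move each center to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def first_layer(points, k, ensemble_size):
    """Layer 1: run the base clusterer H times; sample i becomes an
    H-dimensional vector of cluster labels (the label attribute space)."""
    runs = [kmeans(points, k, seed=h) for h in range(ensemble_size)]
    return [tuple(run[i] for run in runs) for i in range(len(points))]


def one_hot(label_vectors, k):
    """Assumption: labels are nominal, so each attribute is one-hot encoded.
    Squared distance then equals twice the number of disagreeing clusterers."""
    return [
        tuple(1.0 if lab == c else 0.0 for lab in vec for c in range(k))
        for vec in label_vectors
    ]


def cascaded_ensemble(points, k, ensemble_size, k_final, seed=0):
    """Layer 2: cluster the label vectors instead of the input samples.
    Plain k-means stands in for the paper's reduced variants (assumption)."""
    label_vectors = first_layer(points, k, ensemble_size)
    return kmeans(one_hot(label_vectors, k), k_final, seed=seed)


if __name__ == "__main__":
    gen = random.Random(42)
    blob_a = [(gen.gauss(0, 0.3), gen.gauss(0, 0.3)) for _ in range(20)]
    blob_b = [(gen.gauss(5, 0.3), gen.gauss(5, 0.3)) for _ in range(20)]
    final = cascaded_ensemble(blob_a + blob_b, k=2, ensemble_size=10, k_final=2)
    print(final)
```

On two well-separated blobs, every first-layer run recovers the same split up to a relabeling, so the second layer assigns one label per blob. Replacing the second-layer `kmeans` call with a hierarchical or spectral method only changes `cascaded_ensemble`; the first layer stays as it is.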

Acknowledgments

We thank Dr. Alexander Topchy, who graciously shared the code for comparison purposes. We would like to thank three anonymous reviewers and Editor Xi-Zhao Wang for their valuable comments and suggestions, which have significantly improved this paper. This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 60970067 and 61033013, and by the Natural Science Foundation of Jiangsu Province of China under Grant No. BK2011284.

Cite this article

Zhang, L., Ling, XH., Yang, JW. et al. Cascaded cluster ensembles. Int. J. Mach. Learn. & Cyber. 3, 335–343 (2012). https://doi.org/10.1007/s13042-011-0065-5

DOI: https://doi.org/10.1007/s13042-011-0065-5