Abstract

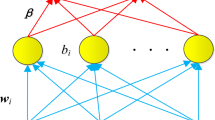

Fuzzy extreme learning machine (F-ELM) is a hybrid model that combines the benefits of a fuzzy system and an extreme learning machine (ELM). F-ELM randomly initializes the weights between the input layer and the hidden layer and analytically determines the weights between the hidden layer and the output layer. Because of this random initialization and the presence of redundant and irrelevant features, the generalization performance of F-ELM may degrade. To address this problem, this paper proposes \(\hbox {F-WSS}^{++}\) (incremental wrapper subset selection algorithm for F-ELM), a classification algorithm for binary and multiclass classification problems. The merits of the proposed algorithm are analyzed theoretically and experimentally. The test results of \(\hbox {F-WSS}^{++}\) are compared with those of \(\hbox {E-WSS}^{++}\) (incremental wrapper subset selection algorithm for ELM) on the clinical dataset. The effectiveness of the \(\hbox {F-WSS}^{++}\) algorithm is verified by statistical methods. It is observed that \(\hbox {F-WSS}^{++}\) can handle the weighted classification problem, the feature subset selection problem and the optimization problem. It also improves classification accuracy by 9–10% while using only 50% of the features.
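The two ideas named above, random input weights with analytically solved output weights and incremental wrapper selection, can be illustrated with a minimal sketch. It uses a plain ELM rather than F-ELM (no fuzzy membership layer) and a generic keep-if-accuracy-improves loop rather than the \(\hbox {F-WSS}^{++}\) procedure itself. All function names, the `tanh` activation, the hidden-layer size, the hold-out evaluation and the feature `order` argument are assumptions made for illustration.

```python
import numpy as np

def train_elm(X, y_onehot, n_hidden, rng):
    """Plain ELM (assumed stand-in for F-ELM): random input weights, analytic output weights."""
    W = rng.normal(size=(X.shape[1], n_hidden))  # input-to-hidden weights: random, never tuned
    b = rng.normal(size=n_hidden)                # hidden biases: random, never tuned
    H = np.tanh(X @ W + b)                       # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ y_onehot          # least-squares hidden-to-output weights
    return W, b, beta

def predict_elm(model, X):
    W, b, beta = model
    return np.argmax(np.tanh(X @ W + b) @ beta, axis=1)

def holdout_accuracy(X_tr, y_tr, X_val, y_val, n_classes, n_hidden=20, seed=0):
    """Train on one split and score on another; the wrapper's evaluation function."""
    rng = np.random.default_rng(seed)
    model = train_elm(X_tr, np.eye(n_classes)[y_tr], n_hidden, rng)
    return float(np.mean(predict_elm(model, X_val) == y_val))

def incremental_wrapper_selection(X_tr, y_tr, X_val, y_val, n_classes, order):
    """Visit features in `order`; keep a feature only if validation accuracy improves."""
    selected, best = [], 0.0
    for f in order:
        candidate = selected + [f]
        acc = holdout_accuracy(X_tr[:, candidate], y_tr,
                               X_val[:, candidate], y_val, n_classes)
        if acc > best:
            selected, best = candidate, acc
    return selected, best

# Synthetic demo data (hypothetical): feature 0 carries the class signal, the rest are noise.
rng = np.random.default_rng(42)
y = rng.integers(0, 2, size=200)
X = rng.normal(size=(200, 6))
X[:, 0] += 2.0 * y
selected, best = incremental_wrapper_selection(
    X[:150], y[:150], X[150:], y[150:], n_classes=2, order=list(range(6)))
print(selected, best)
```

In a wrapper approach the classifier itself scores each candidate subset, which is why the loop retrains the ELM for every feature it tries. The visiting order is left to the caller here; it could come from a filter-style ranking.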

Cite this article

Kale, A., Sonavane, S. F-WSS\(^{++}\): incremental wrapper subset selection algorithm for fuzzy extreme learning machine. Int. J. Mach. Learn. & Cyber. 10, 1821–1832 (2019). https://doi.org/10.1007/s13042-018-0859-9

DOI: https://doi.org/10.1007/s13042-018-0859-9