Abstract

Error correction is an important problem in machine learning. However, existing methods focus only on data recovery and ignore compact data representation. In this paper, we propose a flexible robust principal component analysis (FRPCA) method in which two different matrices are used to perform error correction, and a compact representation of the data can be obtained from one of these matrices. Moreover, FRPCA selects the most relevant features so that the recovered data faithfully preserve the semantics of the original data. Learning is performed by solving a nuclear-norm regularized minimization problem, which is convex and can be solved in polynomial time. Experiments were conducted on image sequences containing targets of interest in a variety of environments, e.g., offices and campuses. We also compare our method with existing methods in recovering face images from corruptions. Experimental results show that the proposed method achieves better performance and is more practical than existing approaches.
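
As background for the nuclear-norm formulation mentioned above, the classical convex robust PCA program (principal component pursuit) can be written as follows. This is a reference sketch only, not the FRPCA objective, which the abstract does not state. The symbols \(X\) (observed data matrix), \(L\) (low-rank component), \(S\) (sparse error component), and \(\lambda\) (trade-off weight) are notation assumed here for illustration.

```latex
\min_{L,\,S} \; \|L\|_{*} + \lambda \|S\|_{1}
\quad \text{subject to} \quad X = L + S,
\qquad \text{where } \|L\|_{*} = \sum_{i} \sigma_{i}(L)
```

Here \(\sigma_{i}(L)\) denotes the \(i\)-th singular value of \(L\), and \(\|S\|_{1}\) is the sum of the absolute values of the entries of \(S\). The nuclear norm acts as a convex surrogate for matrix rank, which is what makes problems of this type convex.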

References

Costeira JP, Kanade T (1998) A multibody factorization method for independently moving objects. Int J Comput Vis 29(3):159–179

Belhumeur P, Hespanha J, Kriegman D (2002) Eigenfaces versus fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

De la Torre F, Black MJ (2001) Robust principal component analysis for computer vision. In: Proceedings 8th IEEE international conference on computer vision, ICCV 2001. IEEE, 2001, 1, Barcelona, Spain, pp 362–369

Eldar YC, Mishali M (2009) Robust recovery of signals from a structured union of subspaces. IEEE Trans Inf Theory 55(11):5302–5316

Elhamifar E, Vidal R (2009) Sparse subspace clustering. In: Proceedings of the IEEE conference on computer vision and pattern recoginition, vol 2, pp 2790–2797

Liu G, Lin Z, Yu Y (2010) Robust subspace segmentation by low-rank representation. In: Proceedings of the international conference on machine learning, pp 1–8

Li S, Fu Y (2014) Robust subspace discovery through supervised low-rank constraints. In: Proceedings of the SIAM international conference on data mining, pp 163–171

Guyon I, Elisseeff A (2003) An introduction to variable and feature selection. J Mach Learn Res 3:1157–1182

Kim E, Lee M, Oh S (2015) Elastic-net regularization of singular values for robust subspace learning. In: Proceedings of the IEEE conference of computer vision and pattern recognition, pp 915–923

Wen J et al (2019) Robust sparse linear discriminant analysis. IEEE Trans Circ Syst Video Technol 29(2):390–403

Wen J, Zhong Z, Zhang Z, Fei L, Lai Z, Chen R (2018) Adaptive locality preserving regression. IEEE Trans Circuits Syst Video Technol. https://doi.org/10.1109/tcsvt.2018.2889727

Fei L et al (2017) Low rank representation with adaptive distance penalty for semi-supervised subspace classification. Pattern Recogn 67(2017):252–262

Fang X, Han N, Jigang W, Xu Y, Yang J, Wong WK, Li X (2018) Approximate low-rank projection learning for feature extraction. IEEE Trans Neural Netw Learn Syst 29(11):5228–5241

Wen J, Han N, Fang X, Fei L, Yan K, Zhan S (2019) Low-rank preserving projection via graph regularized reconstruction. IEEE Trans Cybern 49(4):1279–1291

Pearson K (1901) On lines and planes of closest fit to systems of points in space. Philos Magn 2(6):559–572

Belhumeur PN, Hespanha JP, Kriegman DJ (1997) Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

He X, Niyogi P (2004) Locality preserving projections. In: Advances in neural information processing systems pp 153–160

He X et al (2005) Neighborhood preserving embedding. Computer Vision, 2005. ICCV 2005. In: 10th IEEE international conference on Vol 2, IEEE

Yan S et al (2007) Graph embedding and extensions: a general framework for dimensionality reduction. IEEE Trans Pattern Anal Mach Intell 29(1):40–51

Zou H, Hastie T, Tibshirani R (2006) Sparse principal component analysis. J Comput Graph Stat 15(2):265–286

Lai Z, Yong X, Yang J, Shen L, Zhang D (2017) Rotational invariant dimensionality reduction algorithms. IEEE Trans Cybern 47(11):3733–3746

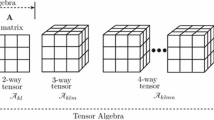

Lai Z, Yong X, Yang J, Tang J, Zhang D (2013) Sparse tensor discriminant analysis. IEEE Trans Image Process 22(10):3904–3915

Cai D, He X, Han J (2007) Spectral regression: a unified approach for sparse subspace learning. In: 7th IEEE international conference on data mining (ICDM 2007). IEEE

Candès EJ et al (2011) Robust principal component analysis? J ACM (JACM) 58(3):11

Maronna RA (1976) Robust M-estimators of multivariate location and scatter. Ann Stat 4(1):51–67

Devlin SJ, Gnanadesikan R, Kettenring JR (1981) Robust estimation of dispersion matrices and principal components. J Am Stat Assoc 76(374):354–362

Li G, Chen Z (1985) Projection-pursuit approach to robust dispersion matrices and principal components: primary theory and Monte Carlo. J Am Stat Assoc 80(391):759–766

Torre F, Black M (2001) Robust principal component analysis for computer vision. In: Proceedings of the international conference computer vision, Vancouver, BC, pp 362–369

Wright J, Ganesh A, Rao S, Peng Y, Ma Y (2009) Robust principal component analysis: exact recovery of corrupted low-rank matrices via convex optimization. In: Proceedings of neural information processing system, pp 1–9

Xu H, Caramanis C, Sanghavi S (2010) Robust PCA via outlier pursuit. In: Proceedings of advances in neural information processing system, pp 1–30

Ma Y, Derksen H, Hong W, Wright J (2007) Segmentation of multivariate mixed data via lossy data coding and compression. IEEE Trans Pattern Anal Mach Intell 29(9):1546–1562

Bao B-K et al (2012) Inductive robust principal component analysis. IEEE Trans Image Process 21(8):3794–3800

Chung FRK (1997) Spectral graph theory. AMS, Providence, RI

Donoho DL (2006) For most large underdetermined systems of equations, the minimal \(\ell ^{1}\)-norm near-solution approximates the sparsest nearsolution. Commun Pure Appl Math 59(7):907–934

Lin Z, Chen M, Wu L, Ma Y (2009) The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. Department of Electrical and Computer Engineering, UIUC, Urbana, Technical Report, UILU-ENG-09-2215

Cai X, Ding C, Nie F, Huang H (2013) The equivalent of low-rank linear regressions and linear discriminant analysis based regressions. In: Proceedings of the 19th ACM SIGKDD conference knowledge discovery data mining, pp 1124–1132

Fang X, Xu Y, Li X, Lai Z, Wong WK (2016) Robust semi-supervised subspace clustering via non-negative low-rank representation. IEEE Trans Cybern 46(8):1828–1838

Liu G, Lin Z, Yan S, Sun J, Yu Y, Ma Y (2013) Robust recovery of subspace structures by low-rank representation. IEEE Trans Pattern Anal Mach Intell 35(1):171–184

Acknowledgements

This work was supported in part by the National Key R&D Program of China under Grant No. 2018YFB1003201, the National Natural Science Foundation of China under Grants 61672171 and 61772141, the Guangdong Natural Science Foundation under Grant 2018B030311007, and the Major Project of the Educational Commission of Guangdong Province under Grant 2016KZDXM052. The corresponding author is Jigang Wu (asjgwucn@outlook.com).

About this article

Cite this article

He, Z., Wu, J. & Han, N. Flexible robust principal component analysis. Int. J. Mach. Learn. & Cyber. 11, 603–613 (2020). https://doi.org/10.1007/s13042-019-00999-2

DOI: https://doi.org/10.1007/s13042-019-00999-2