Abstract

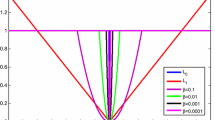

\(l_{q}\) (\(0 < q < 1\)) regularization is a dominant strategy for matrix completion problems. The main goal of nonconvex \(l_{q}\) regularization based algorithms is to find a low-rank solution. Unfortunately, most existing algorithms rely on a full singular value decomposition (SVD) and thus become inefficient for large-scale matrix completion problems. To alleviate this limitation, we propose an accelerated inexact algorithm for such problems. The key idea is to employ the closed-form q-thresholding (\(q = 1/2, 2/3\)) operator to approximate the rank of a matrix. The power method and the special "sparse plus low-rank" structure of the matrix iterates are exploited to compute the SVD efficiently. In addition, we employ Nesterov's accelerated gradient method and a continuation technique to further speed up the convergence of the proposed algorithm. A convergence analysis shows that the sequence \(\{X_{t}\}\) generated by the proposed algorithm is bounded and has at least one accumulation point. Extensive experiments have been conducted to study its recovery performance on synthetic data, image recovery and recommendation problems. The results show that the proposed algorithm achieves comparable recovery performance while being faster and more efficient than state-of-the-art methods.
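
To make the closed-form thresholding idea concrete, the following is a minimal sketch of the \(q = 1/2\) case only, not the algorithm proposed in the paper. It assumes the commonly stated scalar convention \(\min_{x} (x - t)^{2} + \lambda |x|^{1/2}\) for the half-thresholding formula; the paper's own scaling of \(\lambda\) and its step size may differ. The function names `half_threshold` and `singular_value_half_threshold` are hypothetical, and a full NumPy SVD stands in for the power-method-based approximate SVD mentioned in the abstract.

```python
import numpy as np


def half_threshold(t, lam):
    """Closed-form minimizer of (x - t)^2 + lam * |x|^(1/2), elementwise.

    Assumed convention: no 1/2 factor on the quadratic term.
    """
    t = np.atleast_1d(np.asarray(t, dtype=float))
    out = np.zeros_like(t)
    # Inputs at or below this magnitude are set exactly to zero.
    thresh = (54.0 ** (1.0 / 3.0) / 4.0) * lam ** (2.0 / 3.0)
    mask = np.abs(t) > thresh
    tm = t[mask]
    # The arccos argument stays below 1 whenever |t| exceeds the threshold.
    phi = np.arccos((lam / 8.0) * (np.abs(tm) / 3.0) ** (-1.5))
    out[mask] = (2.0 / 3.0) * tm * (
        1.0 + np.cos(2.0 * np.pi / 3.0 - (2.0 / 3.0) * phi)
    )
    return out


def singular_value_half_threshold(Z, lam):
    """Apply half-thresholding to the singular values of Z.

    Uses a full SVD for clarity; this is the expensive step that an
    approximate (e.g. power-method) SVD is meant to replace.
    """
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    s_new = half_threshold(s, lam)
    return (U * s_new) @ Vt


if __name__ == "__main__":
    Z = np.diag([3.0, 0.5])
    X = singular_value_half_threshold(Z, lam=1.0)
    # The small singular value is zeroed, so the result has lower rank.
    print(np.linalg.matrix_rank(Z), np.linalg.matrix_rank(X))
```

Small singular values are set exactly to zero while large ones are only mildly shrunk, which is why the operator acts as a rank surrogate.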

Acknowledgements

This work is supported in part by the Natural Science Foundation of China under Grant 61273020, and in part by the Fundamental Research Funds for the Central Universities under Grant XDJK2019B063.

Cite this article

Wang, Z., Gao, C., Luo, X. et al. Accelerated inexact matrix completion algorithm via closed-form q-thresholding \((q = 1/2, 2/3)\) operator. Int. J. Mach. Learn. & Cyber. 11, 2327–2339 (2020). https://doi.org/10.1007/s13042-020-01121-7

DOI: https://doi.org/10.1007/s13042-020-01121-7