Abstract

Multivariate time series analysis is a vital but challenging task, with multidisciplinary applicability, tackling the characterization of multiple interconnected variables over time and their dependencies. Traditional methodologies often adapt univariate approaches or rely on assumptions specific to certain domains or problems, presenting limitations. A recent promising alternative is to map multivariate time series into high-level network structures such as multiplex networks, with past work relying on connecting successive time series components with interconnections between contemporary timestamps. In this work, we first define a novel cross-horizontal visibility mapping between lagged timestamps of different time series and then introduce the concept of multilayer horizontal visibility graphs. This allows describing cross-dimension dependencies via inter-layer edges, leveraging the entire structure of multilayer networks. To this end, a novel parameter-free topological measure is proposed and common measures are extended for the multilayer setting. Our approach is general and applicable to any kind of multivariate time series data. We provide an extensive experimental evaluation with both synthetic and real-world datasets. We first explore the proposed methodology and the data properties highlighted by each measure, showing that inter-layer edges based on cross-horizontal visibility preserve more information than previous mappings, while also complementing the information captured by commonly used intra-layer edges. We then illustrate the applicability and validity of our approach in multivariate time series mining tasks, showcasing its potential for enhanced data analysis and insights.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recent advancements in technology have led to the widespread availability of substantial amounts of high-dimensional time-indexed data. To effectively analyze such multidimensional time-indexed data, commonly referred to as multivariate time series, appropriate methodological and computational tools are essential. These data types are prevalent across diverse domains, including climate studies, health monitoring, and financial data analysis. Multivariate time series are characterized by serial correlation and cross-sectional dependencies, often termed the "curse of dimensionality" (Wei, 2019).

To address the challenge of analyzing high-dimensional time-indexed data, feature-based approaches have been proposed. Traditionally, time series features rely on statistics and models that may involve pre-processing and assumptions not universally satisfied. An alternative methodology involves mapping time series into complex networks (Silva et al., 2021) to extract topological features for mining tasks and forecasting (Zhang et al., 2018; Li and Liu, 2021; Silva et al., 2022). Network science, which studies information extraction from complex networks, offers a rich set of topological graph measurements (Albert and Barabási, 2002; Costa et al., 2007; Peach et al., 2021).

Univariate time series can typically be mapped into single-layer networks utilizing concepts such as visibility, transition probability, and proximity (Zou et al., 2019; Silva et al., 2021). On the other hand, when dealing with multivariate time series, one may opt for mapping these into either single-layer or multiple-layer networks. In the former, nodes symbolize the individual time series components, and the edges represent relationships between the latter, computed using statistical methods or models. However, this approach often results in the loss of vital information regarding the dynamics of each time series component, including serial correlation.

Recognizing this limitation, several authors proposed mappings that represent multivariate time series as multiplex networks, aiming to preserve both the temporal dynamics and cross-sectional information within the data (Lacasa et al., 2015; Sannino et al., 2017; Eroglu et al., 2018; Silva et al., 2021). In these multiplex networks, each univariate time series component is allocated to a distinct layer, employing a univariate time series mapping where each node represents a timestamp or pattern. Layers are interconnected through common nodes in successive layers. Nevertheless, this mapping process invariably leads to the loss of directed lagged cross-correlations, which are of considerable importance in most multivariate scenarios.

To overcome the limitations mentioned above, we propose a new mapping method to represent multivariate time series as multilayer complex networks. Multilayer networks, with their capacity for internal connections within the same layer and external connections between different layers, offer a comprehensive and flexible data representation structure (Kivelä et al., 2014). The new mapping relies on a new horizontal visibility concept, Cross-Horizontal Visibility, designed to capture cross-dependencies between pairs of time series components. Extending previous work on multiplex visibility graphs (Lacasa et al., 2015; Sannino et al., 2017), we incorporate inter-layer edges based on cross-horizontal visibility. These connections capture dependencies between different (lagged) timestamps of various variables, resulting in what we term Multilayer Horizontal Visibility Graphs.

In addition to accurate analysis and understanding of data, temporal data statistics play a crucial role in many time series analysis applications (Fulcher and Jones, 2014; Hyndman et al., 2015; Kang et al., 2020), particularly for dimensionality reduction. Identifying a collection of features that encapsulates the main properties of such data is a pivotal task, which typically entails conventional statistical methods and non-linear measures (Montero-Manso et al., 2020). While univariate time series data offers a broad range of descriptive features, including linear statistics, nonlinear dynamics, symbolization, and innovative topological features, features for multivariate time series are currently more limited and pose a challenge. To address this gap, we propose a set of global topological multilayer network features as a novel set for multivariate time series analysis. These features include intra-layer, inter-layer, all-layer topological features, along with relational features. Within the category of relational features, which pertains to topological features related to components of the network, we introduce a new feature designed to capture the relationship between intra-layer and inter-layer connections.

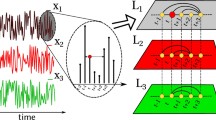

The methodology proposed in this work and represented in Fig. 1, introduces a novel algorithmic method to transform multivariate time series data into multilayer networks, enabling the extraction of features from the resulting networks for comprehensive multivariate time series data analysis. This non-parametric methodology does not require data preprocessing and is not tailored to a specific time series mining problem or dataset, ensuring its versatility across various applications. To comprehensively analyze, assess, and test the framework introduced in this work, we leverage synthetic multivariate time series generated from a selected set of multivariate time series models. Additionally, a diverse set of real-world multivariate time series, with varying characteristics and dimensionality properties, is employed in our evaluation.

Schematic diagram of the network-based features approach to time series mining tasks. The first column displays a set of synthetic multivariate time series, the second column the corresponding multiplex horizontal visibility graphs (based on Lacasa et al., 2015) and the multilayer horizontal visibility graphs proposed in this work, the third column shows the set of topological features extracted from the two types of multiple-layer networks, and the last column a principal component analysis derived from the extracted topological feature sets. The description of the notation corresponding to the topological features presented in the third column is given in Sect. 4

1.1 Contributions

The main contributions of this work are the following:

-

The Cross-Horizontal Visibility concept and mapping. We propose a novel visibility concept that extends horizontal visibility defined within a time series to a bivariate setting. Based on cross-horizontal visibility we define a mapping for multivariate time series into multilayer visibility networks, by introducing inter-layer edges between different nodes of distinct layers. Multiplex visibility networks, then, become a particular case of the multilayer visibility networks. This novelty, to the best of our knowledge, has not been previously explored in the literature for the analysis of multivariate time series data Footnote 1.

-

A new set of multidimensional features. We introduce a new topological feature tailored for multilayer networks and curate a distinct set of multilayer network topological features for analyzing the proposed mapping method and reducing the dimensionality of multivariate time series data. We present a diverse set of topological features of multilayer networks, leveraging their intra- and inter-layer connections within the context of multivariate time series analysis.

-

A comprehensive exploratory and empirical analysis. We perform a thorough exploratory and empirical analysis of multidimensional topological features using synthetic datasets that encompass multiple correlation scenarios (both serial and crossed).

-

Application of multidimensional time series features to mining tasks. While the proposed multivariate time series mapping and the underlying features are not explicitly tailored for specific mining tasks, we demonstrate their application through clustering and classification tasks on a diverse set of synthetic and real-world datasets. It is important to note that this work primarily aims to introduce our proposed method, highlighting its versatility and ease of application. The emphasis is on presenting its practical utility rather than engaging in direct competition or comparison with other potential approaches.

1.2 Organization

We have structured this document as follows. Section 2 introduces fundamental concepts of multivariate time series and multilayer networks, establishing the notation for the paper. This section also provides background information on mapping methods crucial for comprehending the proposed methodology. Section 3 presents the concept of cross-horizontal visibility between time series components and introduces our novel multivariate time series mapping. In Sect. 4 we present the set of topological features extended to multilayer networks and, consequently, to multivariate time series data. This section also unveils a new topological feature designed specifically for multilayer networks. Section 5 conducts a comprehensive study of the proposed mapping method through the analysis of the corresponding feature set, providing insights into the properties of the multivariate time series. Additionally, this section explores multivariate time series mining tasks across synthetic and real-world datasets of various dimensions and types. Finally, Sect. 6 presents the conclusions drawn from our research, along with additional considerations, and outlines potential avenues for future work and exploration.

2 Background

This section introduces fundamental concepts on multivariate time series and multilayer networks, thus establishing the notation that will be used in the paper.

2.1 Multivariate time series

An Univariate Time Series (UTS) is a sequence of (scalar) observations indexed by time t, typically denoted as \(\{{Y}_t\}_{t=1}^{T}\). Unlike a random sample, such observations are ordered in time and usually exhibit serial correlation that must be accounted for in the analysis. In instances where, at each time t, a vector of m observations is obtained, expressed as \(\varvec{Y}_t = [Y_{1,t}, Y_{2,t}, \ldots , Y_{m,t}]^{\prime }\), where \(\prime\) represents the transpose, the resulting dataset \(\varvec{Y}=\{\varvec{Y}_t\}_{t=1}^{T}\) is termed a Multivariate Time Series (MTS). Henceforward, the UTS components of the MTS \(\varvec{Y}\) are denoted by \(\varvec{Y}^{\alpha }=[Y_{\alpha ,1}, Y_{\alpha ,2}, \ldots , Y_{\alpha , T}]\), \(\alpha =1, \ldots ,m\) and thus we can denote the MTS by its components, \(\varvec{Y}=\{Y^{\alpha }\}_{\alpha =1}^{m}\). MTS data present not only serial correlation within each component, \(\varvec{Y}^{\alpha }\), but also a correlation between the different UTSs, \(\varvec{Y}^{\alpha }\) and \(\varvec{Y}^{\beta }\) with \(\alpha \ne \beta\), both contemporaneous and lagged correlation. Thus, analyzing MTS depends on key dependence measures such as the autocorrelation function (ACF), which measures the linear predictability of a UTS,

and the cross-correlation function (CCF), which measures the correlation between any two components of the MTS, \(\alpha\) and \(\beta\), say, at times s and t,

Time series analysis encompasses a range of methodologies developed to systematically address statistical challenges posed by serial correlation. A multitude of statistical models, both linear and non-linear, are available in the literature for effectively characterizing the dynamics of UTS (Shumway and Stoffer, 2017). While the theoretical framework for UTS naturally extends to the multivariate case, incorporating concepts such as mean, covariance, ACF, and CCF, new complexities emerge. MTS analysis demands specialized tools, methods, and models capable of extracting valuable insights from multiple measurements exhibiting both temporal and cross-sectional correlations. This requires the development of innovative approaches to effectively capture the intricacies inherent in multivariate time series data.

2.2 Multilayer networks

A graph (or network) G, is a mathematical structure defined by a pair (V, E), where V represents the set of nodes and E the set of edges (connections) between pairs of nodes. Two nodes \(v_i\) and \(v_j\) are called neighbors if they are connected, \((v_i,v_j) \in E\). If there is no direction from a source node to a target node the edges are undirected: \((v_i,v_j) \in E\) implies that \((v_j,v_i) \in E\). A graph can be represented by an adjacency matrix \(\varvec{A}\), where \(A_{i,j}\) is 1 when \((v_i,v_j) \in E\) and is 0 otherwise.

A Multilayer Network (MNet) is a complete and general structure suitable for modeling multiple complex systems through their interactions, intra- and inter-systems. An MNet is generally defined as a quadruplet \(M = (V_M, E_M, V, \varvec{L})\) where V and \(\varvec{L}\) represent the set of entities and the set of layers of M, respectively, and \(V_M\) and \(E_M\) represent the global sets of nodes and edges, respectively. The \(V_M \subseteq V \times L_1 \times \ldots \times L_m\), where \(L_{\alpha } \in \varvec{L}\) is an elementary layer, is a set of node-layer combinations in which a node is present in the corresponding layer \(L_{\alpha }\). The \(E_M \subseteq V_M \times V_M\) is the set of edges that contain the pairs of possible combinations of nodes and elementary layers (Kivelä et al., 2014). We denominate as intra-layer edges, the connections between nodes of the same layer, \((v_i^{\alpha }, v_j^{\alpha })\), and inter-layer edges the connections between nodes of different layers, \((v_i^{\alpha }, v_j^{\beta })\) with \(\alpha \ne \beta\).

Two specific instances of multilayer networks include the monoplex network, where \(m=1\) and M reduces to a single-layer network denoted as G and the multiplex network, where M is a sequence of m graphs, \(\{G^\alpha \}_{\alpha =1}^m = \{(V^\alpha , E^\alpha )\}_{\alpha =1}^m\). In the case of multiplex networks, these graphs typically share a common set of nodes across all elementary layers, and inter-layer edges exclusively connect corresponding nodes across all successive layers, i.e., connecting \((v_i^{\alpha }, v_i^{\beta })\) where \(\alpha \ne \beta\) (Boccaletti et al., 2014). Figure 2 illustrates the representation of both simple multilayer and multiplex networks.

Illustrative example of two toy multilayer networks with five entities, \(V = \{1,2,3,4,5\}\), and two layers \(\varvec{L} = \{L_1, L_2\}\). (a) Represents a toy multilayer network and (b) a toy multiplex network. Solid lines represent the intra-layer edges and dashed lines represent the inter-layer edges. Source: Modified from Silva et al. (2021)

A node-aligned MNetFootnote 2 has an associated adjacency tensor of order 4, \(\pmb {\mathcal {A}}\), with tensor elements \(\mathcal {A}_{i,j,\alpha ,\beta } = 1\) if \((v_i^{\alpha }, v_j^{\beta }) \in E_M\) and 0 otherwise (Kivelä et al., 2014). If the MNet is not node-aligned, we use empty nodes to fill the tensor structure. An alternative representation involves flattening \(\pmb {\mathcal {A}}\) into a supra-adjacency matrix, \(\varvec{A}\). In this matrix, intra-layer edges correspond to diagonal element blocks, while inter-layer edges are associated with off-diagonal element blocks. Figure 3 visually demonstrates the supra-adjacency matrices corresponding to the networks depicted in Fig. 2. From these element blocks, we can deduce three types of subgraphs:

-

intra-layer graphs, \(G^{\alpha }\), represented by the square matrices of order \(|V^{\alpha }|\) formed by the diagonal element blocks (intra-layer edges, \(A_{i,j}^{\alpha }\)), i.e., \(\left[ {\begin{smallmatrix} \varvec{A}^{\alpha } & \varvec{0}\\ \varvec{0} & \varvec{0} & \end{smallmatrix}} \right]\).

-

inter-layer graphs, \(G^{\alpha ,\beta }\), represented by the square matrices of order \(|V^{\alpha }|+|V^{\beta }|\) constructed from off-diagonal element blocks (inter-layer edges, \(A_{i,j}^{\alpha ,\beta }\) and \(A_{j,i}^{\beta ,\alpha }\), and no intra-layer edges, \(A_{i,j}^{\alpha } = 0\) and \(A_{i,j}^{\beta } = 0\))Footnote 3, i.e., \(\left[ {\begin{smallmatrix} \varvec{0} & \varvec{A}^{\alpha ,\beta } \\ \varvec{A}^{\beta ,\alpha } & \varvec{0} \end{smallmatrix}} \right]\).

-

all-layer graphs, \(G^{\alpha , \beta }_{all}\), represented by the square matrices of size \(|V^{\alpha }|+|V^{\beta }|\) constructed by both on and off-diagonal element blocks (intra-layer edges, \(A_{i,j}^{\alpha }\) and \(A_{i,j}^{\beta }\), and inter-layer edges, \(A_{i,j}^{\alpha ,\beta }\) and \(A_{j,i}^{\beta ,\alpha }\)), i.e., \(\left[ {\begin{smallmatrix} \varvec{A}^{\alpha } & \varvec{A}^{\alpha ,\beta } \\ \varvec{A}^{\beta ,\alpha } & \varvec{A}^{\beta } \end{smallmatrix}} \right]\).

Network science stands as a valuable tool for addressing diverse problems in various scientific disciplines (Vespignani, 2018). A plethora of topological, statistical, spectral, and combinatorial metrics, extracting information from networks, is available in the literature (Albert and Barabási, 2002; Barabási, 2016; Costa et al., 2007; Peach et al., 2021; Silva et al., 2022). These metrics can be categorized into global, local, and "intermediate" features. The first group quantifies properties involving all network elements, the second focuses on properties over a specific node or edge, and the last pertains to properties that involve subsets of the network, such as subgraphs. In multilayer contexts, these metrics find natural extensions to MNets. Most common topological metrics can be straightforwardly extended to intra-layer metrics by computation over intra-layer edges. Furthermore, these can also be applied to the entire MNet by computation over both intra-layer and inter-layer edges (Kivelä et al., 2014; Huang et al., 2021). Other approaches rely on measurements and properties inspired in tensor analysis literature (Kivelä et al., 2014).

2.3 Mapping time series into networks via visibility concepts

Over the past two decades, numerous approaches for time series analysis grounded in network principles have emerged. These methodologies involve the transformation of both univariate and multivariate time series into the network domain, manifested as either single-layer or multiple-layer networks (Silva et al., 2021). The mappings presented in the literature are essentially based on concepts of visibility, transition probability, proximity, time series models, and statistics (Zou et al., 2019; Silva et al., 2021). In this section, we review the concepts of horizontal visibility graphs and multiplex visibility graphs essential for the discussion that follows.

The Horizontal Visibility Graph (HVG) (Luque et al., 2009) is a graph \(G=(V,E)\) associated with a Univariate Time Series (UTS) \(\{Y_t\}_{t=1}^T\), where \(V=\{v_t, t=1,\ldots ,T\}\) with \(v_t\) representing the timestamp t. For all \(i,j = 1 \ldots T, i \ne j, (v_i, v_j) \in E\) if, for all k with \(i< k < j\) the following condition on the UTS observations holds:

In particular, when \(j = i+1, (v_{i}, v_{i+1}) \in E\) meaning that consecutive nodes are always connected.

Intuitively, the Horizontal Visibility Graph (HVG) algorithm interprets each observation, denoted as \(Y_t\), in a UTS as a vertical bar laid on a landscape, connecting different bars based on a visibility criterion. The height of each bar corresponds to its numerical value (at time t), and the visibility between two bars depends on their heights, determining mutual visibility. In an HVG, each timestamp, t, maps to a node, \(v_t\), and edges \((v_i, v_j)\) for \(i,j = 1 \ldots T, i \ne j\) are established if there is a horizontal visibility line between the corresponding data bars that is not intercepted by another data bar between the corresponding timestamps. Fig. 4 illustrates the HVG method.

Illustrative example of the Horizontal Visibility Graph (HVG) algorithm. (a) Represents a toy time series and corresponding visibility between data bars (observations), where solid blue lines represent the horizontal visibility lines according to the HVG method. (b) Represents a network generated by the corresponding mapping. Source: Adapted from Silva et al. (2021)

Some properties of HVG (Luque et al., 2009):

-

Connected: HVGs are always connected graphs, as each node \(v_i\) has horizontal visibility to its nearest neighbors \(v_{i-1}\) and \(v_{i+1}\).

-

Undirected/Directed: HVGs edges are undirected unless we consider the direction of the time axis or another directional concept.

-

Invariant under affine transformations: Each transformation \(X_t= aY_t + b\), where \(a \in \mathbb {R}, b \in \mathbb {R}\), and \(t = 1, \ldots , T\), leads to the same HVG.

The horizontal visibility method represents global and local topological characteristics of time series data in the graph. It is easy to implement, computationally fast, and parameter-free. In particular, there exists a set of mathematically well-defined relationships (Luque et al., 2009) between the properties of HVGs and the underlying time series characteristics (see Silva et al., 2021 for more details).

The Multiplex Visibility Graph (MVG) algorithm was proposed by Lacasa et al. (2015) as an extension of the horizontal visibility mapping for MTS analysis. Building upon the definition of MNet given in the previous section, formally, a MVG of m layers, M, is constructed, where the layer set \(\{L_{\alpha }\}_{\alpha = 1}^m\) corresponds to the HVGs, \(\{G^\alpha \}_{\alpha = 1}^m\), associated with the time series components \(\{Y^{\alpha }\}_{\alpha =1}^{m}\). The adjacency matrix vector \(\varvec{A}_M\), represents M with elements being the adjacency matrices of each layer, \(\varvec{A}_M = \{\varvec{A}^{1}, \ldots , \varvec{A}^{m}\}\) where \(A^{\alpha }_{i,j} = 1\) indicates that nodes \(v_i^{\alpha }\) and \(v_j^{\alpha }\) are connected in layer \(L_{\alpha }\) and 0 otherwise. Figure 5 illustrates this method.

Illustrative example of the Multiplex Horizontal Visibility Graph (HVG) algorithm: (a) Represents a toy multivariate time series with three components, \(\varvec{Y} = \{\varvec{Y}^{1}, \varvec{Y}^{2}, \varvec{Y}^{3}\}\), and (b) the resulting multiplex network with three layers generated by the Multiplex HVG mapping. Source: Adapted from Silva et al. (2021)

3 MHVG: a new multivariate time series mapping

Visibility mapping methods have demonstrated great promise in capturing time series properties that encompass both local and global characteristics of the data (Silva et al., 2021). For example, in Lacasa et al. (2008), the authors employ Natural Visibility Graph (NVG)Footnote 4 and demonstrate that periodic time series are mapped onto regular graphs, random time series are mapped onto random graphs, and fractal time series onto scale-free graphs. Notably, HVGs predominantly preserve local data information by connecting the closest timestamps due to their horizontal line visibility criterion. In our previous works (Silva, 2018; Silva et al., 2022), we emphasized crucial characteristics of time series data reflected in the topological features of HVGs. For instance, we correlated clustering-based measures with autocorrelation values in autoregressive data and with properties of discrete time series. Additionally, we observed that time series based on states and periodic data are mapped into HVGs exhibiting a topological structure that emphasizes communities, as reflected in relevant measures such as communities and centrality measures.

Approaches based on networks, particularly leveraging their topological features, have proven not only relevant but also complementary to conventional time series analysis. Various mining tasks for UTS and, more recently, for MTS have emerged, yielding promising results (Fulcher and Jones, 2017; Li and Liu, 2021; Silva et al., 2022). Recent literature indicates that multiplex versions of visibility graphs when applied to MTS data, can achieve enhanced accuracy and more promising outcomes compared to traditional time series mappings (Lacasa et al., 2015; Sannino et al., 2017; Eroglu et al., 2018; Silva et al., 2021).

However, to our knowledge, the aforementioned results do not directly incorporate inter-layer edges. The analysis involves examining the networks through topological measurements on the monoplex networks resulting from flattening approaches or using similarity measures on the individual layers of multiplex networks. We argue that high-level network structures can retain a greater amount of data information post-mapping functions. This structure also allows us to expand the range of resources available in the literature and explore additional network components, such as inter-layer edges, thereby broadening the exploration of multidimensional data.

This section introduces a novel visibility algorithm to map an MTS into a Multilayer Horizontal Visibility Graph (MHVG). The algorithm is based on a new visibility concept called cross-horizontal visibility, extending the traditional horizontal visibility.

3.1 Cross-horizontal visibility

Consider two time series \(\varvec{Z}^{\alpha }=\left( Z_{\alpha ,1}, \ldots , Z_{\alpha ,T}\right)\) and \(\varvec{Z}^{\beta }=(Z_{\beta ,1}, \ldots , Z_{\beta ,T})\) on the same scale. Two arbitrary data values \(Z_{\alpha ,i}\) and \(Z_{\beta ,j}\) are said to have cross-horizontal visibility, Cross-HV, if for all timestamps k with \(i< k < j\) and \(i, j = 1, \ldots , T, i \ne j\), the following condition is satisfied:

In the particular case where \(j = i+1\) the corresponding data values \(Z_{\alpha ,i}\) and \(Z_{\beta ,{i+1}}\) also have Cross-HV. Figure 6 illustrates the concept of Cross-HV with two toy time series.

Schematic diagram of the Cross-Horizontal Visibility (Cross-HV) concept. (a) Illustrates a toy bivariate time series \(\varvec{Z}^{yellow},\varvec{Z}^{blue}\), (in the same scale), and the corresponding maximum time series. (b) Represents the cross-horizontal visibility by solid bi-color lines (yellow and blue) connecting the data bars of the time series components \(\varvec{Z}^{yellow}\) and \(\varvec{Z}^{blue}\), for the first six timestamps

Cross-HV condition establishes a direct geometric relationship between lagged timestamps of two variables using cross-horizontal visibility lines. The objective is to capture cross-lagged dependencies and joint dynamic properties between pairs of time series. Timestamps from two layers are then connected if the internal dynamics of both time series (i.e., between the specified timestamps) do not change in a way that restricts the cross-horizontal visibility criterion.

Some properties of Cross-HV:

-

Reciprocal Footnote 5: It means that for all \(\alpha ,\beta ,i,j\) with \(\alpha \ne \beta , i \ne j\), if \(Z_{\alpha ,i}\) has Cross-HV to \(Z_{\beta ,j}\) then \(Z_{\beta ,j}\) has Cross-HV to \(Z_{\alpha ,i}\). In Fig. 6 the first six data points are highlighted and bi-colored lines are used to indicate the (reciprocal) visibility.

-

Non-Symmetric: Cross-HV is not symmetric. This means that for all \(\alpha ,\beta ,i,j\) with \(\alpha \ne \beta , i \ne j\), if \(Z_{\alpha ,i}\) has Cross-HV to \(Z_{\beta ,j}\), \(Z_{\beta ,i}\) may not have Cross-HV to \(Z_{\alpha ,j}\). Figure 6 illustrates the lack of symmetry in Cross-HV: the observation \(Z_{blue,2}\) has Cross-HV to \(Z_{yellow,6}\) (highlighted by the red ellipse), but \(Z_{yellow,2}\) does not have Cross-HV to \(Z_{blue,6}\).

3.2 Multilayer horizontal visibility graph

A Multilayer Horizontal Visibility Graph (MHVG) is obtained by mapping an MTS, \(\varvec{Y}=\{Y^{\alpha }\}_{\alpha =1}^{m}\), into an MNet structure, \(M = (V_M, E_M, V, \varvec{L})\), using the concepts of HV and Cross-HV, as follows. Each unique timestamp, t, is mapped into an unique entity in \(V_M\) and each component time series, \(\varvec{Y}^{\alpha }\), is mapped into a layer, \(L_{\alpha } \in \varvec{L}\), \(\alpha =1, \ldots , m\), using the HVG method described in Sect. 2.3, thus establishing the intra-layer edges, \((v_i^{\alpha }, v_j^{\alpha }) \in E_M\), \(i,j=1, \ldots , T\), \(i \ne j\). Then inter-layer edges \((v_i^{\alpha }, v_j^{\beta }) \in E_M,\) between any two layers \(L_{\alpha }\) and \(L_{\beta }\), \(\alpha ,\beta = 1, \ldots , m\), \(\alpha \ne \beta\) and \(i,j = 1, \dots , T\), \(i \ne j\) are established using the Cross-HV described above in Sect. 3.1. Note that to establish Cross-HV all the time series \(\varvec{Y}^{\alpha }\), \(\alpha =1, \ldots ,m\) must be in the same scale, which may require a pre-processing step of the dataset \(\varvec{Y}\), comprising the Min-Max scaling of each time series. The mapping is illustrated in Fig. 7, with toy bivariate time series, for the sake of simplicity.

Schematic diagram of the Multilayer Horizontal Visibility Graph (MHVG) algorithm: (a) represents the original toy time series, (b) shows the Min-Max re-scaled time series and maximum time series, (c) showcases the Cross-Horizontal Visibility (Cross-HV) with the edges between adjacent timestamps omitted for simplicity (detail for the first four timestamps), and (d) illustrates the MHVG: solid black lines represent the intra-layer edges (the HVGs), dashed lines the inter-layer edges (the Cross-HVGs), and the red lines highlight inter-layer edges between nodes corresponding to non-adjacent timestamps

From the generated MHVG, we can identify the intra-layer graphs, \(\{G^{\alpha }\}_{\alpha = 1}^m\) and the inter-layer graphs, \(G^{\alpha , \beta }\), for \(\alpha , \beta = 1, \ldots , m\) and \(\alpha \ne \beta\). The former corresponds to the HVGs associated with each time series component and it is represented by the adjacency matrix \(\varvec{A}^{\alpha }\) with \(A_{i,j}^{\alpha } = 1\) if \((v_i^{\alpha },v_j^{\alpha }) \in E_M\) and 0 otherwise. The second set of graphs corresponds to the Cross-Horizontal Visibility Graphs (Cross-HVG) associated with each pair of different time series components and it is represented by the adjacency matrix \(\varvec{B}^{\alpha ,\beta } = \left[ {\begin{smallmatrix} \varvec{0} & \varvec{A}^{\alpha ,\beta } \\ \varvec{A}^{\beta ,\alpha } & \varvec{0} \end{smallmatrix}} \right]\) with \(A_{i,j}^{\alpha , \beta } = 1\) and \(A_{j,i}^{\beta , \alpha } = 1\) if \((v_i^{\alpha },v_j^{\beta }) \in E_M\) and 0 otherwise. With this representation, we aim to map the serial dependencies within the data onto intra-layer graphs and the cross-dependencies onto inter-layer graphs. Consider the hypothetical case (trivial, but not real) of a two-component MTS where both components precisely represent the same values, that is, exhibiting the same behavior and correlations. In such an instance, the intra-layer adjacency matrices (\(\varvec{A}^{1}\) and \(\varvec{A}^{2}\)), as well as the inter-layer adjacency matrices (\(\varvec{A}^{1,2}\) and \(\varvec{A}^{2,1}\)) resulting from the MHVG mapping, would be identical, reflecting exactly the same correlations.

Algorithm 1 describes the Cross-HV method which establishes the inter-layer edges between two given time series. This involves two for-loops that check for Cross-HV between a pair of time series components (Eq. 4). The first for-loop (lines 3–9) tests the Cross-HV from a given node \(v_i^{\alpha }\) to the nodes \(v_j^{\beta }\) (with \(j>i\)) to its right (i.e., for timestamps newer than it), while the second for-loop (lines 10–16) tests the Cross-HV from a given node \(v_i^{\alpha }\) to the nodes \(v_j^{\beta }\) (with \(j<i\)) to its left (i.e., for timestamps older than it). For the sake of simplicity, Algorithm 1 does not include all the implementation details related to the Cross-HV condition. In the Appendix A Algorithm 3, we provide the same algorithm with the necessary details for reproducibility.

Algorithm 2 describes the complete mapping of a multivariate time series into a Multilayer Horizontal Visibility Graph. The procedure starts by mapping each time series component of an MTS into the respective HVGs (following the condition of Eq. 3) and allocating them to individual layers on an MNet (line 7). Next, all series are re-scaled to the range [0, 1] using Mix-Max scaling (line 8). Finally, for pairwise time series components, the corresponding maximum time series is obtained (line 14), and the Cross-HV method is applied to establish the inter-layer connections (line 15), resulting in the creation of the MHVG corresponding to the given MTS (line 18).

The Cross-HV method requires time series to be on the same scale because the visibility method is sensitive to their values. If one series has much larger values, connections can only be made between the nearest neighbors of a given observation, as higher values can block visibility. We use the Min-Max method to scale the series to the same range. However, this scaling does not handle high noise levels well, and noisy data should be cleaned before scaling. If noise is low, the method maps the time series behavior accurately. Future work will explore the impact of noise on this method.

3.3 MHVG: computational complexity

The computational complexity of the proposed mapping method depends on two variables: T, the time series length, and m the number of variables. It is determined by the two for-loops (lines 5–9 and lines 10–17 of Algorithm 2) and the procedures performed within them. The computational complexity of the first for-loop is \(\mathcal {O}(m(T\log T))\), as the loop iterates m times (representing each time series component), and for each iteration, the HVG algorithm is executed. Using the version proposed in Lan et al. (2015), this incurs a complexity of \(\mathcal {O}(T\log T)\). The second for-loop consists of two nested loops iterating through pairs of time series components. In each iteration, the call to the CHVG function determines the complexity of the loop. The proposed Cross-HVG mapping has a complexity \(\mathcal {O}(T^2)\), which is determined by the for-loops that test the cross-horizontal visibility. Thus, the total complexity of the second for-loop in Algorithm 2 is \(\mathcal {O}(m^2T^2)\). Finally, Algorithm 2 has computational complexity \(\mathcal {O}(m^2T^2)\) which is determined by the procedure that tests Cross-HV between all pairs of time series in an MTS.

It is worth noting that the complexity of Algorithm 3 can be improved by applying the divide-and-conquer strategy proposed by Lan et al. (2015). This improvement would result in a final complexity of the MHVG method of \(\mathcal {O} (m^2 (T \log T))\). This improvement is particularly noteworthy in practical scenarios since, in real datasets, the variable T is typically much larger than the variable m. Additionally, given the independence of the individual HVG procedures (for each layer) and Cross-HVG procedures (for each pair of layers), parallelization techniques can also improve the runtime complexity.

Figure 8 empirically illustrates the polynomial behavior analyzed above with synthetic data. For simplicity and without loss of generality, we simulate independent white noise processes (neither serial nor cross-correlation) with two fixed lengths, \(T = 10^4\) and \(T = 10^5\), and for increasing the number of time series components m, ranging from 2 to 30, with increments of 2. Additionally, the simulations were also conducted for \(m = 2\) and \(m = 6\), varying the time series length, T, from \(10^2\) to \(7.5\times 10^6\), where the exponent is increased from 2 to 6 and the coefficient with increments of 2.5. The simulation and mapping process of the resulting MTS onto an MHVG was executed on a "common" laptop with an AMD Ryzen processor with 8 cores and 16 GB of RAM.

Illustration of time complexity behavior for the MHVG mapping with synthetic data (independent white noises). Runtime, in seconds, for (a) two fixed time series lengths, \(T = 10^4\) and \(T = 10^5\), and increasing the number of time series components m, ranging from 2 to 30; (b) fixed number of components, \(m = 2\) and \(m = 6\), and increasing time series length, T, from \(10^2\) to \(7.5\times 10^6\)

4 A novel set of multivariate time series features

Feature extraction has become a popular and manageable approach to analyzing high-dimensional time series data. Several sets of features for univariate time series have been proposed in the literature and have been made available in widely-used programming languages such as R and Python (Wang et al., 2006; Hyndman et al., 2015; Fulcher, 2018; Kang et al., 2020; Montero-Manso et al., 2020; Silva et al., 2022; Bonifati et al., 2022). However, this level of development and availability is not mirrored in the context of multivariate time series. This section aims to introduce a set of features for multivariate time series leveraging on mapping an MTS into an MHVG, encompassing: i) conventional topological features extended to MNets and ii) a novel feature specifically designed for MNets.

4.1 Topological features extended to MNets

Common network topological features, such as node centrality, graph distances, clustering, and community, can be naturally extended to an MNet structure, including all the subgraphs mentioned in Sect. 2.2. To illustrate, let us consider a local centrality measure like the degree \(k_i\) of a node \(v_i\), representing the number of edges adjacent to \(v_i\). In an MNet, we can compute the following three variants of \(k_i\), for each layer \(L_{\alpha }, \alpha =1, \ldots ,m\). Here, we use the symbols \(\prec\) (\(\preceq\)) to denote the inter-layer edges from a “source" layer \(L_{\alpha }\) (includes intra-layer edges of the “source" layer), to a “destination" layer \(L_{\beta }\), with \(\beta \ne \alpha\),

-

intra-layer degree: \(k_i^{\alpha } = \sum _{j}{A_{ij}^{\alpha }}\)

-

inter-layer degree: \(k_i^{\alpha \prec \beta } = \sum _{j}{A_{ij}^{\alpha ,\beta }}\)

-

all-layer degree: \(k_i^{\alpha \preceq \beta } = k_i^{\alpha } + k_i^{\alpha \prec \beta }\)

Note that local inter and all-layer topological measurements are asymmetric measures, meaning that \(k_i^{\alpha \prec \beta } \ne k_i^{\beta \prec \alpha }\) and \(k_i^{\alpha \preceq \beta } \ne k_i^{\beta \preceq \alpha }\), as the measure is relative to node-layer \(v_i^{\alpha }\) or node-layer \(v_i^{\beta }\).

Similarly, we can extend global topological features to MNets. Within the same example, consider the average degree \(\bar{k}\) of a graph, which calculates the arithmetic mean of the degree \(k_i\) of all nodes in the graph, we can compute three variants of \(\bar{k}\) in an MNet,

-

average intra-degree: \(\bar{k}^{\alpha } = \frac{1}{|V_{\alpha }|} \sum _{i}{k_i^{\alpha }}\)

-

average inter-degree: \(\bar{k}^{\alpha ,\beta } = \frac{1}{|V_{\alpha }|+|V_{\beta }|} \left( \sum _{i}{k_i^{\alpha \prec \beta }} + \sum _{j}{k_j^{\beta \prec \alpha }} \right)\)

-

average all-degree: \(\bar{k}^{\alpha , \beta }_{all} = \frac{1}{|V_{\alpha }|+|V_{\beta }|} \left( \sum _{i}{k_i^{\alpha \preceq \beta }} + \sum _{j}{k_j^{\beta \preceq \alpha }} \right)\)

Note that these features involve all elements (nodes and edges) of the corresponding (sub)graphs and are therefore symmetric features.

In general, any common local topological feature \(F_i\) can be easily extended to intra-layer features, \(F_i^{\alpha }\), by computing them over individual layers, to inter-layer features, \(F_i^{\alpha \prec \beta }\), by computing over inter-layer edges, and to all-layer features, \(F_i^{\alpha \preceq \beta }\), which compute over both intra-layer and inter-layer edges of a given node \(v_i\). And, a global topological feature \(\varvec{F}\) can be computed in the subgraphs of the MNet: intra (\(\varvec{F}^{\alpha }\)), inter (\(\varvec{F}^{\alpha , \beta }\)), and all-layer graphs (\(\varvec{F}^{\alpha , \beta }_{all}\)).

Motivated by the definition of MNet features introduced above and by the set of features proposed in Silva et al. (2022) for UTS analysis, namely features based on the concepts of node centrality, graph distances, clustering, and community, we propose the following topological features for each layer (or for each pair of layers) of an MNet, in particularly of an MHVGs:

-

Average degree: The average intra-degree \(\bar{k}^{\alpha }\), average inter-degree \(\bar{k}^{\alpha ,\beta }\) and average all-degree \(\bar{k}^{\alpha , \beta }_{all}\), as formulated above.

-

Average path length: Geodesic distances \(d_{i, j}, i \ne j\) between node \(v_i\) and \(v_j\) corresponding to the length of the shortest paths between them, where the path length is the number of edges in the path. The average (intra-/inter-/all-)path length (\(\bar{d}^{\alpha }, \bar{d}^{\alpha ,\beta }\) and \(\bar{d}^{\alpha ,\beta }_{all}\)) is the arithmetic mean of the shortest paths among all pairs of nodes in (intra, inter, and all-layer) graph.

-

Number of communities: The number of (intra-/inter-/all-)communities, (\(S^{\alpha }, S^{\alpha ,\beta }\) and \(S^{\alpha , \beta }_{all}\)), is the number of groups/communities of nodes that are densely connected on the corresponding subgraph. These communities are found by performing random walks on the subgraph (intra, inter, and all-layer graph) so that short random walks tend to stay in the same community until the modularity value (defined below) cannot be increased anymore.

-

Modularity: (Intra-/Inter-/All-)modularity, (\(Q^{\alpha }, Q^{\alpha ,\beta }\) and \(Q^{\alpha , \beta }_{all}\)), measures how well a specific division of (intra-/inter-/all-)graph is into communities.

-

Degree distribution: A fundamental property of a graph is the degree distribution P(k). This topological feature measures the fraction of nodes in a graph with degree k. Three extended variants of degree distributions are also proposed, namely, \(P(k^{\alpha }), P(k^{\alpha \prec \beta })\) and \(P(k^{\alpha \preceq \beta })\), in layer \(L_{\alpha }, \alpha = 1, \ldots , m\), which is associated with its intra-layer degree, inter-layer degree and all-layer degree, respectively.

-

Jensen-Shannon divergence: To measure the similarity between pairs of layers in an MNet, we use the Jensen-Shannon divergence (JSD) to quantify the distance between two degree distributions. \(JSD^{\alpha , \beta }_{intra}, JSD_{inter}^{\alpha , \beta }\) and \(JSD_{all}^{\alpha , \beta }\) measure, respectively, the JSD between intra-layer, inter-layer and all-layer degree distributions of layers \(L_{\alpha }\) and \(L_{\beta }\). For example, \(JSD^{\alpha , \beta }_{intra}\) is defined as follows:

$$\begin{aligned} JSD(P(k^{\alpha })||P(k^{\beta })) = \frac{1}{2} KLD(P(k^{\alpha }) || Q(k)) + \frac{1}{2} KLD(P(k^{\beta }) || Q(k)) \end{aligned}$$where \(Q(k) = \frac{1}{2} (P(k^{\alpha }) + P(k^{\beta }))\) and KLD is the Kullback–Leibler divergence Footnote 6:

$$\begin{aligned} KLD(P(k^{\alpha }) || Q(k)) = \sum _k{P(k^{\alpha }) \log _2 \left( \frac{P(k^{\alpha })}{Q(k)} \right) }. \end{aligned}$$

Table 1 provides additional formulation details.

4.2 Ratio degree: a new MNet topological feature

The ratio degree is introduced here as a new topological feature for multilayer graph analysis, aiming to capture the relationship between intra-layer and inter-layer connections.

For any two different layers \(L_{\alpha }\) and \(L_{\beta }\), \(\alpha ,\beta = 1, \ldots , m\), with \(\alpha \ne \beta\), the ratio degree of node \(v_i^{\alpha }, i = 1, \ldots , T,\) from layer \(L_{\alpha }\) to layer \(L_{\beta }\) is defined as

The average ratio degree, \(\bar{r}^{\alpha \preceq \beta }\), is the arithmetic mean of the ratio degree of all nodes of layer \(L^{\alpha }\). This ratio between the external and the internal connections of a given layer provides insights into the prevalence of the Cross-HV relationships between nodes across layers compared to their horizontal visibility relationships within the layer. Thus, if \(\bar{r}^{\alpha \preceq \beta } > 1\), it indicates an emphasis on the Cross-HV relation. Conversely, if \(\bar{r}^{\alpha \preceq \beta } < 1\), it suggests a significance on horizontal visibility relations within the layer. If \(\bar{r}^{\alpha \preceq \beta }=1\), both serial and cross-horizontal visibility relationships are similar. Note that the ratio degree and the average ratio degree are asymmetric measures. Therefore, it is not necessarily true that if \(r_i^{\alpha \preceq \beta } = r_i^{\beta \preceq \alpha }\), then \(r_i^{\beta \preceq \alpha } = r_i^{\alpha \preceq \beta }\).

In this work, we will use the term relational features to refer to similarity measures like JSD and average ratio degree.

4.3 The MHVG feature set

The set of features defined above and summarized in Table 1 constitutes a feature set extracted from MHVG, as shown in Fig. 9. This feature set is proposed for characterizing a Multivariate Time Series (MTS).

Schematic diagram of the process for extracting the multilayer network feature set. A multivariate time series \(\varvec{Y}\) is mapped into a Multilayer Horizontal Visibility Graph. Global topological features (\(\bar{k}, \bar{d}, S, Q\) and P(k)) and relational features (\(\bar{r}\) and JSD) are computed for each of its subgraphs (intra-layer, inter-layer, and all-layer graphs)

5 Empirical evaluation of MHVG

In this section, we investigate the utility of the mapping method and the feature set introduced above for characterizing and analyzing MTS data. We also assess the applicability of the methodology and its performance in various MTS mining tasks. We begin by utilizing synthetic bivariate time series generated from bivariate time series models to control for MTS correlation properties, both serial and cross-correlation. Subsequently, we apply the methodology to real and benchmark datasets, encompassing a diverse range of characteristics and featuring data with varying dimensions, including differences in time series length and the number of time series components.

Before delving into a detailed analysis of the proposed MHVG feature set and its performance on different time series models, we provide some considerations about the methodology’s implementation. Finally, we finish with the MTS mining analysis on both the synthetic and benchmark datasets to verify the applicability and advantage of introducing inter-layer edges to the horizontal visibility method.

5.1 Implementation details

For illustrative purposes, in Fig. 9, a bivariate time series, \(m=2\) was considered. However, the method is extensible to any value of m, as shown in the subsequent analysis of the benchmark datasets.

We follow the Algorithm 2 to map a multivariate time series \(\varvec{Y}\) into the corresponding MHVG. Specifically, we use the algorithm implemented as proposed in Lan et al. (2015) to create the intra-layer HVGs, \(\{G^{\alpha }\}_{\alpha = 1}^m\), for each time series component, \(\{Y_{\alpha ,t}\}, \alpha =1, \ldots , m, t = 1, \ldots , T\), and Algorithm 3, which follows the mapping method based on cross-horizontal visibility criteria proposed in Sect. 3.1, to establish the inter-layer edges between the pairwise layers. We fix the subgraphs corresponding to intra-, inter-, and all-layers via the corresponding adjacency submatrices of the resulted MHVG (see Sect. 2.2). Finally, we compute the corresponding topological features described in Sect. 4 using the methodologies and algorithms described below.

The average degree (\(\bar{k}\)) and average ratio degree (\(\bar{r}\)) are calculated by the arithmetic mean of the degrees \(k_i\) and ratio degrees \(r_i\), respectively, of all nodes \(v_i\) in the respective subgraph. In this work, the average path length (\(\bar{d}\)) follows an algorithm that computes the average shortest path length between all pairs of nodes (of respective subgraphs) using a breadth-first search algorithm. To calculate the number of communities (S), the function used makes use of the known "Louvain" algorithm that finds community structures by multi-level optimization of modularity (Q) feature (see Blondel et al., 2008 for more details). The degree distributions (P(k)) and Jensen-Shannon divergence (JSD) are implemented as described in the above section.

We used C++ and its needed set of libraries (such as igraph and standard libraries) to implement the data structure to store an MNet and its subgraph structures and to compute the functions to extract the topological features. For reproducibility purposes, the datasets and results are made available in https://github.com/vanessa-silva/MHVG2MTS.

5.2 Synthetic datasets

We consider a set of six bivariate time series models (choosing \(m=2\) for reasons of simplicity in generation and explanation for subsequent analysis), denoted as Data Generating Processes (DGPs), summarized in Table 2. These MTS models exhibit particular characteristics in terms of serial and cross-correlation (see Sect. 2.1), including: White Noise (WN) processes simulating noise effects, with one process devoid of any correlation and the other featuring strong contemporaneous correlation; Vector Autoregression (VAR) processes simulate smooth linear data, presenting both serial and cross-correlation; Vector Generalized Autoregressive Conditional Heteroskedasticity (VGARCH) processes simulate nonlinear data with persistent periods of high or low volatility. The parameters of each DGP are chosen to ensure that the data exhibits a range of serial and cross-correlation properties, as described in Table 2. A detailed description of the DGP, their properties, and computational details are presented in Appendix B.

For each DGP in Table 2, we generated 100 instances of length \(T = 10000\). As illustrated in Fig. 9, we map each bivariate time series into an MHVG, highlight the intra-, inter-, and all-layer graphs, and extract the corresponding topological features.

To illustrate the procedure, we represent in Fig. 10 one instance with 300 observations of each DGP and the corresponding cross-correlation (CCF) plot (first two columns of the plot), the intra-, inter-, and all-layers degree distributions on a semi-logarithmic scale (last three columns of the plot). These degree distributions are computed as the arithmetic mean of the degree distributions of the 100 simulated instances.

Analysis plots of DGP. The first column shows a subset of timestamps of each DGP, the second plots the cross-correlation function between their corresponding time series components, and the last three show the degree distribution of the MHVGs. The plots corresponding to the degree distributions are on a semi-logarithmic scale. Lines of different colors refer to each DGP model (\(\varvec{Y}\)), where the darkest colors refer to their first components (\(\varvec{Y}^1\)) and the lighter ones to the second (\(\varvec{Y}^2\))

The plots presented in Fig. 10 clearly show that the degree distributions are different across the DGPs. In fact, Luque et al. (2009) has shown that the intra-layer degree distribution for white noise (uncorrelated data) follows a power law \(\left( {P(k) = 1 / 3\left( {2 / 3} \right)^{{k - 2}} } \right)\) and our results indicate that strong serial correlation leads to intra-layer degree distributions that are positively skewed: as illustrated in Appendix B, the sVAR is the only DGP that produces data with strong serial correlation. The degree distribution for the inter-layer subgraphs does not have an algebraic close form even in the simplest case of two uncorrelated white noises. However, extensive simulations indicate that it does not follow the power law \(P(k) = 1/3\left( {2/3} \right)^{{k - 2}}\), as illustrated in the first line, third column of Fig. 10. The plots also indicate that inter-layer degree distribution depends both on the correlation between the two time series (CCF represented in the second column of the plot in Fig. 10) and the serial correlation within each time series. Moreover, we note that inter-layer degree distributions for sVAR are positively skewed, for VGARCH models, wGARCH and sGARCH, are exponentially shaped while the remaining are approximately linear. Once again, a slower decay of the lagged correlation leads to a longer tail in the degree distribution. Also, the degree distribution curves corresponding to the VGARCH models stand out from the others, especially the inter- and all-layer degree distributions. The exponential shape of the inter-layer degree distributions is induced by the heteroscedasticity and volatility clusters in the data which limit cross-horizontal visibility to the nearest neighbors.

5.3 MTS feature set via MHVG

The results for all the 21 features introduced in Sect. 4 and all DGPs, organized by subgraph structure, are summarized, mean (standard deviation), in Tables 3 and 4. The values have been Min-Max normalized (across models) for comparison purposes since the range of the different features varies across the different DGPs. The cells in the tables are colored with a gradient based on the mean values: cells with a maximum value of 1 are colored red, cells with a minimum value of 0 are colored white, and the remainder with a hue of red color proportional to its value.

The results indicate that each set of features — intra-layer (first two columns of each feature in Table 3), inter-layer (third column of each feature in Table 3), all-layer (top of Table 4) and relational (bottom of Table 4) — distinguishes two groups of MTS based on properties related to correlation (serial and cross) and volatility clustering.

Focusing on the characteristic of conditional heteroscedasticity, we can observe that the proposed mapping is sensitive to this property, particularly the Cross-HVG mapping. The visibility criterion is dependent on the very high and very low values of the data over time. Therefore, the heteroscedasticity and correlation between data impose additional limits on the cross visibility between values of different variables over time, leading to greater variability in the topological features of the HVG and, especially, Cross-HVG. This observation is evident in Table 3 where the standard deviation values corresponding to the 100 samples of each DGP analyzed are higher for the VGARCH models.

To understand which MNet topological features capture the specific properties of the MTS models, we perform PCA on the feature space. Figure 11 represents a bi-plot obtained using the intra-, inter-, all-layer, and relational features, with the two principal components (PC) explaining \(83.8\%\) of the variance. The bi-plots resulting from PCA in restricted feature sets are represented in Fig. 15 in Appendix B). Overall, we can say that the average degree and average ratio degree, \(\bar{k}\) and \(\bar{r}\), are positively and negatively correlated, respectively, with the average path length, \(\bar{d}\). The community-related features of the intra- and all-layer graphs are positively correlated but less correlated with the community-related features of the inter-layer graphs. The features that most contribute to the first two PCs are the \(\bar{k}^{1,2}, S^{1,2}\) and \(Q^{1,2}\) of the inter-layer graphs, the \(\bar{k}^{1, 2}_{all}\) of the all-layer graphs, the \(\bar{r}^{1 \preceq 2}\) and \(\bar{r}^{2 \preceq 1}\) of the relational layers, and \(Q^1\) and \(Q^2\) of the intra-layer graphs (see Fig. 16 of Appendix B). Figure 11 clearly shows four groups of models, VGARCH, sVAR, cBWN, and a group constituted by wVAR and iBWN, identifying the topological features that characterize them.

Bi-plot of the first two principal components (PCs) of principal component analysis for the Data Generating Process (DGP). Each DGP is represented by a different color and the arrows represent the contributions of the MNet features to the PCs. The larger the size, sharpness, and closeness to the red the greater the contribution of the feature. Features placed together are positively correlated and those placed on opposite quadrants are negatively correlated

The strong ACF and CCF of the sVAR are represented by high values for the number of communities and modularity in its intra- and all-layer graphs. Inter-layer graphs present higher values of community-related features for VGARCH models. The average path length represents the VGARCH models, in particular, the average path length of the all-layer graphs tries to distinguish both wGARCH and sGARCH. The strong contemporaneous CCF of cBWN is represented by high values of average ratio degree features, such as the average degree values of its inter and all-layer graphs. iBWN and wVAR are represented by high values of intra-layer average degree.

The above results indicate that the topological features extracted from MHVG are adequate as a set of MTS features. To further explore this idea, in the next section, we proceed with multivariate time series mining tasks of the DGPs and the benchmark datasets via MNet topological features.

5.4 Mining time series with MNet features

To conclude our analysis and demonstrate the applicability and practicality of the proposed method, this section presents two direct applications in one of the most common time series mining tasks. We begin with a multivariate time series clustering task, an unsupervised learning, and then proceed with a classification task, a supervised learning. We analyze these mining tasks on both the synthetic dataset described in the above Section and on real-world and benchmark datasets.

For benchmark MTS datasets, we select 19 datasets from the UEA Multivariate Time Series Classification archive (Bagnall et al., 2018). Table 5 summarizes the general description of each MTS dataset, including dataset size, time series length, number of dimensions/components UTS, number of classes, and the dataset type. The UEA Multivariate Time Series Classification archive offers a total of 30 MTS datasets; however, for simplicity, we selected the datasets with the same length and without missing values. These data are diverse, representing different types and exhibiting significant variety in terms of dimensionality. The dataset size varies between 27 and 10992, with \(T \in [8, 2500]\), \(m \in [2,28]\), and the number of different classes ranging from 2 to 26.

5.4.1 Multivariate time series clustering

In this section, we demonstrate the utility of MNet features, particularly those related to both intra- and inter-layer edges, in MTS data mining tasks, focusing on clustering through a feature-based approach (Maharaj et al., 2019). For a given set of MTS, we compute the MHVG feature vectors. These vectors are then Min-Max rescaled to the [0, 1] range and organized in a feature data matrix. The PCs are computed without the need for z-score normalization in PCA computation. Finally, we apply the k-means clustering algorithm to all PCs (100% of variability). We choose k-means for its speed and widespread use, even though it requires the pre-specification of the number of clusters, which is not a problem for this work. We employ commonly used clustering evaluation metrics: Average Silhouette (AS), Adjusted Rand Index (ARI), and Normalized Mutual Information (NMI). AS does not require ground truth, while the ARI and NMI do. The range of values is \([-1,1]\) for ARI and AS and [0, 1] for NMI.

The clustering analysis results for the 100 instances of the six DGP datasets (see Table 2) are summarized in Fig. 12. This summary reflects 10 repetitions of the clustering analysis using the set of 21 MHVG features proposed in Sect. 4.3 and the k-means algorithm for different values of k (with \(k \in [2,12]\)). Different evaluation metrics suggest different optimal numbers of clusters for the dataset: ARI indicates \(k=5\), followed by \(k = 6\) (the ground truth value), while the NMI indicates either \(k=6\) or \(k=7\). Metric AS, using the silhouette method to assess cluster quality indicates \(k=3\). It is interesting to note that the elements of the three clusters are: the sVAR models in one cluster, the VGARCH models in another, and the WN and wVAR models in the third cluster, indicating that the DGPs were clustered according to correlation (serial and cross) and volatility properties.

Results of DGP’s clustering evaluations using the three evaluation metrics, (a) Adjusted Rand Index (ARI), (b) Normalized Mutual Information (NMI), and (c) Average Silhouette (AS). The evaluation is computed for different numbers of clusters, k, given as input in the k-means algorithm. The results refer to 10 repetitions of the clustering analysis using the MNet features set

Figure 13 shows the results for DGP clustering using the 21 MHVG features and the k-means algorithm with \(k=6\) (ground truth). Notably, there is a perfect attribution of cBWN and sVAR samples across two different clusters (clusters 1 and 3), the attribution of iBWN and wVAR samples to the same cluster (cluster 2), and a uniform attribution of GARCH samples (wGARCH and sGARCH) across two clusters (4 and 5). Additionally, some samples of cBWN and sGARCH are found in cluster 6, consistent with the chosen \(k = 6\) and the inherent dissimilarity of VGARCH samples in the feature space.

Attribution of the samples corresponding to instances of MTS models to the different clusters based on intra, inter, all-layer, and relational feature sets. Models are displayed on the horizontal axis, each represented by a unique color. Colored points represent bivariate time series samples according to their model process, while the vertical axis denotes the assigned cluster number

We also conducted the clustering analysis considering the different MNet feature sets described in Sect. 4. The summarized results in Table 6 indicate that inter-layer edges contain valuable information about MTS data, leading to improved cluster outcomes. Subgraphs incorporating both intra-layer edges and inter-layer edges contribute additional information, resulting in enhanced clustering compared to using only intra-layer features (compare the last three rows with the first two). The findings reveal that Cross-HVG of inter-layer edges capture distinct properties of MTS data, yielding improved clustering outcomes as anticipated. Also, note that ARI and NMI results from the set of intra-layer features are favorable, reflecting the shared statistical process in the DGP being analyzed for the two time series components. The inherent properties of each process are effectively captured by the HVG mapping methods.

To complement and validate our findings, we replicated the aforementioned experiment on benchmark datasets detailed in Table 5. Our focus was initially on evaluating the performance of the feature set derived from MHVGs in automatically determining the optimal number of clusters (k) for each dataset, utilizing clustering evaluation metrics such as ARI, NMI, and AS.

We followed the same methodology, conducting 10 repetitions of clustering analyses for each dataset. The optimal value of k was determined by assessing the clustering performance using the selected clustering metric. To achieve this, we performed the clustering task for k values ranging from 2 to the ground truth value plus 6. The first k that yielded the highest metric value was selected (i.e., the smallest k if there were multiple with the highest metric value). In Appendix C is presented Table 9 with the results, that is, with the mean values corresponding to the 10 repetitions (standard deviations were non-significant). Overall, the obtained k values align closely with the ground truth for ARI and NMI metrics, except for datasets 3 and 6 — Human Activity Recognition (HAR) datasets from smartwatches — where k values significantly deviate. Furthermore, we can observe that the AS metric produced less favorable results, indicating a low intra-cluster density in the generated clusters. This observation can be attributed to the number of features used in the experiments, as we did not employ techniques to reduce dimensionality or select more representative features. Additionally, it may be influenced by the normalization method used for the feature vector, which, in this case, was the Min-Max method.

Subsequently, we fixed the value of k to the ground truth of each dataset and applied the clustering methodology, as described earlier, to different subsets of topological features extracted from the corresponding MHVGs. The results of the 10 repetitions of the experiment, using the NMI evaluation metric, are presented in Table 7.

With the benchmark dataset, it becomes evident that the features associated with inter-layer edges, introduced in this work, contribute additional information that enhances the clustering results. Notably, only dataset 2 exhibits superior results for the subset of intra-layer features. Moreover, it is worth highlighting that the least favorable outcomes are observed for ECG and EEG datasets, suggesting that the topological features proposed in this work may not be optimally suited for this particular type of data record. Conversely, the most favorable results are associated with HAR and MOTION data types, particularly for datasets where classes are linked to movement determination or recognition. Interestingly, the results seem to be independent of the dataset dimensions, including the time series length, the number of components/dimensions, and the number of classes.

5.4.2 Multivariate time series classification

We extended our analysis of MHVG feature sets to a classification problem, specifically a supervised learning task, using benchmark multivariate time series datasets. The datasets were divided into training and testing sets following the procedure outlined in Bagnall et al. (2018). Leveraging the Random Forest ensemble learning method, we conducted ten iterations of training and predictions for each dataset. Results were evaluated using the widely accepted Accuracy metric, which computes the ratio of correct predictions to the total number of predictions.

Table 8 presents the results obtained using different sets of MHVG features, with the values representing the mean of the 10 repetitions for each experiment. The most favorable outcomes were consistently achieved when utilizing the entire set of MHVG features, combining all topological feature subsets. Notably, these results surpassed those obtained with the intra-layer feature set. This aligns with our findings from the clustering analysis, reinforcing the significance of the proposed topological inter-layer features and validating the Cross-HVG method. Similar to the clustering analysis, performance was very good for certain datasets, particularly those related to HAR and MOTION data, while less favorable for others, namely ECG and EEG data. Additionally, there appears to be no discernible relationship with the dimensionality characteristics of the analyzed data.

In conclusion, while the overall results may not exhibit exceptional strength, we consider them good and promising, especially given the global nature of the features employed and the absence of more advanced techniques, such as feature selection, which could further enhance the results.

6 Conclusion

In this paper, we introduce a novel mapping method for representing multivariate time series as multilayer networks. Our approach involves mapping each time series component into a layer using the horizontal visibility concept and establishing inter-layer edges based on a new concept called cross-horizontal visibility. While our focus is on horizontal visibility due to promising results in prior works (Silva, 2018; Silva et al., 2022), the proposed Cross-HVG algorithm can naturally extend to other visibility concepts and versions of visibility graphs.

To evaluate our proposed method, we analyzed a specific set of topological features in multilayer networks, drawing inspiration from concepts such as node centrality, graph distances, clustering, communities, and similarity measures. These features are extracted from three types of subgraphs within the resulting multilayer network structure: intra-layer graphs (containing only intra-layer edges), inter-layer graphs (containing only inter-layer edges), and all-graphs (containing both intra and inter-layer edges).

We conduct an empirical evaluation on a set of 600 synthetic bivariate time series, grouped into 6 different and specific statistical models, resulting in a dataset of 600 MHVGs. To understand the potential of our proposed mapping method, we first analyze the degree distributions of the intra-, inter-, and all-layer subgraphs within MHVGs. We were able to identify the specific properties of multivariate time series models. Notably, we establish connections between weak and strong cross-correlation and the shapes of inter-layer degree distribution curves, as well as weak and strong autocorrelation and the shapes of intra-layer degree distribution curves. Specifically, we identified that the persistence of strong correlations is related to distributions with a positively skewed shape, indicating a longer right tail. Additionally, beyond correlation properties — both auto and cross, contemporaneous and lagged — the properties of statistical models, such as heteroscedasticity and smoothness, result in inter-layer and all-layer degree distributions with distinct shape curves.

We also investigated the global topological features of the subgraphs (intra, inter, and all-layer). Community-related features from intra and all-layer graphs highlight the strong VAR models, with high and persistent autocorrelation and cross-correlation, as well as with smoothness, and from inter-layer graphs highlight the heteroscedasticity models, both weak and strong VGARCH models. However, the values of average path length from all-layer graphs seem to distinguish the properties of weakly and strongly correlated. The average intra-degree has higher values for independent white noise and weak VAR models but does not distinguish them.

The new relational feature proposed in this work, average ratio degree, seems to differentiate well highly correlated contemporary white noise models, resulting from the similarity between its inter-layer degree and intra-layer degree features. In the context of this work, based on the synthetic models chosen for analysis, the Jensen-Shannon divergence (JSD) measure is only useful to characterize strong VAR models that present very strong and very persistent correlations, unlike the other models. However, this feature can prove to be particularly valuable in real scenarios, where the different variables within a multivariate time series can follow different dynamic models - captured effectively by this feature. We observe this fact, for example, in some real data sets, namely, AtrialFibrillation and StandWalkJump (ECG type data), and Epilepsy and Cricket (HAR type data). Notably, in our analysis, we verify that these relational features emerge as one of the topological features that contribute most to the principal components in the resulting PCA for these datasets.

Our focus is not on competition or comparison with existing methods, proof of this is the non-introduction of weights on visibility edges — a factor known to improve clustering results in the univariate setting. Instead, we emphasize the validity of our proposed mapping method and features by conducting clustering and classification mining analyses on synthetic time series and benchmark datasets. The results highlight the value of incorporating inter-layer edges within the MHVGs. This complements intra-layer edges, enabling more effective differentiation among the different statistical processes underlying the multivariate data models and improves the clustering and classification results in predicting the ground truth classes for real datasets.

While we acknowledge the potential for further advancements by integrating machine and deep learning approaches with our proposed methodology, our current focus lies in presenting an innovative approach for multivariate time series analysis based, essentially, on interpretable features. This is a crucial aspect of meaningful data analysis — an attribute often overlooked in machine and deep learning models.

To conclude, this work introduces a procedure based on multivariate mapping to derive a set of features for multivariate time series applicable to various mining tasks. The method revolves around the concept of visibility, where the construction of the graph involves mapping point values onto individual nodes. While scalability challenges may arise for large datasets, as indicated by the complexity analysis conducted, future efforts could enhance computational efficiency through algorithmic techniques. Additionally, exploring alternative mapping methods, like those based on transition and proximity concepts, may also offer solutions for scalability concerns.

Furthermore, the visibility criteria addressed in this work may exhibit sensitivity, implying less resistance to noise present in real data. In future work, we plan to analyze our methodology within the Limited Penetrable Visibility Graph (Zhou et al., 2012) method, as it demonstrated noise resistance in the univariate setting.

Looking ahead, our future work will explore bridging the aforementioned gap by not only integrating our methodologies with advanced machine and deep learning techniques but also by conducting comparative analyses with recent literature. Additional open issues include exploring new topological features of multilayer networks and incorporating advanced feature selection techniques (supervised and/or unsupervised) to reduce the number of topological features that increase with the number of time series components.

This comprehensive exploration aims to position our work within the evolving landscape of time series data analysis, offering both interpretable features and the potential for further performance enhancements.

Data Availability

The raw data is available at https://github.com/vanessa-silva/MHVG2MTS.

Code Availability

The code is available from the authors on request.

Notes

Initially introduced in Silva et al. (2023)

A multilayer network is node-aligned if all layers contain all entities, that is, \(V_M = V \times L_1 \times \ldots \times L_m\).

Note also that the inter-layer graphs have the characteristics of a bipartite graph. A bipartite graph \(G^{\alpha ,\beta }\) has a node set \(V^{\alpha ,\beta }\) that can be divided into two disjoint and independent sets \(V^{\alpha }\) and \(V^{\beta }\) (\(V^{\alpha ,\beta } = V^{\alpha } \cup V^{\beta }\) and \(V^{\alpha } \cap V^{\beta } = \emptyset\)) and every edge connects a node in \(V^{\alpha }\) to a node in \(V^{\beta }\).

We use the term reciprocal where mutual is used in the literature since we find the former is a more appropriate term to describe the property as it implies a back-and-forth nature.

Note that JSD is a symmetrical version of the asymmetrical measure KLD.

References

Albert R, Barabási AL (2002) Statistical mechanics of complex networks. Rev Mod Phys 74(1):47. https://doi.org/10.1103/RevModPhys.74.47

Bagnall AJ, Dau HA, Lines J, et al (2018) The UEA multivariate time series classification archive, 2018. CoRR abs/1811.00075. http://arxiv.org/abs/1811.00075,

Barabási AL (2016) Network Science. Cambridge University Press, Cambridge, United Kingdom

Barbosa SM (2012) mAr: Multivariate AutoRegressive analysis. https://CRAN.R-project.org/package=mAr, r package version 1.1-2

Blondel VD, Guillaume JL, Lambiotte R et al (2008) (2008) Fast unfolding of communities in large networks. J Stat Mech: Theory Exp 10:P1000. https://doi.org/10.1088/1742-5468/2008/10/P10008

Boccaletti S, Bianconi G, Criado R et al (2014) The structure and dynamics of multilayer networks. Phys Rep 544(1):1–12. https://doi.org/10.1016/j.physrep.2014.07.001

Bonifati A, Buono FD, Guerra F et al (2022) Time2Feat: learning interpretable representations for multivariate time series clustering. Proc VLDB Endowment. https://doi.org/10.14778/3565816.3565822

Cipra T (2020) Time series in economics and finance. Springer, Wiesbaden, Deutschlan. https://doi.org/10.1007/978-3-030-46347-2

Costa LdF, Rodrigues FA, Travieso G et al (2007) Characterization of complex networks: a survey of measurements. Adv Phys 56(1):167–242. https://doi.org/10.1080/00018730601170527

Eroglu D, Marwan N, Stebich M et al (2018) Multiplex recurrence networks. Phys Rev E 97(1):01231. https://doi.org/10.1103/physreve.97.012312

Fulcher BD (2018) Feature-based time-series analysis. In: Feature engineering for machine learning and data analytics. CRC Press, Boca Raton, Florida, p 87–11https://doi.org/10.1201/9781315181080

Fulcher BD, Jones NS (2014) Highly comparative feature-based time-series classification. IEEE Trans Knowledge Data Eng 26(12):3026–3037. https://doi.org/10.1080/00018730601170527