Abstract

Reduction operations appear frequently in algorithms. Because of their mathematical invariance properties (assuming that round-off errors can be tolerated), it is reasonable to ignore ordering constraints on the computation of reductions in order to take advantage of the computing power of parallel machines.
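As a small illustration of this invariance (a sketch only, not code from the paper), the Python fragment below reduces the same values once in sequential order and once by combining per-worker partial results. The helper name `chunked_reduce`, the worker count, and the cyclic split of the input are assumptions made for this example.

```python
from functools import reduce
import operator


def chunked_reduce(values, op, identity, num_workers):
    """Reduce `values` by combining one partial result per simulated worker.

    `op` must be associative and commutative, because the cyclic split
    below changes the order in which elements are combined.
    """
    partials = []
    for w in range(num_workers):
        # Hypothetical split: worker w takes elements w, w + num_workers, ...
        partials.append(reduce(op, values[w::num_workers], identity))
    # Combine the partial results into the final value.
    return reduce(op, partials, identity)


ints = list(range(1, 101))
sequential_int = reduce(operator.add, ints, 0)
reordered_int = chunked_reduce(ints, operator.add, 0, 4)

floats = [0.1] * 10
sequential_float = reduce(operator.add, floats, 0.0)
reordered_float = chunked_reduce(floats, operator.add, 0.0, 4)
```

For integer addition the two orders agree exactly. For floating-point addition they may differ in the last bits, which is the round-off error that the reordering has to tolerate.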

One obvious and widely used compilation approach for reductions is syntactic pattern recognition. Either the source language includes explicit reduction operators, or certain specific loops are recognized as equivalent to known reductions. Once such patterns are recognized, hand-optimized code for the reductions is incorporated into the target program. The advantage of this approach is simplicity. However, it imposes a restriction on the reduction loops: no data dependence other than that caused by the reduction operation itself is allowed in them.

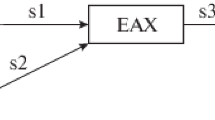

In this paper, we present a parallelizing technique, the interleaving transformation, for distributed-memory parallel machines. This optimization exploits the parallelism embodied in reduction loops through a combination of data dependence analysis and region analysis. Data dependence analysis identifies the loop structures and the conditions that can trigger this optimization. Region analysis divides the iteration domain into a sequential region and an order-insensitive region. Parallelism is achieved by distributing the iterations in the order-insensitive region among multiple processors. We use a triangular solver as an example to illustrate the optimization. Experimental results on various distributed-memory parallel machines, including the Connection Machine CM-5, the nCUBE, the IBM SP-2, and a network of Sun workstations, are reported.
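The abstract does not give the transformed code, so the following Python fragment is only a rough sketch of the idea for a lower-triangular solve, not the paper's algorithm. It assumes a cyclic assignment of columns to simulated processors; the function names, the `acc` partial-sum arrays, and the single-process simulation of `num_procs` processors are all hypothetical choices made for this illustration.

```python
def forward_substitution(L, b):
    """Sequential reference: solve L x = b for a lower-triangular matrix L."""
    n = len(b)
    x = [0.0] * n
    for i in range(n):
        s = 0.0
        for j in range(i):
            # Reduction over j; it also depends on previously computed x[j].
            s += L[i][j] * x[j]
        x[i] = (b[i] - s) / L[i][i]
    return x


def forward_substitution_interleaved(L, b, num_procs):
    """Same solve, with reduction updates spread over simulated processors."""
    n = len(b)
    x = [0.0] * n
    # acc[p][i]: partial sum for row i held by simulated processor p.
    acc = [[0.0] * n for _ in range(num_procs)]
    for j in range(n):
        # Sequential part: x[j] needs every contribution from columns < j,
        # so the per-processor partial sums for row j are combined here.
        total = sum(acc[p][j] for p in range(num_procs))
        x[j] = (b[j] - total) / L[j][j]
        # Order-insensitive part: contributions of column j to later rows
        # may be accumulated in any order; column j is assigned cyclically
        # to one simulated processor (an assumption of this sketch).
        owner = j % num_procs
        for i in range(j + 1, n):
            acc[owner][i] += L[i][j] * x[j]
    return x


L = [[2.0, 0.0, 0.0],
     [1.0, 3.0, 0.0],
     [4.0, 5.0, 6.0]]
b = [2.0, 7.0, 32.0]
x_seq = forward_substitution(L, b)
x_par = forward_substitution_interleaved(L, b, num_procs=2)
```

On a real distributed-memory machine the combination of partial sums would be a communication step between processors. Here it is an ordinary loop, so that the split between the dependent and the order-insensitive work stays visible.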

Cite this article

Wu, JJ. An Interleaving Transformation for Parallelizing Reductions for Distributed-Memory Parallel Machines. The Journal of Supercomputing 15, 321–339 (2000). https://doi.org/10.1023/A:1008168528240

DOI: https://doi.org/10.1023/A:1008168528240