Abstract

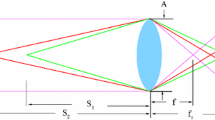

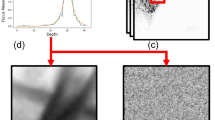

Depth from Focus (DFF) and Depth from Defocus (DFD) methods are theoretically unified with the geometric triangulation principle. Fundamentally, the depth sensitivities of DFF and DFD are no different from those of stereo (or motion) based systems having the same physical dimensions. Contrary to common belief, DFD does not inherently avoid the matching (correspondence) problem. Nor do DFD and DFF avoid the occlusion problem any more than triangulation techniques do, but they are more stable in the presence of such disruptions. The fundamental advantage of DFF and DFD methods is the two-dimensionality of the aperture, which allows more robust estimation. We analyze the effect of noise at different spatial frequencies and derive the optimal changes of the focus settings in DFD. These results elucidate the limitations of methods based on depth of field and provide a foundation for a fair performance comparison between DFF/DFD and shape from stereo (or motion) algorithms.
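
The claim that defocus and stereo have the same depth sensitivity for the same physical dimensions can be illustrated with a minimal numerical sketch. It is not taken from the paper: it assumes an ideal thin lens, purely geometric optics (no diffraction or aberrations), and a hypothetical stereo pair made of two pinholes placed at opposite rims of the lens aperture, sharing the same sensor distance. All function and parameter names are illustrative. Under these assumptions, the blur-circle diameter of a defocused point should equal the stereo disparity measured relative to the in-focus distance.

```python
import math

def image_distance(f, u):
    """Thin-lens law 1/f = 1/u + 1/v, solved for the image distance v."""
    return 1.0 / (1.0 / f - 1.0 / u)

def blur_diameter(u, u0, f, D):
    """Geometric blur-circle diameter for a point at depth u when the
    sensor is placed where depth u0 is in focus (aperture diameter D)."""
    v = image_distance(f, u0)       # sensor position
    v_img = image_distance(f, u)    # where the point at u actually focuses
    # Similar triangles on the light cone converging at v_img.
    return D * abs(v - v_img) / v_img

def pinhole_projection(x_pinhole, u, v):
    """Sensor x-coordinate of an on-axis point at depth u, seen through a
    pinhole at lateral position x_pinhole with the sensor at distance v."""
    return x_pinhole * (1.0 + v / u)

def stereo_disparity(u, u0, f, B):
    """Disparity of a point at depth u relative to a point at depth u0,
    for two pinholes separated by baseline B (assumed setup)."""
    v = image_distance(f, u0)

    def separation(depth):
        left = pinhole_projection(-B / 2.0, depth, v)
        right = pinhole_projection(B / 2.0, depth, v)
        return right - left

    return abs(separation(u) - separation(u0))

if __name__ == "__main__":
    f, u0, D = 0.05, 2.0, 0.02   # metres; illustrative values only
    for u in (1.0, 1.5, 3.0, 10.0):
        b = blur_diameter(u, u0, f, D)
        d = stereo_disparity(u, u0, f, B=D)
        print(f"u = {u:5.1f} m  blur = {b:.6e} m  disparity = {d:.6e} m")
```

With the baseline set equal to the aperture diameter, both quantities reduce to D·v·|1/u0 − 1/u| in this model, so their derivatives with respect to depth coincide as well.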

Cite this article

Schechner, Y.Y., Kiryati, N. Depth from Defocus vs. Stereo: How Different Really Are They?. International Journal of Computer Vision 39, 141–162 (2000). https://doi.org/10.1023/A:1008175127327

DOI: https://doi.org/10.1023/A:1008175127327