Abstract

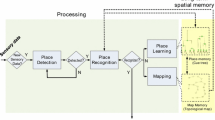

We describe a general framework for learning perception-based navigational behaviors in autonomous mobile robots. A hierarchical, behavior-based decomposition of the control architecture is used to facilitate efficient modular learning. Lower-level reactive behaviors, such as collision detection and obstacle avoidance, are learned using a stochastic hill-climbing method, while higher-level goal-directed navigation is achieved using a self-organizing sparse distributed memory. The memory is initially trained by teleoperating the robot along a small number of paths within a given domain of interest. During training, the vectors in both the sensory space and the motor space are continually adapted using a form of competitive learning, yielding basis vectors that efficiently span the sensorimotor space. After training, the robot navigates from arbitrary locations to a desired goal location using motor output vectors computed by a saliency-based weighted averaging scheme. The pervasive problem of perceptual aliasing in finite-order Markovian environments is handled by allowing both the current perceptual input and the set of immediately preceding perceptual inputs to predict the motor output vector for the current time instant. We describe experimental and simulation results obtained using a mobile robot equipped with bump sensors, photosensors, and infrared receivers, navigating within an enclosed, obstacle-ridden arena. The results indicate that the method performs successfully in a number of navigational tasks exhibiting varying degrees of perceptual aliasing.
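
The core mechanism summarized above can be illustrated with a small Python sketch: a sparse distributed memory whose sensory addresses and motor contents are adapted by competitive learning, read out by saliency-weighted averaging, and keyed on a window of recent percepts. This is not the authors' implementation. The rule for recruiting unused locations, the winner-take-all update, the Gaussian saliency kernel, and all names and parameters (`PredictiveSDM`, `demo`, `k_active`, `sigma`, `novelty_radius`, `lr_addr`, `lr_motor`) are hypothetical choices made for illustration.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class PredictiveSDM:
    """Toy self-organizing sparse distributed memory (hypothetical sketch).

    Each location pairs a sensory address with a motor vector. An address is
    the current percept concatenated with `history` preceding percepts, so two
    places that look identical now but were reached differently get different keys.
    """

    def __init__(self, capacity, history=1, k_active=3, sigma=0.25,
                 novelty_radius=0.5, lr_addr=0.2, lr_motor=0.2):
        if capacity < 1:
            raise ValueError("capacity must be positive")
        self.capacity = capacity              # maximum number of memory locations
        self.history = history                # preceding percepts included in the key
        self.k_active = k_active              # locations activated per query (assumed)
        self.sigma = sigma                    # width of the saliency kernel (assumed)
        self.novelty_radius = novelty_radius  # recruit a new location beyond this distance (assumed)
        self.lr_addr = lr_addr
        self.lr_motor = lr_motor
        self.addresses = []
        self.motors = []

    def _key(self, percepts):
        """Concatenate the last history + 1 percepts (oldest first) into one address."""
        window = percepts[-(self.history + 1):]
        if len(window) != self.history + 1:
            raise ValueError("need at least history + 1 percepts")
        return [v for p in window for v in p]

    def _nearest(self, key):
        """(squared distance, index) pairs of the k_active nearest locations."""
        ranked = sorted((sq_dist(key, a), i) for i, a in enumerate(self.addresses))
        return ranked[:self.k_active]

    def train(self, percepts, motor):
        """One teleoperated sample: recruit a free location or adapt the winner."""
        key = self._key(percepts)
        near = self._nearest(key)
        far = not near or math.sqrt(near[0][0]) > self.novelty_radius
        if far and len(self.addresses) < self.capacity:
            self.addresses.append(list(key))
            self.motors.append(list(motor))
            return
        _, win = near[0]
        addr, mot = self.addresses[win], self.motors[win]
        # Competitive learning: only the winning address and its motor vector move.
        for j in range(len(addr)):
            addr[j] += self.lr_addr * (key[j] - addr[j])
        for j in range(len(mot)):
            mot[j] += self.lr_motor * (motor[j] - mot[j])

    def recall(self, percepts):
        """Saliency-weighted average of the motor vectors of the active locations."""
        near = self._nearest(self._key(percepts))
        if not near:
            raise RuntimeError("memory is empty; train it first")
        d_min = near[0][0]
        # Gaussian saliency, shifted by the closest distance so weights never all underflow.
        weights = [math.exp(-(d - d_min) / (2 * self.sigma ** 2)) for d, _ in near]
        total = sum(weights)
        dim = len(self.motors[0])
        return [sum(w * self.motors[i][j] for w, (_, i) in zip(weights, near)) / total
                for j in range(dim)]


def demo(history):
    """Hypothetical aliasing test: one current view reached along two paths."""
    sdm = PredictiveSDM(capacity=10, history=history, k_active=2, sigma=0.1)
    view = [0.5, 0.5]                                 # identical current percept
    for _ in range(5):
        sdm.train([[0.0, 0.0], view], [1.0, 0.0])     # arrived from one side: motor A
        sdm.train([[1.0, 1.0], view], [0.0, 1.0])     # arrived from the other: motor B
    return sdm.recall([[0.0, 0.0], view]), sdm.recall([[1.0, 1.0], view])


if __name__ == "__main__":
    print(demo(history=1))  # two distinct motor vectors
    print(demo(history=0))  # one blended motor vector, returned for both queries
```

With `history=1`, the two aliased situations receive different keys and recall essentially the two teleoperated commands. With `history=0`, both samples land on the same location, whose motor vector becomes a blend of the two commands, so the robot cannot tell the situations apart. The shifted Gaussian weighting is only one plausible reading of "saliency-based"; an inverse-distance weighting would serve the same illustrative purpose.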

Cite this article

Rao, R.P., Fuentes, O. Hierarchical Learning of Navigational Behaviors in an Autonomous Robot using a Predictive Sparse Distributed Memory. Autonomous Robots 5, 297–316 (1998). https://doi.org/10.1023/A:1008810406347
