Abstract

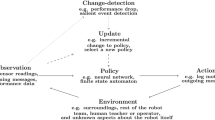

Generating teams of robots that can perform their tasks over long periods of time requires the robots to respond to continual changes in the capabilities of team members and in the state of the environment and mission. In this article, we describe the L-ALLIANCE architecture, which enables teams of heterogeneous robots to dynamically adapt their actions over time. This architecture, an extension of our earlier work on ALLIANCE, is a distributed, behavior-based architecture intended for applications that consist of a collection of independent tasks. The key issue addressed in L-ALLIANCE is how robots should select which tasks to perform during their mission, even when the team includes multiple robots with heterogeneous, continually changing capabilities. In this approach, robots monitor teammates that perform common tasks and evaluate their performance based on task completion time. Robots then use this information throughout the lifetime of their mission to automatically update their control parameters. After describing the L-ALLIANCE architecture, we discuss the results of implementing this approach on a physical team of heterogeneous robots performing proof-of-concept box-pushing experiments. The results illustrate the ability of L-ALLIANCE to enable lifelong adaptation of heterogeneous robot teams to continuing changes in team member capabilities and in the environment.
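The monitor-evaluate-update loop summarized above can be illustrated with a small sketch. It is a simplified illustration, not the article's own formulation: it assumes each robot keeps running averages of the completion times it observes for itself and its teammates, derives a hypothetical impatience rate as `threshold / expected_time` (so a robot that expects to finish a task quickly becomes motivated to take it over sooner), and prefers the task on which its expected time is smallest relative to its fastest teammate. The names `TaskTimeMonitor`, `impatience_rate`, and `choose_task`, and the `threshold` and `default_time` parameters, are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


class TaskTimeMonitor:
    """Hypothetical per-robot record of observed task completion times.

    Each robot keeps its own copy and records both its own completions and
    those it observes from teammates performing the same tasks.
    """

    def __init__(self) -> None:
        self._total: Dict[Tuple[str, str], float] = defaultdict(float)
        self._count: Dict[Tuple[str, str], int] = defaultdict(int)

    def record(self, robot: str, task: str, duration: float) -> None:
        # Accumulate one observed completion time for `robot` on `task`.
        self._total[(robot, task)] += duration
        self._count[(robot, task)] += 1

    def expected_time(self, robot: str, task: str) -> Optional[float]:
        # Running mean of observed times, or None if never observed.
        n = self._count.get((robot, task), 0)
        if n == 0:
            return None
        return self._total[(robot, task)] / n


def _time_or_default(monitor: TaskTimeMonitor, robot: str, task: str,
                     default_time: float) -> float:
    # Fall back to an assumed prior when no completions have been observed.
    t = monitor.expected_time(robot, task)
    return default_time if t is None else t


def impatience_rate(monitor: TaskTimeMonitor, robot: str, task: str,
                    threshold: float, default_time: float) -> float:
    # Hypothetical control parameter: faster expected completion means
    # motivation toward the task grows faster.
    return threshold / _time_or_default(monitor, robot, task, default_time)


def choose_task(monitor: TaskTimeMonitor, me: str, teammates: Iterable[str],
                tasks: Iterable[str], default_time: float) -> Optional[str]:
    # Pick the task where my expected time divided by the fastest teammate's
    # expected time is lowest; ties keep the earlier task in `tasks`.
    teammates = list(teammates)
    best_task, best_ratio = None, float("inf")
    for task in tasks:
        mine = _time_or_default(monitor, me, task, default_time)
        others = [_time_or_default(monitor, r, task, default_time)
                  for r in teammates] or [default_time]
        ratio = mine / min(others)
        if ratio < best_ratio:
            best_task, best_ratio = task, ratio
    return best_task


if __name__ == "__main__":
    m = TaskTimeMonitor()
    m.record("r1", "push_left", 10.0)
    m.record("r1", "push_left", 20.0)
    m.record("r2", "push_left", 5.0)
    m.record("r1", "push_right", 8.0)
    m.record("r2", "push_right", 16.0)
    print(choose_task(m, "r1", ["r2"], ["push_left", "push_right"], 30.0))
    print(choose_task(m, "r2", ["r1"], ["push_left", "push_right"], 30.0))
```

In this toy run, robot `r1` is relatively faster on `push_right` and `r2` on `push_left`, so each settles on a different task. As new completion times are recorded over the mission, the averages shift and the selection follows.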




About this article

Cite this article

Parker, L.E. Lifelong Adaptation in Heterogeneous Multi-Robot Teams: Response to Continual Variation in Individual Robot Performance. Autonomous Robots 8, 239–267 (2000). https://doi.org/10.1023/A:1008977508664


DOI: https://doi.org/10.1023/A:1008977508664