Abstract

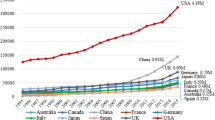

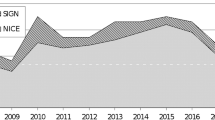

The aim of this study was to draw attention to the possible existence of "quirks" in bibliographic databases and to discuss their implications. We analysed the time-trends of "publication types" (PTs) relating to clinical medicine in the most frequently searched medical database, MEDLINE. We counted the number of entries corresponding to 10 PTs indexed in MEDLINE (1963-1998) and drew up a matrix of [10 PTs × 36 years], which we analysed by correspondence factor analysis (CFA). The analysis showed that, although the "internal clock" of the database was broadly consistent, there were periods of erratic activity. Thus, observed trends might not always reflect true publication trends in clinical medicine but rather quirks in MEDLINE's indexing of PTs. There may be, for instance, different limits for retrospective tagging of entries relating to different PTs. The time-trend for Reviews of Reported Cases differed substantially from that of the other publication types.
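The abstract does not give the computational details of the CFA. As a minimal sketch, assuming the classical SVD-based formulation of correspondence analysis, a [PT × year] count matrix could be decomposed as below. The counts are hypothetical toy values (3 PTs × 5 years), not MEDLINE data, and the function name `correspondence_analysis` is our own illustration. Each axis's principal inertia measures how much of the table's departure from independence (its chi-square statistic divided by the grand total) that axis explains; PTs whose time profiles differ strongly from the average profile end up far from the origin on the factorial map.

```python
import numpy as np


def correspondence_analysis(counts):
    """Classical correspondence analysis of a two-way contingency table.

    Rows are assumed to be publication types and columns years, mirroring
    the [PT x year] layout described above (an assumption for illustration).
    Returns principal row coordinates, principal column coordinates and
    the principal inertias (squared singular values) of the non-trivial axes.
    """
    N = np.asarray(counts, dtype=float)
    P = N / N.sum()                    # correspondence matrix
    r = P.sum(axis=1)                  # row masses
    c = P.sum(axis=0)                  # column masses
    # Standardised residuals: D_r^{-1/2} (P - r c^T) D_c^{-1/2}
    S = (P - np.outer(r, c)) / np.sqrt(np.outer(r, c))
    U, sv, Vt = np.linalg.svd(S, full_matrices=False)
    # At most min(I, J) - 1 axes carry inertia; drop the trivial last one
    k = min(N.shape) - 1
    U, sv, Vt = U[:, :k], sv[:k], Vt[:k, :]
    row_coords = U * sv / np.sqrt(r)[:, None]       # D_r^{-1/2} U Sigma
    col_coords = Vt.T * sv / np.sqrt(c)[:, None]    # D_c^{-1/2} V Sigma
    return row_coords, col_coords, sv ** 2


# Hypothetical yearly entry counts for three made-up publication types
counts = [
    [120, 150, 180, 210, 260],   # PT A: steady growth
    [10, 30, 80, 160, 300],      # PT B: rapid rise
    [90, 85, 80, 70, 60],        # PT C: slow decline
]

rows, cols, inertia = correspondence_analysis(counts)
# Share of total inertia explained by each factorial axis
print(np.round(inertia / inertia.sum(), 3))
```

Plotting the first two columns of `rows` and `cols` on the same axes gives the usual factorial map; with a real 10 × 36 table, years with erratic indexing would show up as points that break the otherwise smooth chronological path of the year coordinates.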

Despite the quirks, quite rational explanations could be provided for the strongest correlations among PTs. The main factorial map revealed how the advent of the Randomised Controlled Trial (RCT) and the accumulation of a critical mass of literature may have increased the rate of publication of research syntheses (meta-analyses, practice guidelines, etc.). The RCT is now the "gold standard" in clinical investigation and is often a key component of formal "systematic reviews" of the literature. Medical journal editors have contributed greatly to this situation and have thus helped to foster the birth and development of a new paradigm, "evidence based medicine", which assumes that expert opinion is biased and therefore relies heavily, virtually exclusively, on critical analysis of the peer-reviewed literature. Our exploratory factor analysis, however, leads us to question the consistency of MEDLINE's indexing procedures and also the rationale for MEDLINE's choice of descriptors. Databases have biases of their own, some of which are not independent of expert opinion. User-friendliness should not make us forget that outputs depend on how the databases are constructed and structured.




Cite this article

Ojasoo, T., Maisonneuve, H. & Doré, JC. Evaluating publication trends in clinical research: How reliable are medical databases? Scientometrics 50, 391–404 (2001). https://doi.org/10.1023/A:1010598313062
