Abstract

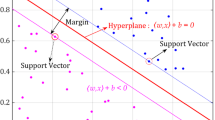

In this paper we extend the conformal method of modifying a kernel function to improve the performance of Support Vector Machine (SVM) classifiers [14, 15]. The kernel function is conformally transformed in a data-dependent way, using information about the Support Vectors obtained in a primary training run. We further investigate the performance of the modified Gaussian Radial Basis Function (RBF) and polynomial kernels. Simulation results on two artificial data sets show that the method is very effective, especially for correcting poorly chosen kernels.
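The core operation can be illustrated with a short sketch. It assumes the standard conformal form K̃(x, x') = c(x) c(x') K(x, x'), which keeps every Gram matrix positive semidefinite because that matrix equals D K D with D = diag(c(x_1), ..., c(x_n)). The choice of c(x) as a sum of Gaussians centred on the support vectors with one shared width `tau` follows the earlier construction in [14] as we understand it. The data-dependent widths used in this paper may differ, so treat `conformal_factor` and `tau` as hypothetical. The support vectors are taken as given here. In practice they would come from the primary SVM training, after which the classifier is retrained with the modified kernel.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def squared_distance(x: Vector, y: Vector) -> float:
    """Squared Euclidean distance ||x - y||^2."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def rbf_kernel(sigma: float) -> Kernel:
    """Gaussian RBF kernel K(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    def k(x: Vector, y: Vector) -> float:
        return math.exp(-squared_distance(x, y) / (2.0 * sigma ** 2))
    return k


def poly_kernel(degree: int, offset: float = 1.0) -> Kernel:
    """Polynomial kernel K(x, y) = (<x, y> + offset)^degree."""
    def k(x: Vector, y: Vector) -> float:
        return (sum(a * b for a, b in zip(x, y)) + offset) ** degree
    return k


def conformal_factor(support_vectors: Sequence[Vector], tau: float) -> Callable[[Vector], float]:
    """Hypothetical factor c(x): a sum of Gaussians centred on the support vectors.

    c(x) is large near the support vectors (i.e. near the decision boundary)
    and decays towards 0 far from them. `tau` is an assumed, shared width.
    """
    def c(x: Vector) -> float:
        return sum(math.exp(-squared_distance(x, sv) / (2.0 * tau ** 2))
                   for sv in support_vectors)
    return c


def conformal_kernel(base: Kernel, c: Callable[[Vector], float]) -> Kernel:
    """Conformally transformed kernel K~(x, y) = c(x) c(y) K(x, y)."""
    def k(x: Vector, y: Vector) -> float:
        return c(x) * c(y) * base(x, y)
    return k


def gram_matrix(kernel: Kernel, points: Sequence[Vector]) -> List[List[float]]:
    """Gram matrix G[i][j] = kernel(points[i], points[j]); for a conformal kernel this is D K D."""
    return [[kernel(p, q) for q in points] for p in points]


if __name__ == "__main__":
    # Hypothetical support vectors, standing in for the output of a primary SVM fit.
    svs = [(0.0, 0.0), (1.0, 1.0)]
    k_mod = conformal_kernel(rbf_kernel(sigma=1.0), conformal_factor(svs, tau=0.5))
    for row in gram_matrix(k_mod, [(0.0, 0.0), (0.5, 0.5), (3.0, 3.0)]):
        print(["%.4f" % v for v in row])
```

The same wrapper applies unchanged to `poly_kernel`, since the transformation only rescales the base kernel by c(x) c(x'). Retraining is left out. Any SVM solver that accepts a precomputed Gram matrix could be fed the output of `gram_matrix`.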

References

[1] Boser, B., Guyon, I. and Vapnik, V.: A training algorithm for optimal margin classifiers. In: Proceedings of the Fifth Annual Workshop on Computational Learning Theory, ACM Press, New York, 1992.

[2] Cortes, C. and Vapnik, V.: Support Vector Networks. Machine Learning, 20 (1995), 273–297.

[3] Vapnik, V.: The Nature of Statistical Learning Theory. Springer, New York, 1995.

[4] Aizerman, M. A., Braverman, E. M. and Rozonoer, L. I.: Theoretical foundations of the potential function method in pattern recognition learning. Automation and Remote Control, 25 (1964), 821–837.

[5] Schölkopf, B., Sung, K., Burges, C. J. C., Girosi, F., Niyogi, P., Poggio, T. and Vapnik, V.: Comparing Support Vector Machines with Gaussian kernels to Radial Basis Function classifiers. IEEE Trans. Signal Processing, 45 (1997), 2758–2765.

[6] Smola, A. J., Schölkopf, B. and Müller, K. R.: The connection between regularization operators and support vector kernels. Neural Networks, 11 (1998), 637–649.

[7] Girosi, F.: An equivalence between sparse approximation and support vector machines. Neural Computation, 10 (1998), 1455–1480.

[8] Williams, C. K. I.: Prediction with Gaussian processes: From linear regression to linear prediction and beyond. In: M. I. Jordan (ed.), Learning in Graphical Models, Kluwer, 1997.

[9] Sollich, P.: Probabilistic methods for Support Vector Machines. In: Advances in Neural Information Processing Systems 12, pp. 349–355, MIT Press, 2000.

[10] Seeger, M.: Bayesian model selection for Support Vector machines, Gaussian processes and other kernel classifiers. In: Advances in Neural Information Processing Systems 12, pp. 603–609, MIT Press, 2000.

[11] Opper, M. and Winther, O.: Gaussian process classification and SVM: mean field results and leave-one-out estimator. In: A. J. Smola, P. Bartlett, B. Schölkopf and D. Schuurmans (eds), Advances in Large Margin Classifiers, pp. 43–56, MIT Press, Cambridge, MA, 2000.

[12] Schölkopf, B., Simard, P., Smola, A. and Vapnik, V.: Prior knowledge in support vector kernels. In: Advances in Neural Information Processing Systems 10, MIT Press, 1998.

[13] Burges, C. J. C.: Geometry and Invariance in Kernel Based Methods. In: B. Schölkopf, C. J. C. Burges and A. J. Smola (eds), Advances in Kernel Methods, pp. 89–116, MIT Press, 1999.

[14] Amari, S. and Wu, S.: Improving Support Vector machine classifiers by modifying kernel functions. Neural Networks, 12 (1999), 783–789.

[15] Amari, S. and Wu, S.: An information-geometrical method for improving the performance of Support Vector machine classifiers. In: Proceedings of ICANN99, pp. 85–90, 1999.

[16] Burges, C. J. C.: A tutorial on Support Vector machines for pattern recognition. Data Mining and Knowledge Discovery, 2 (1998), 121–167.

[17] Smola, A. J. and Schölkopf, B.: A tutorial on Support Vector regression. NeuroCOLT Technical Report TR-1998-030, 1998.

[18] Cristianini, N. and Shawe-Taylor, J.: An Introduction to Support Vector Machines and Other Kernel-based Learning Methods. Cambridge University Press, 2000.

About this article

Cite this article

Wu, S., Amari, SI. Conformal Transformation of Kernel Functions: A Data-Dependent Way to Improve Support Vector Machine Classifiers. Neural Processing Letters 15, 59–67 (2002). https://doi.org/10.1023/A:1013848912046

DOI: https://doi.org/10.1023/A:1013848912046